Isaac ROS Visual Global Localization

Source code on GitHub.

Overview

This package includes the ROS node and launch files for visual global localization. It takes the global localization map along with raw or rectified stereo images as inputs and outputs the global pose. The package supports both single and multiple stereo image inputs.

Quickstart

Set Up Development Environment

Set up your development environment by following the instructions in getting started.

Clone

isaac_ros_commonunder${ISAAC_ROS_WS}/src.cd ${ISAAC_ROS_WS}/src && \ git clone -b release-3.2 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git isaac_ros_common

(Optional) Install dependencies for any sensors you want to use by following the sensor-specific guides.

Note

We strongly recommend installing all sensor dependencies before starting any quickstarts. Some sensor dependencies require restarting the Isaac ROS Dev container during installation, which will interrupt the quickstart process.

For Nova Carter Setup follow: Nova Carter Getting Started.

For x86 Rosbag Replay Testing follow:

Add your data volume to the Docker container by adding the following line to the

.isaac_ros_dev-dockerargsfile under scripts, for example:echo -e '-v /mnt/nova_ssd/recordings:/mnt/nova_ssd/recordings' > ${ISAAC_ROS_WS}/src/isaac_ros_common/scripts/.isaac_ros_dev-dockerargsAdd the extra image layer that is needed for mapping and localization. If you are not setting up the Nova Carter robot, remove

nova_carterfromCONFIG_IMAGE_KEY.echo -e "CONFIG_IMAGE_KEY=ros2_humble\nCONFIG_DOCKER_SEARCH_DIRS=(../docker)" > ${ISAAC_ROS_WS}/src/isaac_ros_common/scripts/.isaac_ros_common-configLaunch the Docker container using the

run_dev.shscript:cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Install or Build Isaac ROS Visual Global Localization

Install the prebuilt Debian package in the container:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-visual-global-localization

Open a new terminal outside container and clone this repository under

${ISAAC_ROS_WS}/src:cd ${ISAAC_ROS_WS}/src && \ git clone -b release-3.2 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_mapping_and_localization.git isaac_ros_mapping_and_localization

Launch container and use

rosdepto install the package’s dependencies:sudo apt-get update

rosdep update && rosdep install --from-paths ${ISAAC_ROS_WS}/src/isaac_ros_mapping_and_localization/isaac_ros_visual_global_localization --ignore-src -y

Build the package from source:

colcon build --symlink-install --packages-up-to isaac_ros_visual_global_localization --base-paths ${ISAAC_ROS_WS}/src/isaac_ros_mapping_and_localization/isaac_ros_visual_global_localization

Source the ROS workspace:

Note

Make sure to repeat this step in every terminal created inside the Docker container.

Because this package was built from source, the enclosing workspace must be sourced for ROS to be able to find the package’s contents.

source install/setup.bash

Localization with Rosbag Replay

We provide an end-to-end ROS launch file for replaying a rosbag and running global localization. It continuously run global localization on incoming frames, even after a localization pose has already been computed. It also activates the 3D Lidar to create a point cloud overlay on the occupancy map using the result poses.

Create a map by following Tutorial with Map Creation.

Record a localization rosbag with isaac_ros_nova_recorder Quickstart. Please keep the localization trajectory within 1m from the map trajectory’s coverage.

Launch container and run the following command to replay the localization rosbag and localize the robot:

ros2 launch isaac_ros_visual_global_localization run_cuvgl_rosbag_replay.launch.py \ rosbag_path:=$ROS_BAG_PATH \ camera_names:='front_back_left_right' \ vgl_map_dir:=${MAP_DIR}/cuvgl_map \ occupancy_map_yaml_file:=${MAP_DIR}/occupancy_map.yaml

Note

For live testing, please see ISAAC Perceptor Localization

Visualize Results

Visualize the /map, /front_3d_lidar/lidar_points_filtered, and /visual_localization/pose topics in RViz or Foxglove. The alignment between the point cloud and the occupancy map indicates the accuracy of the result pose, which is used to transform the point cloud into the occupancy map frame. The axes shows the localization pose, with the red axes pointing in the the robot’s forward direction.

Try More Examples

To continue your exploration, check out the following suggested examples:

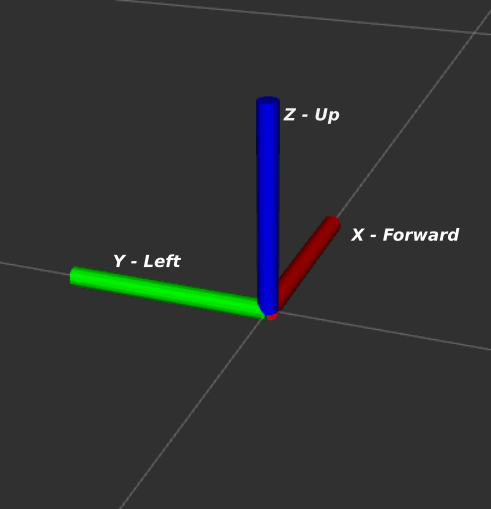

Coordinate Frames

This section describes the coordinate frames that are involved in the

VisualGlobalLocalizationNode. The frames discussed below are oriented as follows:

base_frame: The base frame of the robot or camera rig. It is assumed that all cameras are rigidly mounted to this frame, such that the transforms between thebase_frameand thecamera_optical_framescan assumed to be static. The estimated odometry and SLAM pose will represent the pose of this frame.map_frame: The frame corresponding to the origin of the map that cuVGL creates or localizes in. The SLAM pose reported by cuVGL corresponds with the transformmap_frame->base_frame.camera_optical_frames: The frames corresponding to the optical center of every camera. The frame is expected to lie at the center of the camera lens and oriented with the z-axis pointing in the view direction and the y-axis pointing to the floor.

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Troubleshooting Suggestions

If localization frequently fails to return a pose, consider relaxing the rejection criteria, allowing it to return less confident results, by setting:

vgl_localization_precision_level:=0.To print more debug messages from the localization node, use launch parameters:

vgl_verbose_logging:=True vgl_init_glog:=True vgl_glog_v:=1.

API

Usage

ros2 launch isaac_ros_visual_global_localization isaac_ros_visual_global_localization_node.launch.py

ROS Parameters

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

Functional Parameters |

|||

|

|

|

Number of cameras used. Set to 2 for single stereo camera. |

|

|

|

Camera ids for stereo camera pairs, in the form of “stereo0_left_camera_id,stereo0_right_camera_id,stereo1_left_camera_id…”. |

|

|

|

Maximum duration between image timestamps to still consider them synced. |

|

|

|

If enabled, will do rectification for the incoming images. |

|

|

|

Whether or not to continue triggering localization after a localization pose is successfully computed. |

|

|

|

If use_initial_guess is true, the localizer will do cuSFM-based localization. If it is false, the localizer will do global localization. |

|

|

|

Required, the directory of cuVGL map files. |

|

|

|

Required, the directory of config files, which should include |

|

|

|

The directory of model file. This value can be set in config file. |

|

|

|

The topic name of the input image. |

|

|

|

The topic name of the input camera info. |

|

|

|

The localization precision level: 0 for lowest precision, returning more poses; 1 for medium precision; 2 for highest precision, returning fewer poses. |

Frame Parameters |

|||

|

|

|

The frame name associated with the map origin. |

|

|

|

The frame name associated with the base of the robot or camera rig. |

|

|

|

The frame names associated with the cameras’ optical frames. Using ROS optical frame conventions, i.e. z-axis front, y-axis down. If not specified will use the frames from the camera info messages. |

Message Parameters |

|||

|

|

|

Buffer size used by the image synchronizer. |

|

|

|

QoS profile for the image subscribers. |

Output Parameters |

|||

|

|

|

Enable |

|

|

|

Invert the |

Debug/Visualization Parameters |

|||

|

|

|

If it is set, will generate debug images to this folder. |

|

|

|

Optional, the directory that saves the raw images of the map frames. |

|

|

|

Optional, if to publish the rectified images. This flag is used only when |

|

|

|

Optional, if to print more verbose ROS logs. |

|

|

|

Optional, if to initialize glog. Note that glog can be initialized only once per process, otherwise it raises error. |

|

|

|

Optional, the verbose level for glog. |

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

Image from the i-th camera in grayscale (i is in range [0, |

|

|

Camera info from the i-th camera (i is in range [0, |

|

|

Pose used as tick for localizing in an existing map. Every received message will trigger a localization. |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

Current pose with covariance of the |

|

|

Image for debugging. |

|

|

Rectified images from visual localization node, published only when |

|

|

Camera info for the rectified images, published only when |

|

|

Diagnostic message. Similar to |

ROS Services Advertised

ROS Service |

Interface |

Description |

|---|---|---|

|

A service to trigger localization the node. |