isaac_ros_visual_slam#

Source code available on GitHub.

Quickstart#

Set Up Development Environment#

Set up your development environment by following the instructions in getting started.

(Optional) Install dependencies for any sensors you want to use by following the sensor-specific guides.

Note

We strongly recommend installing all sensor dependencies before starting any quickstarts. Some sensor dependencies require restarting the development environment during installation, which will interrupt the quickstart process.

Download Quickstart Assets#

Download quickstart data from NGC:

Make sure required libraries are installed.

sudo apt-get install -y curl jq tar

Then, run these commands to download the asset from NGC:

NGC_ORG="nvidia" NGC_TEAM="isaac" PACKAGE_NAME="isaac_ros_visual_slam" NGC_RESOURCE="isaac_ros_visual_slam_assets" NGC_FILENAME="quickstart.tar.gz" MAJOR_VERSION=4 MINOR_VERSION=0 VERSION_REQ_URL="https://catalog.ngc.nvidia.com/api/resources/versions?orgName=$NGC_ORG&teamName=$NGC_TEAM&name=$NGC_RESOURCE&isPublic=true&pageNumber=0&pageSize=100&sortOrder=CREATED_DATE_DESC" AVAILABLE_VERSIONS=$(curl -s \ -H "Accept: application/json" "$VERSION_REQ_URL") LATEST_VERSION_ID=$(echo $AVAILABLE_VERSIONS | jq -r " .recipeVersions[] | .versionId as \$v | \$v | select(test(\"^\\\\d+\\\\.\\\\d+\\\\.\\\\d+$\")) | split(\".\") | {major: .[0]|tonumber, minor: .[1]|tonumber, patch: .[2]|tonumber} | select(.major == $MAJOR_VERSION and .minor <= $MINOR_VERSION) | \$v " | sort -V | tail -n 1 ) if [ -z "$LATEST_VERSION_ID" ]; then echo "No corresponding version found for Isaac ROS $MAJOR_VERSION.$MINOR_VERSION" echo "Found versions:" echo $AVAILABLE_VERSIONS | jq -r '.recipeVersions[].versionId' else mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets && \ FILE_REQ_URL="https://api.ngc.nvidia.com/v2/resources/$NGC_ORG/$NGC_TEAM/$NGC_RESOURCE/\ versions/$LATEST_VERSION_ID/files/$NGC_FILENAME" && \ curl -LO --request GET "${FILE_REQ_URL}" && \ tar -xf ${NGC_FILENAME} -C ${ISAAC_ROS_WS}/isaac_ros_assets && \ rm ${NGC_FILENAME} fi

Build isaac_ros_visual_slam#

Activate the Isaac ROS environment:

isaac-ros activateInstall the prebuilt Debian package:

sudo apt-get update

sudo apt-get install -y ros-jazzy-isaac-ros-visual-slam

Install Git LFS:

sudo apt-get install -y git-lfs && git lfs install

Clone this repository under

${ISAAC_ROS_WS}/src:cd ${ISAAC_ROS_WS}/src && \ git clone -b release-4.0 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_visual_slam.git isaac_ros_visual_slam

Activate the Isaac ROS environment:

isaac-ros activateUse

rosdepto install the package’s dependencies:sudo apt-get update

rosdep update && rosdep install --from-paths ${ISAAC_ROS_WS}/src/isaac_ros_visual_slam/isaac_ros_visual_slam --ignore-src -y

Build the package from source:

cd ${ISAAC_ROS_WS}/ && \ colcon build --symlink-install --packages-up-to isaac_ros_visual_slam --base-paths ${ISAAC_ROS_WS}/src/isaac_ros_visual_slam/isaac_ros_visual_slam

Source the ROS workspace:

Note

Make sure to repeat this step in every terminal created inside the Isaac ROS environment.

Because this package was built from source, the enclosing workspace must be sourced for ROS to be able to find the package’s contents.

source install/setup.bash

Run Launch File#

Continuing inside the Isaac ROS environment, install the following dependencies:

sudo apt-get update

sudo apt-get install -y ros-jazzy-isaac-ros-examples

Run the following launch file to spin up a demo of this package using the quickstart rosbag:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py launch_fragments:=visual_slam \ interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_visual_slam/quickstart_interface_specs.json \ rectified_images:=false

Open a second terminal inside the Isaac ROS environment:

isaac-ros activateRun the rosbag file to simulate an image stream:

ros2 bag play ${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_visual_slam/quickstart_bag --remap \ /front_stereo_camera/left/image_raw:=/left/image_rect \ /front_stereo_camera/left/camera_info:=/left/camera_info_rect \ /front_stereo_camera/right/image_raw:=/right/image_rect \ /front_stereo_camera/right/camera_info:=/right/camera_info_rect \ /back_stereo_camera/left/image_raw:=/rear_left/image_rect \ /back_stereo_camera/left/camera_info:=/rear_left/camera_info_rect \ /back_stereo_camera/right/image_raw:=/rear_right/image_rect \ /back_stereo_camera/right/camera_info:=/rear_right/camera_info_rect

Ensure that you have already set up your RealSense camera using the RealSense setup tutorial. If you have not, please set up the sensor and then restart this quickstart from the beginning.

Continuing inside the Isaac ROS environment, install the following dependencies:

sudo apt-get update

sudo apt-get install -y ros-jazzy-isaac-ros-examples ros-jazzy-isaac-ros-realsense

Run the following launch file to spin up a demo of this package using a RealSense camera:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py launch_fragments:=realsense_stereo_rect,visual_slam \ interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_visual_slam/quickstart_interface_specs.json \ base_frame:=camera_link camera_optical_frames:="['camera_infra1_optical_frame', 'camera_infra2_optical_frame']"

Ensure that you have already set up your ZED camera using ZED setup tutorial.

Continuing inside the Isaac ROS environment, install dependencies:

sudo apt-get update

sudo apt-get install -y ros-jazzy-isaac-ros-examples ros-jazzy-isaac-ros-stereo-image-proc ros-jazzy-isaac-ros-zed

Run the following launch file to spin up a demo of this package using a ZED Camera:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \ launch_fragments:=zed_stereo_rect,visual_slam pub_frame_rate:=30.0 \ base_frame:=zed2_camera_center camera_optical_frames:="['zed2_left_camera_optical_frame', 'zed2_right_camera_optical_frame']" \ interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_visual_slam/zed2_quickstart_interface_specs.json

Note

If you are using the ZED X series, replace all zed2 in the base_frame, camera_optical_frames and interface_specs_file launch arguments with zedx.

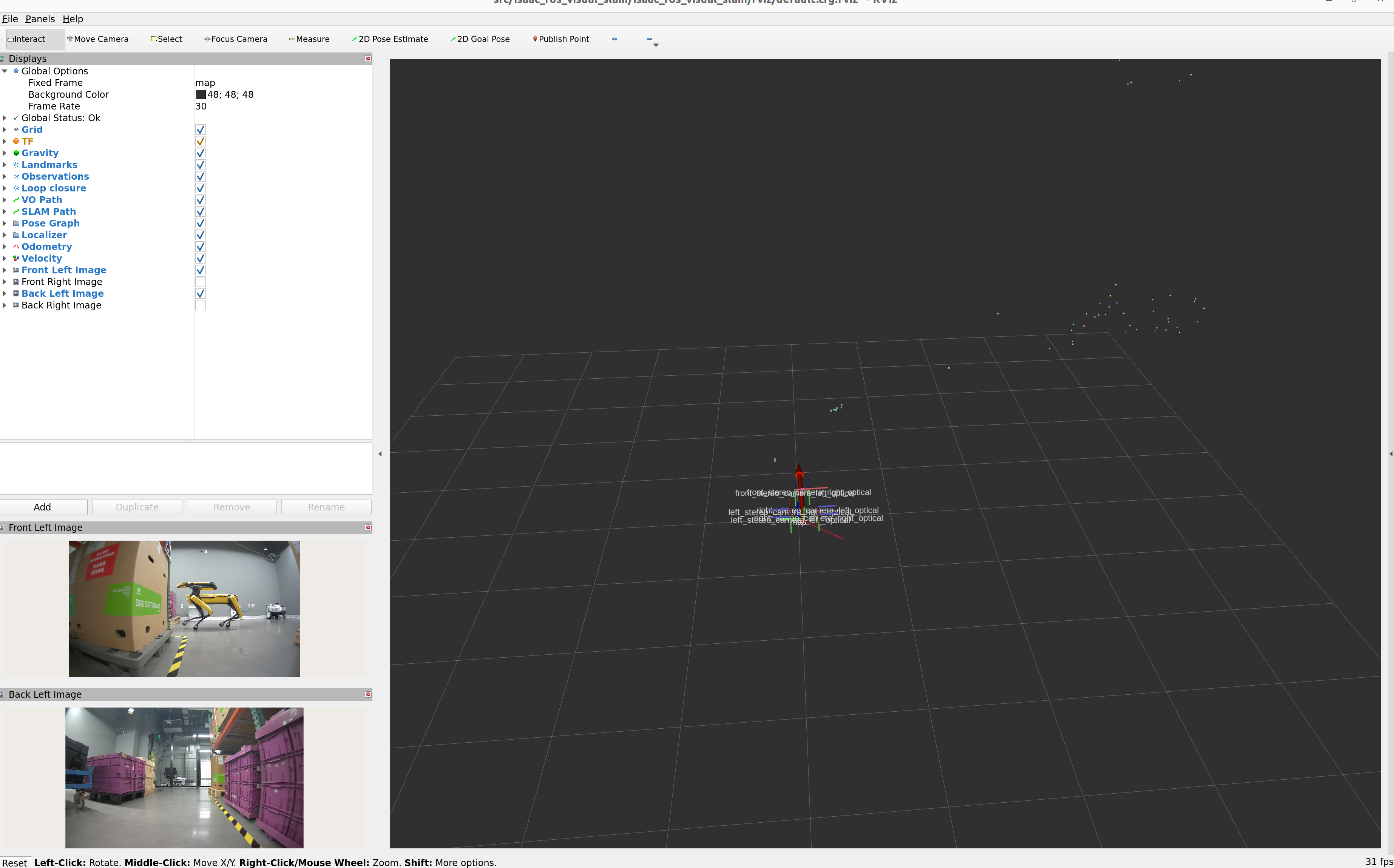

Visualize Results#

Try More Examples#

To continue your exploration, check out the following suggested examples:

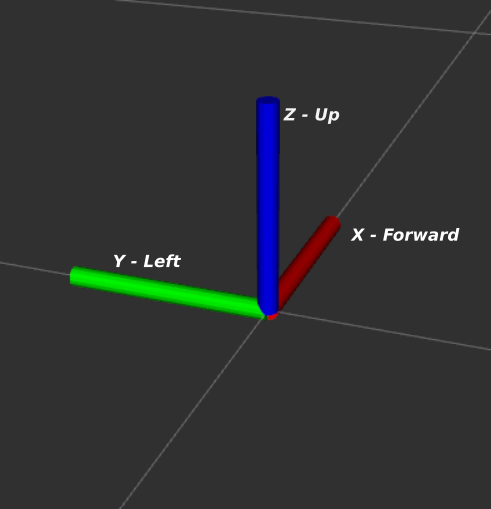

Coordinate Frames#

This section describes the coordinate frames that are involved in the

VisualSlamNode. The frames discussed below are oriented as follows:

base_frame: The base frame of the robot or camera rig. It is assumed that all cameras are rigidly mounted to this frame, such that the transforms between thebase_frameand thecamera_optical_framescan assumed to be static. The estimated odometry and SLAM pose will represent the pose of this frame.map_frame: The frame corresponding to the origin of the map that cuVSLAM creates or localizes in. The SLAM pose reported by cuVSLAM corresponds with the transformmap_frame->base_frame.odom_frame: The frame corresponding to the origin of the odometry that cuVSLAM estimates. The odometry pose reported by cuVSLAM corresponds with the transformodom_frame->base_frame.camera_optical_frames: The frames corresponding to the optical center of every camera. The frame is expected to lie at the center of the camera lens and oriented with the z-axis pointing in the view direction and the y-axis pointing to the floor.

Troubleshooting#

Isaac ROS Troubleshooting#

For solutions to problems with Isaac ROS, please check here.

Troubleshooting Suggestions#

If the target frames in RViz are being updated at a lower rate than the input image rate:

Check if the input image frame rate is equal to the value set in the launch file by opening a new terminal and running the command

ros2 topic hz --window 100 <image_topic_name>

If RViz is not showing the poses, check the Fixed Frame value.

If you are seeing

Visual tracking is lost.messages frequently, it could be caused by the following issues:Fast motion causing the motion blur in the frames

Low-lighting conditions.

The wrong

camera_infois being published.

For better performance:

Increase the capture framerate from the camera to yield a better tracking result.

If input images are noisy, you can use the

denoise_input_imagesflag in the node.

For RealSense cameras check that:

You are using the firmware and

realsense_rosversions recommended in RealSense setup tutorial.You are using the parameters set in the RealSense tutorial launch file

If the pose estimate frames in RViz is drifting from actual real-world poses and you see white dots on nearby objects(refer to the screenshot below), this means that the emitter of the RealSense camera is on and it needs to be turned off.

Left and right images being published are not compressed or encoded. Isaac ROS Visual Slam expects raw images.

For global localization:

Isaac ROS Visual SLAM does not yet support global localization and needs an external system to provide a pose hint in order to localize in an existing map.

We recommend to use Isaac ROS Visual Global Localization for global localization.

API#

Usage#

ros2 launch isaac_ros_visual_slam isaac_ros_visual_slam.launch.py

ROS Parameters#

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

Functional Parameters |

|||

|

|

|

Number of cameras used. Set to 2 for a single stereo camera. Maximum value is 32. |

|

|

|

Minimum number of images required to track the pose. If less than |

|

|

|

Maximum duration between image timestamps to still consider them synced. |

|

|

|

If enabled, input images are denoised. It can be enabled when images are noisy because of low-light conditions. |

|

|

|

Flag to mark if the incoming images are rectified or raw. |

|

|

|

If enabled, odometry poses will be modified such that the camera moves on a horizontal plane. |

|

|

|

If enabled, SLAM poses will be modified such that the camera moves on a horizontal plane. |

|

|

|

If enabled, SLAM mode is on. If disabled, only odometry is on. |

|

|

|

If enabled, IMU data is used. |

|

|

|

White noise parameter for gyroscope based on IMU Noise Model. |

|

|

|

Random walk parameter for gyroscope based on IMU Noise Model. |

|

|

|

White noise parameter for accelerometer based on IMU Noise Model. |

|

|

|

Random walk parameter for accelerometer based on IMU Noise Model. |

|

|

|

IMU frequency used for generating noise model parameters. |

|

|

|

Acceptable time delta between consecutive synced image messages. If the messages exceed the threshold, it might be an indication of message drop from the source. |

|

|

|

Acceptable time delta between consecutive IMU messages. If the messages exceed the threshold, it might be an indication of message drop from the source. |

|

|

|

The number of pixels of the upper border of the image will be masked at the stage of selecting features for visual tracking. |

|

|

|

The number of pixels of the lower border of the image will be masked at the stage of selecting features for tracking. |

|

|

|

The number of pixels of the left border of the image from the left camera of the stereo pair (the right border for the right camera of a stereo pair) will be masked at the stage of selecting features for tracking. |

|

|

|

The number of pixels of the right border of the image from the left camera of the stereo pair (the left border for the right camera of a stereo pair) will be masked at the stage of selecting features for tracking. |

|

|

|

If set the map will be stored in the set path after shutting down the node. Requires |

|

|

|

If set the map will be loaded from the set path on starting up the node. Requires |

|

|

|

If set will try to localize in the |

|

|

|

Radius of the area on the horizontal plane used for localization in meters. |

|

|

|

Radius along the vertical axis used for localization in meters. |

|

|

|

Step size along each axis on the horizontal plane for localization in meters. |

|

|

|

Step size along vertical axis for localization in meters. |

|

|

|

Step size around vertical axis for localization in radians. Must be less then 2 Pi. |

|

|

|

Maximum number of poses that is stored in the SLAM map. Default is 300. Adding more poses will invoke reduction of the map until it`s size matches this constant. |

|

|

|

If set will request a second hint when hinted localization fails with the previous hint. |

Frame Parameters |

|||

|

|

|

The frame name associated with the map origin. |

|

|

|

The frame name associated with the odometry origin. |

|

|

|

The frame name associated with the base of the robot or camera rig. |

|

|

|

The frame names associated with the cameras’ optical frames. Using ROS optical frame conventions, i.e. z-axis front, y-axis down. If not specified will use the frames from the camera info messages. |

|

|

|

The frame name associated with the IMU. |

Message Parameters |

|||

|

|

|

Buffer size used by the image synchronizer. |

|

|

|

Buffer size used by the IMU sequencer. |

|

|

|

QoS profile for the image subscribers. |

|

|

|

QoS profile for the IMU subscriber. |

Output Parameters |

|||

|

|

|

Override timestamp received from the left image with the timestamp from |

|

|

|

Enable |

|

|

|

Enable |

|

|

|

Invert the |

|

|

|

Invert the |

Debug/Visualization Parameters |

|||

|

|

|

Main flag to enable or disable visualization. |

|

|

|

If enabled, 2D feature pointcloud will be available for visualization. |

|

|

|

If enabled, landmark pointcloud will be available for visualization. |

|

|

|

The maximum size of the buffer for pose trail visualization. |

|

|

|

Verbosity for cuVSLAM, 0 is silent. |

|

|

|

If enabled, a debug dump (images, IMU messages, timestamps, and camera info) is saved to the disk at the path indicated by |

|

|

|

The path to the directory to store the debug dump data. |

ROS Topics Subscribed#

ROS Topic |

Interface |

Description |

|---|---|---|

|

Image from the i-th camera in grayscale (i is in range [0, |

|

|

Camera info from the i-th camera (i is in range [0, |

|

|

Sensor data from the IMU (is only present if |

|

|

Pose used as pose hint for localizing in an existing map. Every received message will trigger a localization. |

|

|

A message will be sent on this topic when the node requires a new pose hint for localization. |

ROS Topics Published#

ROS Topic |

Interface |

Description |

|---|---|---|

|

Current odometry of the |

|

|

Current pose with covariance of the |

|

|

Current pose of the |

|

|

Trail of poses generated by SLAM. |

|

|

Trail of poses generated by pure Visual Odometry. |

|

|

Status message for diagnostics. |

|

|

Diagnostic message. Similar to |

|

Visualization Topics |

||

|

Visualization containing all current observations. |

|

|

Visualization containing the currently estimated gravity vector. |

|

|

Visualization containing the currently estimated velocity. |

|

|

Visualization containing the currently estimated odometry from SLAM poses. |

|

|

Visualization containing the 3D SLAM landmarks from the map. |

|

|

Visualization containing the 3D SLAM landmarks currently used for loop closure. |

|

|

Visualization containing the pose graph nodes. |

|

|

Visualization containing the pose graph edges. |

|

|

Visualization containing the pose graph edges2. |

|

|

Visualization containing the poses used for localization. |

|

|

Visualization containing the map used for localization. |

|

|

Visualization containing observations used for the localization. |

|

|

Visualization containing landmarks used for loop closure in the localization. |

ROS Services Advertised#

ROS Service |

Interface |

Description |

|---|---|---|

|

A service to reset the node. |

|

|

A service to get the series of poses for the path traversed. |

|

|

A service to set the estimated SLAM pose of the |

|

|

A service to store the current map to disk. |

|

|

A service to load a map from disk. |

|

|

A service to localize in an existing map with a pose hint. |

Note

The last 4 services only work in SLAM mode i.e. set enable_localization_n_mapping = true.