Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1

continues to be available on the release-2.1 branches of the Isaac ROS

GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

isaac_ros_visual_slam

Source code on GitHub.

Quickstart

Set up your development environment by following the instructions here.

Note:

${ISAAC_ROS_WS}is defined to point to either/ssd/workspaces/isaac_ros-dev/or~/workspaces/isaac_ros-dev/.Clone

isaac_ros_commonand this repository under${ISAAC_ROS_WS}/src.cd ${ISAAC_ROS_WS}/src

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_visual_slam.git

[Terminal 1] Launch the Docker container

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh ${ISAAC_ROS_WS}

[Terminal 1] Install this package’s dependencies.

sudo apt-get install -y ros-humble-isaac-ros-visual-slam

Run the following launch files in the current terminal (Terminal 1):

ros2 launch isaac_ros_visual_slam isaac_ros_visual_slam.launch.py

[Terminal 2] Attach another terminal to the running container for RViz2

Attach another terminal to the running container for RViz2.

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh ${ISAAC_ROS_WS}

From this second terminal, run RViz2 to display the output:

rviz2 -d src/isaac_ros_visual_slam/isaac_ros_visual_slam/rviz/default.cfg.rviz

If you are SSHing in from a remote machine, the RViz2 window should be forwarded to your remote machine.

[Terminal 3] Attach the 3rd terminal to start the rosbag

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh ${ISAAC_ROS_WS}

Run the rosbag file to start the demo.

ros2 bag play src/isaac_ros_visual_slam/isaac_ros_visual_slam/test/test_cases/rosbags/small_pol_test/

Result:

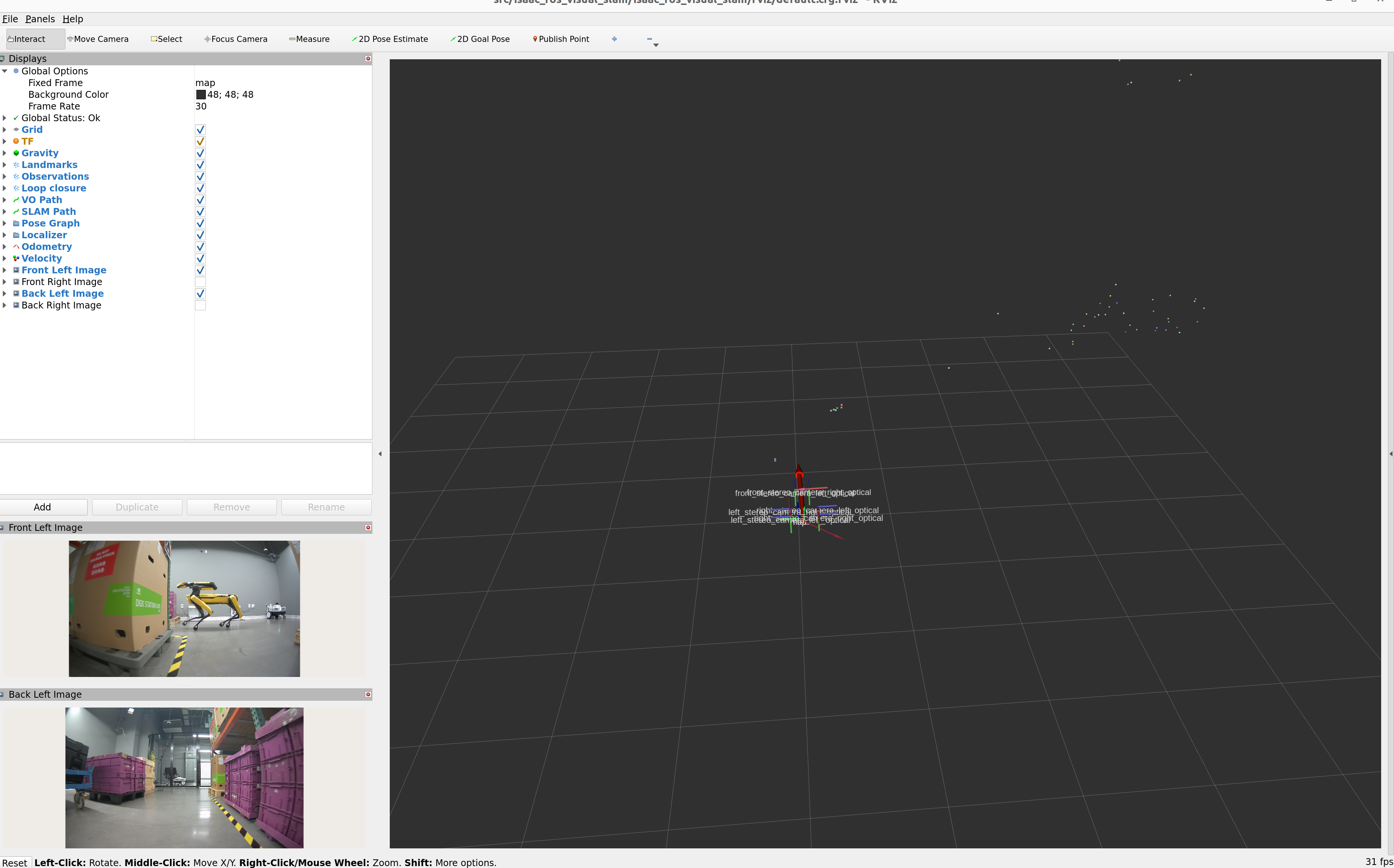

RViz should start displaying the point clouds and poses like below:

Try More Examples

To continue your exploration, check out the following suggested examples:

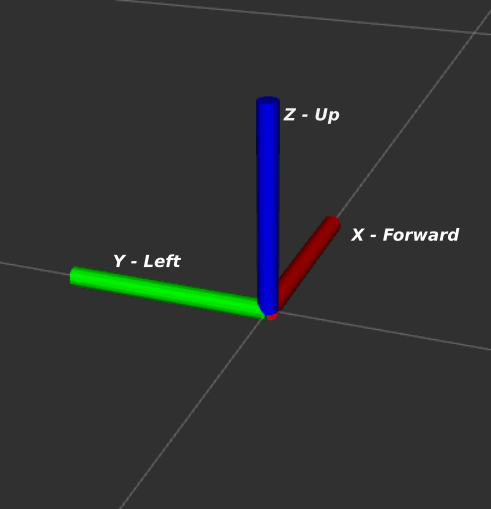

Coordinate Frames

This section describes the coordinate frames that are involved in the

VisualSlamNode. The frames discussed below are oriented as follows:

input_base_frame: The name of the frame used to calculate transformation between base link and left camera. The default value is empty (‘’), which means the value ofbase_frame_will be used. Ifinput_base_frame_andbase_frame_are both empty, the left camera is assumed to be in the robot’s center.input_left_camera_frame: The frame associated with left eye of the stereo camera. Note that this is not the same as the optical frame. The default value is empty (‘’), which means the left camera is in the robot’s center andleft_pose_rightwill be calculated from the CameraInfo message.input_right_camera_frame: The frame associated with the right eye of the stereo camera. Note that this is not the same as the optical frame. The default value is empty (‘’), which means left and right cameras have identity rotation and are horizontally aligned, soleft_pose_rightwill be calculated from CameraInfo.input_imu_frame: The frame associated with the IMU sensor (if available). It is used to calculateleft_pose_imu.

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Troubleshooting Suggestions

If the target frames in RViz are being updated at a lower rate than the input image rate:

Check if the input image frame rate is equal to the value set in the launch file by opening a new terminal and running the command

ros2 topic hz --window 100 <image_topic_name>

If RViz is not showing the poses, check the Fixed Frame value.

If you are seeing

Visual tracking is lost.messages frequently, it could be caused by the following issues:Fast motion causing the motion blur in the frames

Low-lighting conditions.

The wrong

camerainfois being published.

For better performance:

Increase the capture framerate from the camera to yield a better tracking result.

If input images are noisy, you can use the

denoise_input_imagesflag in the node.

For RealSense cameras-

The firmware of the RealSense camera is 5.14.0 or newer. Check the RealSense firmware-update-tool page for details on how to check the version and update the camera firmware.

You are using the 4.51.1 tagged release for the realsense-ros package.

You are using the parameters set in the RealSense tutorial launch file

If the pose estimate frames in RViz is drifting from actual real-world poses and you see white dots on nearby objects(refer to the screenshot below), this means that the emitter of the RealSense camera is on and it needs to be turned off.

Left and right images being published are not compressed or encoded. Isaac ROS Visual Slam expects raw images.

API

Usage

ros2 launch isaac_ros_visual_slam isaac_ros_visual_slam.launch.py

ROS Parameters

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

|

|

|

If enabled, input images are denoised. It can be enabled when images are noisy because of low-light conditions. |

|

|

|

Flag to mark if the incoming images are rectified or raw. |

|

|

|

If enabled, IMU data is used. |

|

|

|

White noise parameter for gyroscope based on IMU Noise Model. |

|

|

|

Random walk parameter for gyroscope based on IMU Noise Model. |

|

|

|

White noise parameter for accelerometer based on IMU Noise Model. |

|

|

|

Random walk parameter for accelerometer based on IMU Noise Model. |

|

|

|

IMU frequency used for generating noise model parameters. |

|

|

|

Acceptable time delta between previous and current pair of image frames. If a pair of image frames exceeds the threshold, it might be an indication of frame drop from the source. |

|

|

|

If enabled, prints logs from cuVSLAM library. |

|

|

|

If enabled, slam poses will be modified such that the camera moves on a horizontal plane. |

|

|

|

If enabled, a debug dump (image frames, timestamps, and camera info) is saved on to the disk at the path indicated by |

|

|

|

The path to the directory to store the debug dump data. |

|

|

|

Name of the frame ( |

|

|

|

The name of the left camera frame. the default value is empty, which means the left camera is in the robot’s center and |

|

|

|

The name of the right camera frame. The default value is empty, which means left and right cameras have identity rotation and are horizontally aligned. |

|

|

|

Defines the name of the IMU frame used to calculate |

|

|

|

The frame name associated with the map origin. |

|

|

|

The frame name associated with the odometry origin. |

|

|

|

The frame name associated with the robot. |

|

|

|

If enabled, 2D feature pointcloud will be available for visualization. |

|

|

|

If enabled, landmark pointcloud will be available for visualization. |

|

|

|

Main flag to enable or disable visualization. |

|

|

|

If enabled, SLAM mode is on. If disabled, only Visual Odometry is on. |

|

|

|

The maximum size of the buffer for pose trail visualization. |

|

|

|

Enable |

|

|

|

Enable |

|

|

|

Invert the |

|

|

|

Invert the |

|

|

|

String name for the |

|

|

|

String name for the |

|

|

|

String name for the |

|

|

|

Override timestamp received from the left image with the timestamp from |

|

|

|

Image + Camera Info Synchronizer message filter queue size. |

|

|

|

QoS profile for the left and right image subscribers. |

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

The image from the left eye of the stereo camera in grayscale. |

|

|

CameraInfo from the left eye of the stereo camera. |

|

|

The image from the right eye of the stereo camera in grayscale. |

|

|

CameraInfo from the right eye of the stereo camera. |

|

|

Sensor data from the IMU(optional). |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

Odometry messages for the |

|

|

Current pose with covariance of the |

|

|

Current pose of the |

|

|

Trail of poses generated by SLAM. |

|

|

Trail of poses generated by pure Visual Odometry. |

|

|

Status message for diagnostics. |

ROS Services Advertised

ROS Service |

Interface |

Description |

|---|---|---|

|

A service to reset the node. |

|

|

A service to get the series of poses for the path traversed. |

|

|

A service to set the pose of the odometry frame. |

ROS Actions Advertised

ROS Action |

Interface |

Description |

|---|---|---|

|

An action to save the landmarks and pose graph into a map and onto the disk. |

|

|

An action to load the map from the disk and localize within it given a prior pose. |