Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1 continues to be available on the release-2.1 branches of the Isaac ROS GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

isaac_ros_dope

Source code on GitHub.

Quickstart

Warning

The model conversion step (converting the PyTorch file into an ONNX file) must be performed on x86_64. The resulting model should then be copied over to the Jetson. Also note that the process of model preparation differs significantly from that of the other repositories.

Set up your development environment by following the instructions here.

Clone isaac_ros_common and this repository under ${ISAAC_ROS_WS}/src:

cd ${ISAAC_ROS_WS}/src

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_pose_estimation.git

Pull down a ROS Bag of sample data:

cd ${ISAAC_ROS_WS}/src/isaac_ros_pose_estimation && \
  git lfs pull -X "" -I "resources/rosbags/"
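If git lfs pull is skipped or fails, the files under resources/rosbags/ remain small Git LFS pointer text files instead of real bag data, and playing the bag later will fail. The following is a minimal sketch that lists such leftover pointer files, assuming standard Git LFS pointers, which begin with the line `version https://git-lfs.github.com/spec/v1`. The helpers is_lfs_pointer and find_lfs_pointers are hypothetical and not part of Isaac ROS.

```python
import os
from pathlib import Path
from typing import List

# Every Git LFS pointer file starts with this line (Git LFS pointer spec v1).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path: Path) -> bool:
    """Return True if `path` is a Git LFS pointer file rather than real content."""
    with path.open("rb") as f:
        # Reading only the prefix length is enough to recognize a pointer.
        return f.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


def find_lfs_pointers(root: Path) -> List[Path]:
    """Hypothetical helper: list files under `root` that git lfs pull has not replaced."""
    if not root.is_dir():
        return []
    return sorted(p for p in root.rglob("*") if p.is_file() and is_lfs_pointer(p))


if __name__ == "__main__":
    ws = Path(os.environ.get("ISAAC_ROS_WS", "."))
    bags = ws / "src" / "isaac_ros_pose_estimation" / "resources" / "rosbags"
    if not bags.is_dir():
        print(f"{bags} not found; clone isaac_ros_pose_estimation first")
    else:
        pointers = find_lfs_pointers(bags)
        for p in pointers:
            print(f"not pulled yet: {p}")
        print(f"{len(pointers)} Git LFS pointer file(s) under {bags}")
```

A non-zero count suggests re-running the git lfs pull command above.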

Launch the Docker container using the run_dev.sh script:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Install this package’s dependencies.

sudo apt-get install -y ros-humble-isaac-ros-dope ros-humble-isaac-ros-tensor-rt ros-humble-isaac-ros-dnn-image-encoder

Make a directory to place models (inside the Docker container):

mkdir -p /tmp/models/

Select a DOPE model from the DOPE model collection available on the official DOPE GitHub repository here. The model is assumed to be downloaded to ~/Downloads outside the Docker container.

This example uses Ketchup.pth, which should be copied into /tmp/models inside the Docker container:

Note

This should be run outside the Docker container.

On x86_64:

cd ~/Downloads && \
  docker cp Ketchup.pth isaac_ros_dev-x86_64-container:/tmp/models

Convert the PyTorch file into an ONNX file:

Warning

This step must be performed on x86_64. If you are using a Jetson, the resulting model is assumed to be copied to the same output location (/tmp/models/Ketchup.onnx) on the Jetson.

python3 /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_dope/scripts/dope_converter.py --format onnx --input /tmp/models/Ketchup.pth

If you plan to use a Jetson, copy the generated .onnx model to the Jetson, and then copy it into the aarch64 Docker container. We assume that you have already transferred the model to the ~/Downloads directory on the Jetson.

Enter the Docker container on the Jetson:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Make a directory called /tmp/models inside the Docker container on the Jetson:

mkdir -p /tmp/models

Outside the container, copy the generated .onnx model into it:

cd ~/Downloads && \
  docker cp Ketchup.onnx isaac_ros_dev-aarch64-container:/tmp/models
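The two docker cp commands in this guide differ only in the container name, which encodes the host architecture (isaac_ros_dev-x86_64-container on x86_64, isaac_ros_dev-aarch64-container on the Jetson). A small sketch that picks the name from platform.machine() and builds the matching command; it assumes the container names used in this guide and that run_dev.sh has already started the container. The function names are hypothetical.

```python
import platform
import shlex
from pathlib import Path
from typing import List, Optional

# Dev-container names used in this guide, keyed by platform.machine() value.
CONTAINER_BY_ARCH = {
    "x86_64": "isaac_ros_dev-x86_64-container",
    "aarch64": "isaac_ros_dev-aarch64-container",
}


def container_name(arch: Optional[str] = None) -> str:
    """Return the dev container name for `arch` (defaults to the current machine)."""
    arch = arch or platform.machine()
    if arch not in CONTAINER_BY_ARCH:
        raise ValueError(f"no Isaac ROS dev container known for architecture {arch!r}")
    return CONTAINER_BY_ARCH[arch]


def docker_cp_command(model: Path, arch: Optional[str] = None,
                      dest: str = "/tmp/models") -> List[str]:
    """Build the argv that copies `model` into the container's `dest` directory."""
    return ["docker", "cp", str(model), f"{container_name(arch)}:{dest}"]


if __name__ == "__main__":
    try:
        # Print rather than execute; pass the list to subprocess.run(..., check=True) to copy.
        print(shlex.join(docker_cp_command(Path("Ketchup.onnx"))))
    except ValueError as err:
        print(err)
```

On x86_64 the guide copies Ketchup.pth, and on the Jetson it copies Ketchup.onnx, so pass the matching file for the machine you are on.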

Run the following launch files to spin up a demo of this package:

Launch isaac_ros_dope:

ros2 launch isaac_ros_dope isaac_ros_dope_tensor_rt.launch.py model_file_path:=/tmp/models/Ketchup.onnx engine_file_path:=/tmp/models/Ketchup.plan
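The launch command takes two paths that share the model name: the ONNX model and the TensorRT engine plan. The steps above never create Ketchup.plan, so this sketch assumes the TensorRT node generates the engine at that path when it is missing and only checks that the ONNX file exists, which catches a skipped conversion or copy step before launching. The helper dope_launch_command is hypothetical and uses only the launch arguments shown in this guide.

```python
import shlex
from pathlib import Path
from typing import List


def dope_launch_command(model_dir: Path, model_name: str) -> List[str]:
    """Build the ros2 launch argv from this guide for <model_dir>/<model_name>.onnx.

    Raises FileNotFoundError if the ONNX model is missing (e.g. the docker cp step was skipped).
    """
    onnx_path = model_dir / f"{model_name}.onnx"
    # Assumption: the engine plan does not need to exist yet; the guide never creates it.
    plan_path = model_dir / f"{model_name}.plan"
    if not onnx_path.is_file():
        raise FileNotFoundError(f"{onnx_path} not found; convert and copy the model first")
    return [
        "ros2", "launch", "isaac_ros_dope", "isaac_ros_dope_tensor_rt.launch.py",
        f"model_file_path:={onnx_path}",
        f"engine_file_path:={plan_path}",
    ]


if __name__ == "__main__":
    try:
        print(shlex.join(dope_launch_command(Path("/tmp/models"), "Ketchup")))
    except FileNotFoundError as err:
        print(err)
```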

Then open another terminal and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Then, play the ROS bag:

ros2 bag play -l src/isaac_ros_pose_estimation/resources/rosbags/dope_rosbag/

You should be able to get the poses of the objects in the images through ros2 topic echo. In a third terminal, enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

ros2 topic echo /poses
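Each entry in the echoed PoseArray is a geometry_msgs/Pose: a position (x, y, z) and an orientation quaternion (x, y, z, w), expressed in the frame named by the message's header.frame_id. To interpret an echoed pose offline, the following sketch converts the quaternion into a 3x3 rotation matrix and computes the object's straight-line distance from the frame origin. The sample values in the main block are made up, not taken from the bag.

```python
import math
from typing import List, Sequence

Matrix3 = List[List[float]]


def quaternion_to_matrix(x: float, y: float, z: float, w: float) -> Matrix3:
    """Convert a geometry_msgs/Quaternion (x, y, z, w) to a 3x3 rotation matrix."""
    n = math.sqrt(x * x + y * y + z * z + w * w)
    if n == 0.0:
        raise ValueError("zero-length quaternion")
    # Normalize first so slightly non-unit inputs still give a proper rotation.
    x, y, z, w = x / n, y / n, z / n, w / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def distance_from_origin(position: Sequence[float]) -> float:
    """Straight-line distance of a pose position from the origin of its frame."""
    return math.sqrt(sum(c * c for c in position))


if __name__ == "__main__":
    # Made-up sample values in the shape printed by `ros2 topic echo /poses`.
    position = (0.1, -0.05, 0.6)
    orientation = (0.0, 0.0, 0.7071068, 0.7071068)  # x, y, z, w
    for row in quaternion_to_matrix(*orientation):
        print(" ".join(f"{v:+.3f}" for v in row))
    print(f"distance: {distance_from_origin(position):.3f}")
```

The columns of the matrix are the object's x, y, and z axes expressed in the header frame.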

Note

We are echoing /poses because we remapped the original topic /dope/pose_array to /poses in the launch file.

Now visualize the pose array in RViz2:

rviz2

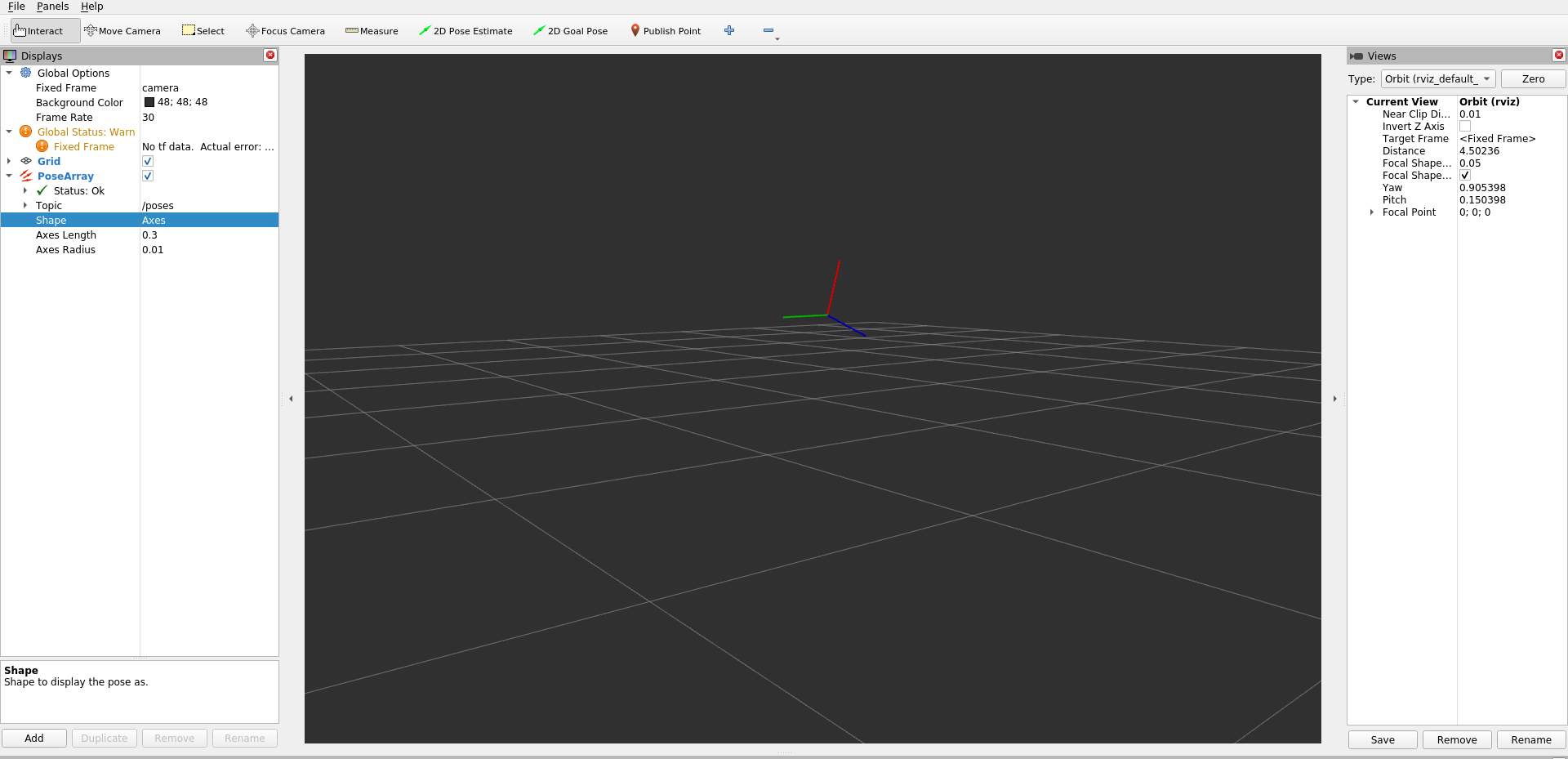

Then click the Add button, select By topic, and choose PoseArray under /poses. Finally, change the display to show axes by setting Shape to Axes, as shown in the screenshot. Make sure to set the Fixed Frame to tf_camera.
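With Shape set to Axes, each pose is drawn as three short segments starting at the pose position and pointing along the pose's local x, y, and z axes (red, green, and blue by ROS convention). The sketch below computes those segment endpoints by rotating the unit axes with the pose quaternion, which can help when checking an echoed pose against what RViz shows. The length parameter is an arbitrary illustration value; RViz draws the axes at its own configured length.

```python
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Sequence[float], v: Sequence[float]) -> Vec3:
    """Rotate v by unit quaternion q = (x, y, z, w): v' = v + w*t + u x t, with t = 2 u x v."""
    u, w = q[:3], q[3]
    t = tuple(2.0 * c for c in cross(u, v))
    ut = cross(u, t)
    return tuple(v[i] + w * t[i] + ut[i] for i in range(3))


def axes_endpoints(position: Sequence[float], q: Sequence[float],
                   length: float = 0.1) -> Dict[str, Vec3]:
    """Endpoints of the x (red), y (green), and z (blue) segments drawn from `position`."""
    ends = {}
    for name, axis in (("x", (1.0, 0.0, 0.0)), ("y", (0.0, 1.0, 0.0)), ("z", (0.0, 0.0, 1.0))):
        d = rotate(q, axis)
        ends[name] = tuple(position[i] + length * d[i] for i in range(3))
    return ends


if __name__ == "__main__":
    # Made-up pose: rotated 90 degrees about the frame's z axis.
    print(axes_endpoints((0.1, -0.05, 0.6), (0.0, 0.0, 0.7071068, 0.7071068)))
```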

Note

For best results, crop or resize input images to the same dimensions your DNN model is expecting.
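One way to follow this advice without distorting the image is to center-crop to the model's aspect ratio first and then resize. The sketch below computes the crop rectangle only; it assumes a 640x480 network input (the resolution the configuration file's camera intrinsics are scaled to), so check the input size your model actually expects. The resize itself can be done with any image library.

```python
from typing import Tuple


def center_crop_box(width: int, height: int,
                    target_w: int = 640, target_h: int = 480) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered crop with the target aspect ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    # Compare aspect ratios by integer cross-multiplication to avoid float rounding.
    if width * target_h > height * target_w:
        # Too wide: keep the full height and trim the left and right sides.
        crop_w, crop_h = height * target_w // target_h, height
    else:
        # Too tall (or already matching): keep the full width and trim top and bottom.
        crop_w, crop_h = width, width * target_h // target_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


if __name__ == "__main__":
    print(center_crop_box(1280, 720))
```

If you crop, the principal point moves as well: subtract left and top from cx and cy before scaling the intrinsics for the resize.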

Try More Examples

To continue your exploration, check out the following suggested examples:

Use Different Models

Click here for more information on how to use NGC models.

Alternatively, consult the DOPE model repository to try other models.

| Model Name | Use Case |
|---|---|
| | The DOPE model repository. This should be used if |

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, please check here.

API

Usage

Two launch files are provided to use this package. The first launch file uses isaac_ros_tensor_rt and the second uses isaac_ros_triton; each also launches the components necessary to encode the input images and decode the DOPE network’s output.

Warning

For your specific application, these launch files may need to be modified. Please consult the available components to see the configurable parameters.

| Launch File | Components Used |
|---|---|
| | |

Warning

There is also a config file that should be modified: isaac_ros_dope/config/dope_config.yaml.

DopeDecoderNode

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
| | | | The name of the configuration file to parse. Note: The node will look for that file name under |
| object_name | | | The object class the DOPE network is detecting and the DOPE decoder is interpreting. This name should be listed in the configuration file along with its corresponding cuboid dimensions. |

Configuration File

The DOPE configuration file, which can be found at isaac_ros_dope/config/dope_config.yaml, may need to be modified. Specifically, you will need to set the object type in the DopeDecoderNode to one that is listed in the dope_config.yaml file, so that the DOPE decoder node picks the right parameters to transform the belief maps from the inference node into object poses. By default, the dope_config.yaml file uses the camera intrinsics of a RealSense camera; if you are using a different camera, update the camera_matrix field with your camera's intrinsics, scaled to 640x480.
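Scaling intrinsics for a plain resize multiplies the x-row entries (fx, skew, cx) by the horizontal factor and the y-row entries (fy, cy) by the vertical factor. The sketch below assumes camera_matrix holds the 3x3 matrix as nine row-major values and that the image is resized, not cropped, from its native resolution to 640x480; the example numbers are made up, not RealSense values.

```python
from typing import List, Sequence, Tuple


def scale_camera_matrix(k: Sequence[float], src_size: Tuple[int, int],
                        dst_size: Tuple[int, int] = (640, 480)) -> List[float]:
    """Rescale a row-major 3x3 intrinsics matrix from src (w, h) to dst (w, h).

    Assumes the image is resized (not cropped) from src_size to dst_size.
    """
    if len(k) != 9:
        raise ValueError("expected 9 values: a row-major 3x3 camera matrix")
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    fx, skew, cx, _, fy, cy, _, _, _ = k
    # x-row entries scale with the horizontal factor, y-row entries with the vertical one.
    return [fx * sx, skew * sx, cx * sx,
            0.0, fy * sy, cy * sy,
            0.0, 0.0, 1.0]


if __name__ == "__main__":
    # Made-up intrinsics for a hypothetical 1280x960 camera.
    print(scale_camera_matrix([1000.0, 0.0, 640.0, 0.0, 1000.0, 480.0, 0.0, 0.0, 1.0], (1280, 960)))
```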

Note

The object_name should correspond to one of the objects listed in the DOPE configuration file, with the corresponding model used.
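Conceptually, DOPE predicts nine 2D keypoints per object (the eight corners of its 3D bounding cuboid plus the centroid), and the decoder recovers the pose by matching them to the corresponding 3D points built from the object's cuboid dimensions, which is why object_name must exist in the configuration. The sketch below builds those nine 3D points from a dimensions table. EXAMPLE_DIMENSIONS, its object name, values, and units are hypothetical stand-ins for the real file's entries, and the corner order is an arbitrary choice that may not match DOPE's own ordering.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]

# Hypothetical stand-in for the per-object cuboid dimensions in dope_config.yaml.
# The object name and values are illustrative, not taken from the real file.
EXAMPLE_DIMENSIONS: Dict[str, Sequence[float]] = {"ExampleBox": (10.0, 20.0, 30.0)}


def cuboid_keypoints(object_name: str,
                     dimensions: Dict[str, Sequence[float]]) -> List[Point3]:
    """Return the 8 corners of the object's cuboid followed by its centroid.

    The cuboid is centered on the object origin; the corner order is arbitrary
    and is not guaranteed to match DOPE's own keypoint order.
    """
    if object_name not in dimensions:
        known = ", ".join(sorted(dimensions)) or "none"
        raise KeyError(f"object_name {object_name!r} is not in the configuration (known: {known})")
    half = [d / 2.0 for d in dimensions[object_name]]
    if len(half) != 3:
        raise ValueError("expected three cuboid dimensions")
    corners = [(sx * half[0], sy * half[1], sz * half[2])
               for sx, sy, sz in product((1, -1), repeat=3)]
    return corners + [(0.0, 0.0, 0.0)]


if __name__ == "__main__":
    for point in cuboid_keypoints("ExampleBox", EXAMPLE_DIMENSIONS):
        print(point)
```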

ROS Topics Subscribed

| ROS Topic | Interface | Description |
|---|---|---|
| | | The tensor that represents the belief maps, which are outputs from the DOPE network. |
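Each belief map scores, per cell, how likely one keypoint is to lie there, so the decoder's first task is to find peaks in every map. The sketch below shows a simple version of that idea on a single map stored as nested lists: it keeps cells above a threshold that are at least as large as all eight neighbors. The threshold is a hypothetical value, plateaus of equal values yield several peaks, and the real decoder may smooth or refine peaks differently. If the belief maps are smaller than the input image, peak coordinates must be scaled back to image pixels.

```python
from typing import List, Tuple


def find_peaks(belief: List[List[float]], threshold: float = 0.1) -> List[Tuple[int, int, float]]:
    """Return (row, col, value) of local maxima above `threshold`, strongest first."""
    rows = len(belief)
    cols = len(belief[0]) if rows else 0
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = belief[r][c]
            if v <= threshold:
                continue
            # Compare against the up-to-8 neighbors that lie inside the map.
            neighbors = (
                belief[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            )
            if all(v >= n for n in neighbors):
                peaks.append((r, c, v))
    return sorted(peaks, key=lambda p: p[2], reverse=True)


if __name__ == "__main__":
    demo = [[0.0] * 5 for _ in range(5)]
    demo[1][1], demo[3][3] = 0.9, 0.5
    print(find_peaks(demo))
```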

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
| /dope/pose_array | geometry_msgs/PoseArray | An array of poses of the objects detected by the DOPE network and interpreted by the DOPE decoder node. |