Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1 continues to be available on the release-2.1 branches of the Isaac ROS GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

isaac_ros_tensor_rt

Source code on GitHub.

Quickstart

Note

This quickstart demonstrates isaac_ros_tensor_rt in an image segmentation application.

Therefore, this demo features an encoder and decoder node to perform pre-processing and post-processing respectively. In reality, the raw inference result is simply a tensor.

To use the packages in other useful contexts, please refer here.

Follow steps 1-6 of the Quickstart with Triton

Install this package’s dependencies.

sudo apt-get install -y ros-humble-isaac-ros-tensor-rt

Run the following launch files to spin up a demo of this package. Launch TensorRT:

ros2 launch isaac_ros_unet isaac_ros_unet_tensor_rt.launch.py engine_file_path:=/tmp/models/peoplesemsegnet_shuffleseg/1/model.plan input_binding_names:=['input_2:0'] output_binding_names:=['argmax_1'] network_output_type:='argmax'

Then open another terminal, and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && ./scripts/run_dev.sh

Then, play the ROS bag from isaac_ros_image_segmentation:

ros2 bag play -l src/isaac_ros_image_segmentation/resources/rosbags/unet_sample_data/

Visualize and validate the output of the package:

In a third terminal, enter the Docker container:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && ./scripts/run_dev.sh

Then echo the inference result:

ros2 topic echo /tensor_sub

The expected result should look like this:

header:
  stamp:
    sec: <time>
    nanosec: <time>
  frame_id: <frame-id>
tensors:
- name: output_tensor
  shape:
    rank: 4
    dims:
    - 1
    - 544
    - 960
    - 1
  data_type: 5
  strides:
  - 2088960
  - 3840
  - 4
  - 4
  data: [...]

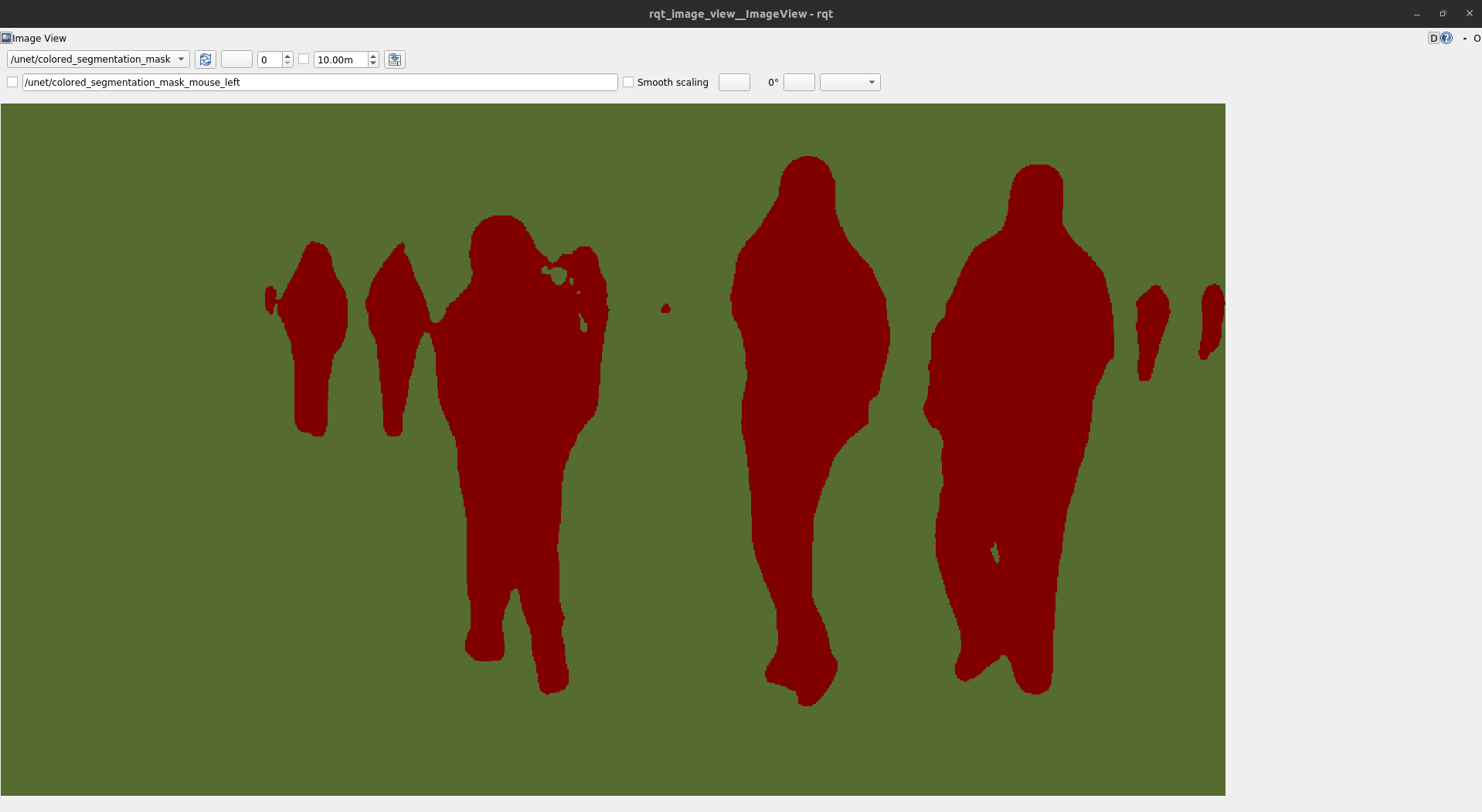
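The strides in the echoed message follow from the tensor dimensions. The sketch below recomputes them, assuming a densely packed row-major layout and 4 bytes per element (an assumption that is consistent with the innermost stride of 4 shown above). The function name `row_major_strides` is hypothetical and is not part of the package.

```python
from typing import List


def row_major_strides(dims: List[int], element_size: int) -> List[int]:
    """Return byte strides for a densely packed, row-major tensor."""
    strides = [0] * len(dims)
    step = element_size  # the innermost axis advances one element at a time
    for axis in range(len(dims) - 1, -1, -1):
        strides[axis] = step
        step *= dims[axis]  # each outer axis skips a full inner block
    return strides


# Dimensions taken from the echoed message; 4-byte elements are assumed.
dims = [1, 544, 960, 1]
print(row_major_strides(dims, element_size=4))
```

With these inputs the computed strides are 2088960, 3840, 4 and 4 bytes, matching the message.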

This raw tensor output is not very user-friendly. It’s typically more desirable to see the network output after it’s decoded. The result of the entire image segmentation pipeline can be visualized by launching rqt_image_view:

ros2 run rqt_image_view rqt_image_view

Then inside the rqt_image_view GUI, change the topic to /unet/colored_segmentation_mask to view a colorized segmentation mask.

Note

A launch file called isaac_ros_tensor_rt.launch.py is provided in this package to launch only TensorRT.

Warning

The TensorRT Inference node expects tensors as input and outputs tensors.

The inference node itself is generic: it works as long as the input data can be converted into a tensor and the model in use was correctly trained on that kind of input data.

For example, TensorRT can be used with models that are trained on images, audio and more, but the necessary encoder and decoder nodes must be implemented.
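To illustrate what "converting input data into a tensor" can mean for images, here is a minimal pure-Python sketch. It is not the Isaac ROS encoder node: the function name `image_to_nchw`, the channel-first (NCHW) layout, and the scaling to the range 0 to 1 are all assumptions chosen for illustration, and a real model may expect a different layout or normalization.

```python
from typing import List

Pixel = List[int]  # [R, G, B], each value in 0..255


def image_to_nchw(image: List[List[Pixel]]) -> List[float]:
    """Flatten an H x W x 3 image into a 1 x 3 x H x W float tensor."""
    height, width = len(image), len(image[0])
    tensor: List[float] = []
    for channel in range(3):          # channel-first ordering (assumed)
        for row in range(height):
            for col in range(width):
                # Scale 8-bit values to [0, 1] (assumed normalization).
                tensor.append(image[row][col][channel] / 255.0)
    return tensor


# A 1 x 2 image: one red pixel followed by one green pixel.
tiny = [[[255, 0, 0], [0, 255, 0]]]
print(image_to_nchw(tiny))
```

A decoder performs the reverse kind of step on the output tensor, for example turning per-pixel class indices into a colorized mask.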

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, please check here.

API

Usage

This package contains a launch file that solely launches isaac_ros_tensor_rt.

Warning

For your specific application, these launch files may need to be modified. Please consult the available components to see the configurable parameters.

Additionally, for most applications, an encoder node for pre-processing your data source and decoder for post-processing the inference output is required.

| Launch File | Components Used |
|---|---|
| | TensorRTNode |

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
| | | | The absolute path to your model file in the local file system (the model file must be |
| | | | The absolute path to either where you want your TensorRT engine plan to be generated (from your model file) or where your pre-generated engine plan file is located E.g. |
| | | | If set to true, the node will always try to generate a TensorRT engine plan from your model file and needs to be set to false to use the pre-generated TensorRT engine plan |
| | | | A list of tensor names to be bound to specified input bindings names. Bindings occur in sequential order, so the first name here will be mapped to the first name in input_binding_names |
| | | | A list of input tensor binding names specified by model E.g. |
| | | | A list of input tensor NITROS formats. This should be given in sequential order E.g. |
| | | | A list of tensor names to be bound to specified output binding names |
| | | | A list of tensor names to be bound to specified output binding names E.g. |
| | | | A list of output tensor NITROS formats. This should be given in sequential order E.g. |
| | | | If set to true, the node will enable verbose logging to console from the internal TensorRT execution |
| | | | The size of the working space in bytes |
| | | | The maximum possible batch size in case the first dimension is dynamic and used as the batch size |
| | | | The DLA Core to use. Fallback to GPU is always enabled. The default setting is GPU only |
| | | | Enables building a TensorRT engine plan file which uses FP16 precision for inference. If this setting is false, the plan file will use FP32 precision |
| | | | Ignores dimensions of 1 for the input-tensor dimension check |
| | | | The number of pre-allocated memory output blocks, should not be less than |
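The table above states that tensor names are bound to binding names in sequential order. The sketch below shows that pairing rule in isolation. The helper `pair_bindings` and the tensor name `input_tensor` are hypothetical; the binding names `input_2:0` and `argmax_1` and the tensor name `output_tensor` come from the quickstart. This only illustrates the ordering rule and is not how the node is implemented.

```python
from typing import Dict, List


def pair_bindings(tensor_names: List[str], binding_names: List[str]) -> Dict[str, str]:
    """Map each tensor name to the binding name at the same position."""
    if len(tensor_names) != len(binding_names):
        raise ValueError(
            f"{len(tensor_names)} tensor names cannot be bound to "
            f"{len(binding_names)} binding names"
        )
    # Sequential binding: first name to first binding, second to second, ...
    return dict(zip(tensor_names, binding_names))


# 'input_tensor' is a hypothetical name used for illustration only.
print(pair_bindings(["input_tensor"], ["input_2:0"]))
print(pair_bindings(["output_tensor"], ["argmax_1"]))
```

Because the pairing is positional, reordering one list without the other silently binds tensors to the wrong model inputs or outputs, so a length check like the one above is a cheap sanity test before launching.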

ROS Topics Subscribed

| ROS Topic | Type | Description |
|---|---|---|
| | | The input tensor stream |

ROS Topics Published

| ROS Topic | Type | Description |
|---|---|---|
| | | The tensor list of output tensors from the model inference |