Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1

continues to be available on the release-2.1 branches of the Isaac ROS

GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

Tutorial for Visual SLAM Using a RealSense Camera with Integrated IMU

Overview

This tutorial walks you through setting up Isaac ROS Visual SLAM with a Realsense camera.

Note

The launch file

provided in this tutorial is designed for a RealSense camera with

integrated IMU. If you want to run this tutorial with a RealSense

camera without an IMU (like RealSense D435), then change the

enable_imu_fusion parameter in the launch file to False.

Note

This tutorial requires a compatible RealSense camera from the list of available cameras.

Tutorial Walkthrough - VSLAM Execution

Complete the RealSense setup tutorial.

Complete the quickstart here.

Follow the IMU page to obtain the IMU Noise Model parameters. Parameters can be obtained through the datasheet for the IMU or from a ROS package such as this.

[Terminal 1] Run

realsense-cameranode andvisual_slamnode.Make sure you have your RealSense camera attached to the system, and then start the Isaac ROS container.

isaac_ros_container

Or if you did not add the command in step 1-3 of the quickstart section:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh ${ISAAC_ROS_WS}

[Terminal 1] Inside the container, build and source the workspace:

cd /workspaces/isaac_ros-dev && \ colcon build --symlink-install && \ source install/setup.bash

[Terminal 1] Run the launch file, which launches the example and waits for 5 seconds:

ros2 launch isaac_ros_visual_slam isaac_ros_visual_slam_realsense.launch.py

[Terminal 2] Attach a second terminal to check the operation.

Attach another terminal to the running container for issuing other ROS2 commands.

isaac_ros_container

Verify that you can see all the ROS2 topics expected.

ros2 topic list

Output example:

/camera/accel/imu_info /camera/accel/metadata /camera/accel/sample /camera/extrinsics/depth_to_accel /camera/extrinsics/depth_to_gyro /camera/extrinsics/depth_to_infra1 /camera/extrinsics/depth_to_infra2 /camera/gyro/imu_info /camera/gyro/metadata /camera/gyro/sample /camera/imu /camera/infra1/camera_info /camera/infra1/image_rect_raw /camera/infra1/image_rect_raw/compressed /camera/infra1/image_rect_raw/compressedDepth /camera/infra1/image_rect_raw/theora /camera/infra1/metadata /camera/infra2/camera_info /camera/infra2/image_rect_raw /camera/infra2/image_rect_raw/compressed /camera/infra2/image_rect_raw/compressedDepth /camera/infra2/image_rect_raw/theora /camera/infra2/metadata /parameter_events /rosout /tf /tf_static /visual_slam/imu /visual_slam/status /visual_slam/tracking/odometry /visual_slam/tracking/slam_path /visual_slam/tracking/vo_path /visual_slam/tracking/vo_pose /visual_slam/tracking/vo_pose_covariance /visual_slam/vis/gravity /visual_slam/vis/landmarks_cloud /visual_slam/vis/localizer /visual_slam/vis/localizer_loop_closure_cloud /visual_slam/vis/localizer_map_cloud /visual_slam/vis/localizer_observations_cloud /visual_slam/vis/loop_closure_cloud /visual_slam/vis/observations_cloud /visual_slam/vis/pose_graph_edges /visual_slam/vis/pose_graph_edges2 /visual_slam/vis/pose_graph_nodes /visual_slam/vis/velocity

Check the frequency of the

realsense-cameranode’s output frequency.ros2 topic hz /camera/infra1/image_rect_raw --window 20

Example output:

average rate: 89.714 min: 0.011s max: 0.011s std dev: 0.00025s window: 20 average rate: 90.139 min: 0.010s max: 0.012s std dev: 0.00038s window: 20 average rate: 89.955 min: 0.011s max: 0.011s std dev: 0.00020s window: 20 average rate: 89.761 min: 0.009s max: 0.013s std dev: 0.00074s window: 20

Ctrl+cto stop the output.You can also check the frequency of IMU topic.

ros2 topic hz /camera/imu --window 20

Example output:

average rate: 199.411 min: 0.004s max: 0.006s std dev: 0.00022s window: 20 average rate: 199.312 min: 0.004s max: 0.006s std dev: 0.00053s window: 20 average rate: 200.409 min: 0.005s max: 0.005s std dev: 0.00007s window: 20 average rate: 200.173 min: 0.004s max: 0.006s std dev: 0.00028s window: 20

Verify that you are getting the output from the

visual_slamnode at the same rate as the input.ros2 topic hz /visual_slam/tracking/odometry --window 20

Example output:

average rate: 58.086 min: 0.002s max: 0.107s std dev: 0.03099s window: 20 average rate: 62.370 min: 0.001s max: 0.109s std dev: 0.03158s window: 20 average rate: 90.559 min: 0.009s max: 0.013s std dev: 0.00066s window: 20 average rate: 85.612 min: 0.002s max: 0.100s std dev: 0.02079s window: 20 average rate: 90.032 min: 0.010s max: 0.013s std dev: 0.00059s window: 20

Tutorial Walkthrough - Visualization

You have two options for checking the visual_slam

output:

Live visualization: Run RViz2 live while running

realsense-cameranode andvisual_slamnodes.Offline visualization: Record rosbag file and check the recorded data offline (possibly on a different machine).

Running RViz2 on a remote PC over the network can be challenging and can be

difficult especially when you have image message topics to subscribe because

of the added burden on the ROS 2 network transport.

Working on RViz2 in a X11-forwarded window can also be difficult because of the network speed limitation.

Typically, if you are running visual_slam on Jetson, it is generally

recommended that you NOT evaluate with live visualization (1).

Live Visualization

Offline Visualization

[Terminal 2] Save a rosbag file.

Record the output in your rosbag file, along with the input data for later visual inspection.

export ROSBAG_NAME=courtyard-d435i ros2 bag record -o ${ROSBAG_NAME} \ /camera/imu /camera/accel/metadata /camera/gyro/metadata \ /camera/infra1/camera_info /camera/infra1/image_rect_raw \ /camera/infra1/metadata \ /camera/infra2/camera_info /camera/infra2/image_rect_raw \ /camera/infra2/metadata \ /tf_static /tf \ /visual_slam/status \ /visual_slam/tracking/odometry \ /visual_slam/tracking/slam_path /visual_slam/tracking/vo_path \ /visual_slam/tracking/vo_pose /visual_slam/tracking/vo_pose_covariance \ /visual_slam/vis/landmarks_cloud /visual_slam/vis/loop_closure_cloud \ /visual_slam/vis/observations_cloud \ /visual_slam/vis/pose_graph_edges /visual_slam/vis/pose_graph_edges2 \ /visual_slam/vis/pose_graph_nodes ros2 bag info ${ROSBAG_NAME}

If you plan to run the rosbag on a remote machine (PC) for evaluation, you can send the rosbag file to your remote machine.

export IP_PC=192.168.1.100 scp -r ${ROSBAG_NAME} ${PC_USER}@${IP_PC}:/home/${PC_USER}/workspaces/isaac_ros-dev/

[Terminal 1] Launch RViz2.

If you are SSHing into Jetson from your PC, make sure you enabled X forwarding by adding

-Xoption with SSH command:ssh -X ${USERNAME_ON_JETSON}@${IP_JETSON}

Launch the Isaac ROS container:

isaac_ros_container

Run RViz with a configuration file for visualizing a set of messages from Visual SLAM node.

cd /workspaces/isaac_ros-dev rviz2 -d src/isaac_ros_visual_slam/isaac_ros_visual_slam/rviz/vslam_keepall.cfg.rviz

[Terminal 2] Playback the recorded rosbag.

Attach another terminal to the running container.

isaac_ros_container

Play the recorded rosbag file.

ros2 bag play ${ROSBAG_NAME}

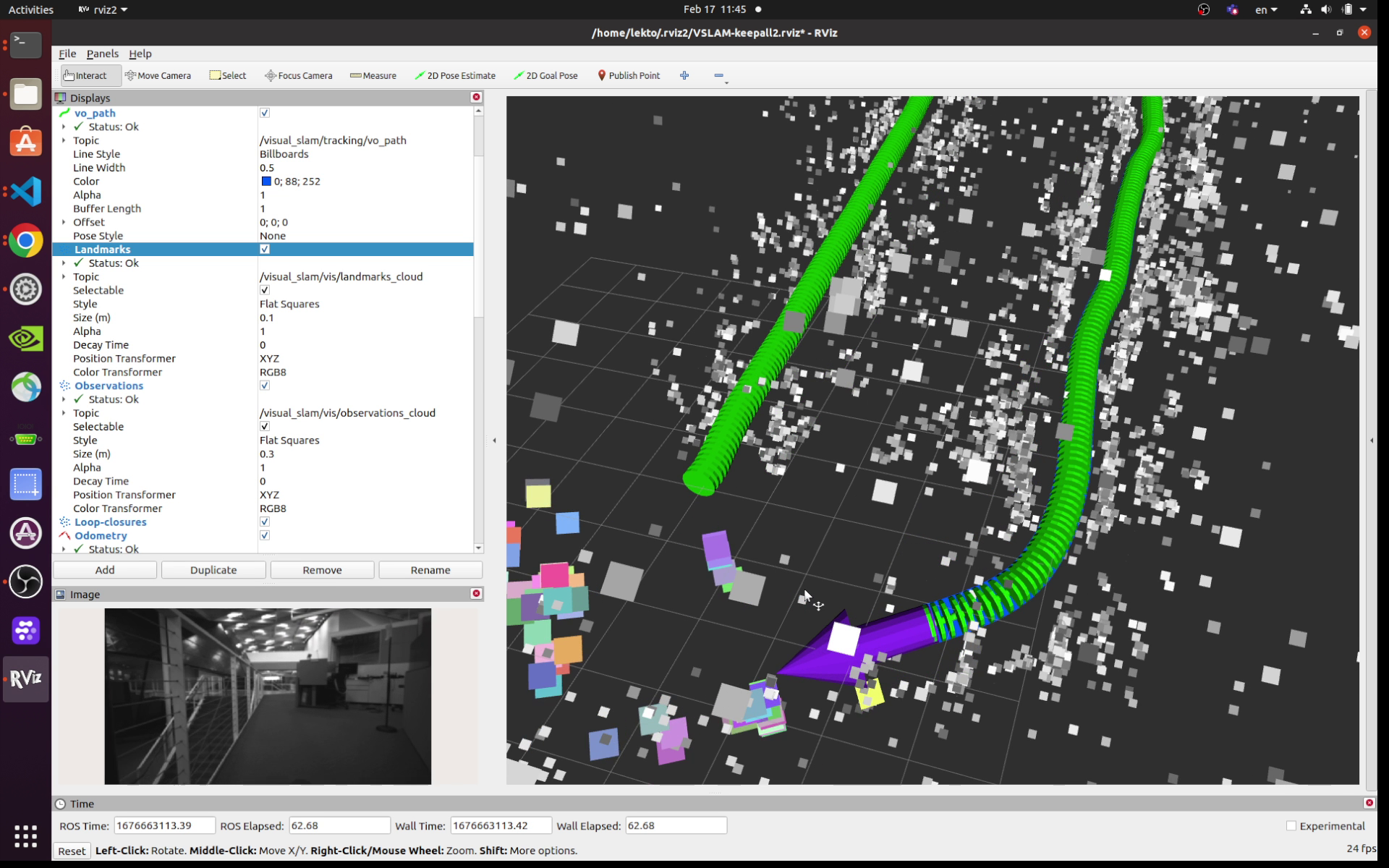

RViz starts showing a visualization similar to the following: