Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1 continues to be available on the release-2.1 branches of the Isaac ROS GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

isaac_ros_centerpose

Source code on GitHub.

Quickstart

Complete steps 1-4 of the quickstart here.

Install this package’s dependencies.

sudo apt-get install -y ros-humble-isaac-ros-centerpose ros-humble-isaac-ros-triton ros-humble-isaac-ros-dnn-image-encoder

Create a models repository with version 1:

mkdir -p /tmp/models/centerpose_shoe/1

Copy the test model located in isaac_ros_centerpose into /tmp/models/centerpose_shoe/1, and make sure it is named model.onnx:

cp /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_centerpose/test/models/centerpose_shoe.onnx /tmp/models/centerpose_shoe/1/model.onnx

Create a configuration file for this model at /tmp/models/centerpose_shoe/config.pbtxt. The name in this file must match the model repository name. The example at isaac_ros_centerpose/test/models/centerpose_shoe/config.pbtxt can be copied directly to /tmp/models/centerpose_shoe/config.pbtxt:

cp /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_centerpose/test/models/centerpose_shoe/config.pbtxt /tmp/models/centerpose_shoe/config.pbtxt
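Because a mismatch between the configured name and the directory name is an easy mistake, the layout built in the steps above can be checked with a small script. This is a minimal sketch, assuming the layout used in this quickstart (`<root>/<model>/config.pbtxt` and `<root>/<model>/1/model.onnx`) and assuming the top-level `name: "..."` entry in config.pbtxt is written unindented; it uses a regular expression rather than a full protobuf text-format parser. The function name `check_model_repository` is hypothetical.

```python
import re
from pathlib import Path
from typing import List

# Matches an unindented `name: "..."` line, assumed to be the top-level model name.
# Nested entries (e.g. input/output names) are normally indented and are skipped.
_TOP_LEVEL_NAME = re.compile(r'^name\s*:\s*"([^"]*)"', re.MULTILINE)


def check_model_repository(repo_root: str, model_name: str, version: str = "1") -> List[str]:
    """Return readable problems for one model entry; an empty list means it looks OK."""
    problems = []
    model_dir = Path(repo_root) / model_name

    config = model_dir / "config.pbtxt"
    if not config.is_file():
        problems.append(f"missing {config}")
    else:
        match = _TOP_LEVEL_NAME.search(config.read_text())
        if match is None:
            problems.append(f"no top-level name field in {config}")
        elif match.group(1) != model_name:
            problems.append(
                f"config name {match.group(1)!r} does not match directory {model_name!r}"
            )

    # The versioned subdirectory must contain the copied ONNX model.
    model_file = model_dir / version / "model.onnx"
    if not model_file.is_file():
        problems.append(f"missing {model_file}")
    return problems
```

Once the steps above are complete, `check_model_repository("/tmp/models", "centerpose_shoe")` should return an empty list.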

To get a TensorRT engine plan file for use with Triton, convert the ONNX model into a TensorRT engine plan file using the built-in TensorRT converter trtexec:

/usr/src/tensorrt/bin/trtexec --onnx=/tmp/models/centerpose_shoe/1/model.onnx --saveEngine=/tmp/models/centerpose_shoe/1/model.plan
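If the conversion is scripted, the same trtexec invocation can be built as an argument list. A minimal sketch: it uses only the `--onnx` and `--saveEngine` flags from the command above, and the helper names `trtexec_command` and `build_engine` are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List

TRTEXEC = "/usr/src/tensorrt/bin/trtexec"  # location used in the command above


def trtexec_command(model_dir: str, version: str = "1", trtexec: str = TRTEXEC) -> List[str]:
    """Arguments converting <model_dir>/<version>/model.onnx into model.plan next to it."""
    version_dir = Path(model_dir) / version
    return [
        trtexec,
        f"--onnx={version_dir / 'model.onnx'}",
        f"--saveEngine={version_dir / 'model.plan'}",
    ]


def build_engine(model_dir: str) -> None:
    # check=True raises CalledProcessError if trtexec exits with a non-zero status.
    subprocess.run(trtexec_command(model_dir), check=True)
```

For this quickstart, `build_engine("/tmp/models/centerpose_shoe")` runs the same command as the step above.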

Start isaac_ros_centerpose using the launch file:

ros2 launch isaac_ros_centerpose isaac_ros_centerpose_triton.launch.py model_name:=centerpose_shoe model_repository_paths:=['/tmp/models']
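When the quickstart is driven from a script (for example, a test harness), the launch command can be assembled as an argument list. A minimal sketch: the helper names are hypothetical, and it only reproduces the two launch arguments shown above; how the launch file parses them is not covered here.

```python
import subprocess
from typing import List, Sequence


def centerpose_triton_launch(model_name: str, repository_paths: Sequence[str]) -> List[str]:
    """Argument list equivalent to the ros2 launch command above."""
    # Render the paths as a bracketed, single-quoted list, e.g. ['/tmp/models'].
    paths = ", ".join(f"'{p}'" for p in repository_paths)
    return [
        "ros2", "launch", "isaac_ros_centerpose", "isaac_ros_centerpose_triton.launch.py",
        f"model_name:={model_name}",
        f"model_repository_paths:=[{paths}]",
    ]


def run_launch(model_name: str, repository_paths: Sequence[str]) -> None:
    # Blocks until the launched nodes exit; check=True raises on a non-zero exit status.
    subprocess.run(centerpose_triton_launch(model_name, repository_paths), check=True)
```

Passing a list to `subprocess.run` bypasses the shell, so the brackets and quotes reach `ros2 launch` exactly as written, without shell globbing or quote removal.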

Then open another terminal and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Then, play the ROS bag:

ros2 bag play -l src/isaac_ros_pose_estimation/resources/rosbags/centerpose_rosbag/

In a third terminal, enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

You should then be able to get the poses of the objects in the images through ros2 topic echo:

ros2 topic echo /centerpose/detections

Then launch

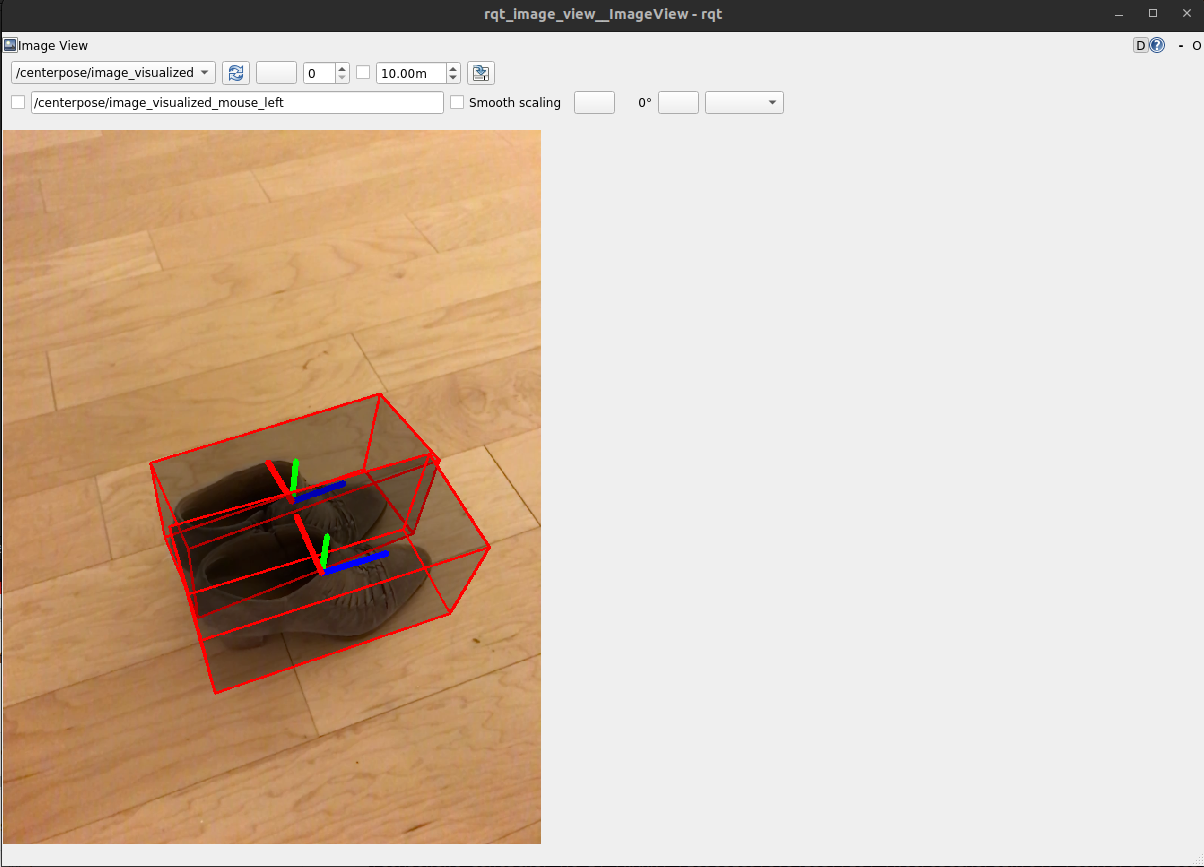

rqt_image_view:ros2 run rqt_image_view rqt_image_view

Then inside the

rqt_image_viewGUI, change the topic to/centerpose/image_visualizedto view the detections projected back onto the input image.

Note

The launch file also starts a visualization node.

An analogous launch file, isaac_ros_centerpose_tensor_rt.launch.py, can be used if TensorRT is preferred over Triton.

Warning

CenterPose only produces poses up to a scale. There are a few ways to obtain the scale factor; the original paper simply measured the height of each object.

This scale factor can be set using the cuboid_scaling_factor parameter.
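The scale ambiguity follows from the pinhole camera model: multiplying every 3D point by the same factor s leaves its projection unchanged, so a single image cannot determine s, while one known metric length (such as a measured height) fixes it. The sketch below assumes a pose is represented as a camera-frame translation plus cuboid dimensions, with the predicted height in the same arbitrary unit; how the decoder applies cuboid_scaling_factor internally is not described here, so this illustrates the idea rather than the node's implementation. All function names are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def scale_factor(measured_height: float, predicted_height: float) -> float:
    """Metric scale s such that s * predicted_height == measured_height."""
    if predicted_height <= 0:
        raise ValueError("predicted_height must be positive")
    return measured_height / predicted_height


def apply_scale(translation: Vec3, dimensions: Vec3, s: float) -> Tuple[Vec3, Vec3]:
    """Scale the up-to-scale translation and cuboid size; the rotation is unaffected."""
    scaled_t = (s * translation[0], s * translation[1], s * translation[2])
    scaled_d = (s * dimensions[0], s * dimensions[1], s * dimensions[2])
    return scaled_t, scaled_d


def project(point: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point (z > 0) to pixel coordinates."""
    x, y, z = point
    return fx * x / z + cx, fy * y / z + cy
```

Projecting a point before and after `apply_scale` gives the same pixel, which is why the scale must come from outside the image.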

Use Different Models

NGC hosts CenterPose models for other object classes. Check them out here.

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, please check here.

API

Usage

Two launch files are provided for this package. The first uses isaac_ros_tensor_rt and the second uses isaac_ros_triton; both also launch the components needed to encode the input images and decode the CenterPose network's output.

Warning

For your specific application, these launch files may need to be modified. Please consult the available components to see the configurable parameters.

| Launch File | Components Used |
|---|---|
|  | DnnImageEncoderNode, TensorRTNode, CenterPoseDecoderNode, CenterPoseVisualizerNode |
|  | DnnImageEncoderNode, TritonNode, CenterPoseDecoderNode, CenterPoseVisualizerNode |

CenterPoseDecoderNode

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
|  |  |  | An array of two integers that represent the size of the 2D keypoint decoding from the network output. |
|  |  |  | This parameter is used to scale the cuboid used for calculating the size of the objects detected. |
|  |  |  | The threshold for score values to discard. Any score less than this value is discarded. |
|  |  |  | The name of the category instance / object detected. |
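The score threshold description implies a simple keep-or-drop rule: scores below the threshold are removed and a score equal to it survives. A minimal sketch of that rule; `Candidate` and `filter_by_score` are hypothetical names for illustration, not part of the package API.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    """Hypothetical stand-in for one decoded detection before thresholding."""
    label: str
    score: float


def filter_by_score(candidates: List[Candidate], score_threshold: float) -> List[Candidate]:
    # Scores strictly below the threshold are discarded; a score equal to it is kept.
    return [c for c in candidates if c.score >= score_threshold]
```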

ROS Topics Subscribed

| ROS Topic | Interface | Description |
|---|---|---|
|  |  | The TensorList that contains the outputs of the CenterPose network. |
|  |  | The CameraInfo of the original, undistorted image. |

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
|  |  | A |

CenterPoseVisualizerNode

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
|  |  |  | Whether to draw the axes of the detected pose. |
|  |  |  | The color of the bounding box drawn. Only the lowest 24 bits are used for the color. |
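The bounding-box color is an integer of which only the lowest 24 bits matter, which suggests three 8-bit channels. The sketch below shows the masking and the pack/unpack arithmetic; the channel order (for example 0xRRGGBB versus 0xBBGGRR) is not stated in this document, so the channels are named neutrally, and the helper names are hypothetical.

```python
from typing import Tuple


def unpack_bbox_color(color: int) -> Tuple[int, int, int]:
    """Split the lowest 24 bits of `color` into three 8-bit channels, high byte first."""
    rgb = color & 0xFFFFFF  # anything above bit 23 is ignored
    return (rgb >> 16) & 0xFF, (rgb >> 8) & 0xFF, rgb & 0xFF


def pack_bbox_color(c0: int, c1: int, c2: int) -> int:
    """Inverse of unpack_bbox_color for channel values in [0, 255]."""
    for c in (c0, c1, c2):
        if not 0 <= c <= 255:
            raise ValueError(f"channel value {c} out of range")
    return (c0 << 16) | (c1 << 8) | c2
```

For example, `0xFF00FF00` and `0x0000FF00` select the same color, because the top byte is masked off.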

ROS Topics Subscribed

| ROS Topic | Interface | Description |
|---|---|---|
|  |  | The original, undistorted image. |
|  |  | The CameraInfo of the original, undistorted image. |
|  |  | The detections made by the CenterPose decoder node. |

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
|  |  | An image containing the detection’s bounding box reprojected onto the image and, optionally, the axes of the detected objects. |
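Reprojecting a bounding box onto the image uses the camera intrinsics, which in ROS 2 are carried in the `k` field of sensor_msgs/CameraInfo as a row-major 3x3 matrix. The sketch below illustrates the pinhole reprojection of a cuboid's eight corners; it assumes a cuboid aligned with the camera axes (real detections also carry an orientation) and does not reproduce the visualizer's actual drawing code. The helper names are hypothetical.

```python
import itertools
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def cuboid_corners(center: Point3, dims: Point3) -> List[Point3]:
    """Eight corners of a cuboid aligned with the camera axes (rotation omitted)."""
    hx, hy, hz = (d / 2.0 for d in dims)
    return [
        (center[0] + sx * hx, center[1] + sy * hy, center[2] + sz * hz)
        for sx, sy, sz in itertools.product((-1.0, 1.0), repeat=3)
    ]


def project_points(points: Sequence[Point3], k: Sequence[float]) -> List[Tuple[float, float]]:
    """Project camera-frame points with a row-major 3x3 intrinsic matrix, as in CameraInfo.k."""
    pixels = []
    for x, y, z in points:
        # Homogeneous image coordinates: [u, v, w] = K @ [x, y, z].
        u = k[0] * x + k[1] * y + k[2] * z
        v = k[3] * x + k[4] * y + k[5] * z
        w = k[6] * x + k[7] * y + k[8] * z
        if w <= 0:
            raise ValueError("point is not in front of the camera")
        pixels.append((u / w, v / w))
    return pixels
```

Connecting the projected corners with line segments gives the kind of reprojected box shown on /centerpose/image_visualized.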