Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1 continues to be available on the release-2.1 branches of the Isaac ROS GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

Tutorial for DOPE Inference with Triton

Overview

This tutorial walks you through a graph that estimates the 6DOF pose of a target object with DOPE, using different backends. It takes monocular images from a rosbag as input. The backends shown are:

PyTorch and ONNX

TensorRT Plan files with Triton

PyTorch model with Triton

Note

The DOPE converter script only works on x86_64, so the ONNX model produced by following these steps must be copied to the Jetson.

Tutorial Walkthrough

Complete steps 1-6 of the quickstart here.

Make a directory called Ketchup inside /tmp/models, which will serve as the model repository. This will be versioned as 1. The downloaded model will be placed here:

mkdir -p /tmp/models/Ketchup/1 && \
  mv /tmp/models/Ketchup.pth /tmp/models/Ketchup/
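The directory layout described in this step can also be sketched in Python. This is a minimal illustration, not part of the Isaac ROS tooling: the function name prepare_model_repository and its parameters are hypothetical, and it assumes the downloaded weights sit at <models_root>/Ketchup.pth as in the command above.

```python
import shutil
from pathlib import Path


def prepare_model_repository(models_root: Path,
                             model_name: str = "Ketchup",
                             version: str = "1") -> Path:
    """Create <models_root>/<model_name>/<version>/ and move the weights next to it.

    Hypothetical helper that mirrors the mkdir/mv command of this step.
    """
    model_dir = models_root / model_name
    version_dir = model_dir / version
    # Same effect as `mkdir -p`: create parents, tolerate an existing directory.
    version_dir.mkdir(parents=True, exist_ok=True)

    # Move the downloaded .pth file into the model directory if it is present.
    downloaded = models_root / (model_name + ".pth")
    if downloaded.exists():
        shutil.move(str(downloaded), str(model_dir / downloaded.name))
    return version_dir
```

Calling prepare_model_repository(Path("/tmp/models")) would leave the weights at /tmp/models/Ketchup/Ketchup.pth and an empty version directory at /tmp/models/Ketchup/1, which is where the exported model file goes in the next step.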

Now select a backend. The PyTorch and ONNX options MUST be run on x86_64:

To run ONNX models with Triton, export the model into an ONNX file using the script provided under /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_dope/scripts/dope_converter.py:

python3 /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_dope/scripts/dope_converter.py --format onnx --input /tmp/models/Ketchup/Ketchup.pth --output /tmp/models/Ketchup/1/model.onnx --input_name INPUT__0 --output_name OUTPUT__0

To run TensorRT Plan files with Triton, first copy the ONNX model generated in the previous option to the target platform (e.g. a Jetson or an x86_64 machine). The model is assumed to be copied to /tmp/models/Ketchup/1/model.onnx inside the Docker container. Then use trtexec to convert the ONNX model to a plan model:

/usr/src/tensorrt/bin/trtexec --onnx=/tmp/models/Ketchup/1/model.onnx --saveEngine=/tmp/models/Ketchup/1/model.plan

To run a PyTorch model with Triton (PyTorch inference is supported on the x86_64 platform only), the model needs to be saved using torch.jit.save(). The downloaded DOPE model is saved with torch.save(). Export the DOPE model using the script provided under /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_dope/scripts/dope_converter.py:

python3 /workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/isaac_ros_dope/scripts/dope_converter.py --format pytorch --input /tmp/models/Ketchup/Ketchup.pth --output /tmp/models/Ketchup/1/model.pt
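The three backend options can be summarized as a small lookup from backend to command line. The sketch below only assembles the argument lists already given in this tutorial; the function name export_command and the backend keys "onnx", "tensorrt" and "pytorch" are hypothetical labels chosen for illustration.

```python
import shlex
from typing import List

# Paths and flags are copied from the commands in this tutorial.
CONVERTER = (
    "/workspaces/isaac_ros-dev/src/isaac_ros_pose_estimation/"
    "isaac_ros_dope/scripts/dope_converter.py"
)
MODEL_DIR = "/tmp/models/Ketchup"
VERSION_DIR = MODEL_DIR + "/1"


def export_command(backend: str) -> List[str]:
    """Return the argv for one backend option (backend keys are hypothetical)."""
    if backend == "onnx":  # must run on x86_64
        return [
            "python3", CONVERTER, "--format", "onnx",
            "--input", MODEL_DIR + "/Ketchup.pth",
            "--output", VERSION_DIR + "/model.onnx",
            "--input_name", "INPUT__0", "--output_name", "OUTPUT__0",
        ]
    if backend == "tensorrt":  # run on the target after model.onnx was copied there
        return [
            "/usr/src/tensorrt/bin/trtexec",
            "--onnx=" + VERSION_DIR + "/model.onnx",
            "--saveEngine=" + VERSION_DIR + "/model.plan",
        ]
    if backend == "pytorch":  # must run on x86_64
        return [
            "python3", CONVERTER, "--format", "pytorch",
            "--input", MODEL_DIR + "/Ketchup.pth",
            "--output", VERSION_DIR + "/model.pt",
        ]
    raise ValueError("unknown backend: {!r}".format(backend))


if __name__ == "__main__":
    for name in ("onnx", "tensorrt", "pytorch"):
        print(shlex.join(export_command(name)))
```

Each returned list could be passed to subprocess.run(cmd, check=True) inside the container. Note that the TensorRT option still depends on an ONNX file exported on an x86_64 machine first.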

Create a configuration file for this model at path /tmp/models/Ketchup/config.pbtxt. Note that name must be the same as the model repository directory. Depending on the platform selected in the previous step, a slightly different config.pbtxt file must be created: onnxruntime_onnx (.onnx file), tensorrt_plan (.plan file) or pytorch_libtorch (.pt file):

name: "Ketchup"
platform: <insert-platform>
max_batch_size: 0
input [
  {
    name: "INPUT__0"
    data_type: TYPE_FP32
    dims: [ 1, 3, 480, 640 ]
  }
]
output [
  {
    name: "OUTPUT__0"
    data_type: TYPE_FP32
    dims: [ 1, 25, 60, 80 ]
  }
]
version_policy: {
  specific {
    versions: [ 1 ]
  }
}

The <insert-platform> part should be replaced with the quoted platform string: "onnxruntime_onnx" for .onnx files, "tensorrt_plan" for .plan files and "pytorch_libtorch" for .pt files.

Note

The DOPE decoder currently works with the output of a DOPE network that has a fixed input size of 640 x 480, which are the default dimensions set in the script. In order to use input images of other sizes, make sure to crop or resize using ROS 2 nodes from Isaac ROS Image Pipeline or similar packages. If another image resolution is desired, please see here.

Note

The model file must be named model.<selected-platform-extension>.
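As an illustration of how the platform choice determines both the config.pbtxt contents and the model file name, here is a minimal sketch that renders the configuration shown in this step. The names render_config and model_filename are hypothetical, and the tensor names and dimensions are the fixed values from this tutorial.

```python
# Platform string -> model file extension, as listed in this tutorial.
PLATFORM_EXTENSIONS = {
    "onnxruntime_onnx": ".onnx",
    "tensorrt_plan": ".plan",
    "pytorch_libtorch": ".pt",
}

# Doubled braces are literal braces for str.format().
CONFIG_TEMPLATE = """\
name: "{name}"
platform: "{platform}"
max_batch_size: 0
input [
  {{
    name: "INPUT__0"
    data_type: TYPE_FP32
    dims: [ 1, 3, 480, 640 ]
  }}
]
output [
  {{
    name: "OUTPUT__0"
    data_type: TYPE_FP32
    dims: [ 1, 25, 60, 80 ]
  }}
]
version_policy: {{ specific {{ versions: [ 1 ] }} }}
"""


def render_config(platform: str, model_name: str = "Ketchup") -> str:
    """Return config.pbtxt text for one of the three platforms (hypothetical helper)."""
    if platform not in PLATFORM_EXTENSIONS:
        raise ValueError("unsupported platform: {!r}".format(platform))
    return CONFIG_TEMPLATE.format(name=model_name, platform=platform)


def model_filename(platform: str) -> str:
    """Return the file name expected inside the version directory."""
    return "model" + PLATFORM_EXTENSIONS[platform]
```

For example, render_config("tensorrt_plan") could be written to /tmp/models/Ketchup/config.pbtxt, and model_filename("tensorrt_plan") gives model.plan, the name the file in /tmp/models/Ketchup/1 should have.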

Start isaac_ros_dope using the launch file:

ros2 launch isaac_ros_dope isaac_ros_dope_triton.launch.py model_name:=Ketchup model_repository_paths:=['/tmp/models'] input_binding_names:=['INPUT__0'] output_binding_names:=['OUTPUT__0'] object_name:=Ketchup

Note

object_name should correspond to one of the objects listed in the DOPE configuration file, and the specified model should be a DOPE model that is trained for that specific object.

Open another terminal, and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Then, play the ROS bag:

ros2 bag play -l src/isaac_ros_pose_estimation/resources/rosbags/dope_rosbag/

Open a third terminal and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

You should now be able to get the poses of the objects in the images through ros2 topic echo:

ros2 topic echo /poses
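For programmatic access instead of ros2 topic echo, a small rclpy listener is one option. The following is a sketch under stated assumptions, not code from the Isaac ROS packages: it assumes /poses carries geometry_msgs/msg/PoseArray messages (the type selected in RViz2 later in this tutorial), that a queue depth of 10 with default QoS matches the publisher, and the node name pose_listener and the helper summarize_pose are hypothetical. The ROS 2 imports are placed inside main() so the formatting helper can be used without a ROS installation.

```python
def summarize_pose(position, orientation) -> str:
    """Format one pose; arguments are (x, y, z) and (x, y, z, w) tuples."""
    px, py, pz = position
    qx, qy, qz, qw = orientation
    return (
        "pos=({:.3f}, {:.3f}, {:.3f}) ".format(px, py, pz)
        + "quat=({:.3f}, {:.3f}, {:.3f}, {:.3f})".format(qx, qy, qz, qw)
    )


def main() -> None:
    # Imported here so that summarize_pose() stays usable outside the container.
    import rclpy
    from geometry_msgs.msg import PoseArray
    from rclpy.node import Node

    rclpy.init()
    node = Node("pose_listener")  # hypothetical node name

    def on_poses(msg) -> None:
        # Log every pose in the received PoseArray.
        for i, pose in enumerate(msg.poses):
            p, q = pose.position, pose.orientation
            line = summarize_pose((p.x, p.y, p.z), (q.x, q.y, q.z, q.w))
            node.get_logger().info("[{}] {}".format(i, line))

    # Assumption: depth 10 with default QoS is compatible with the publisher.
    node.create_subscription(PoseArray, "/poses", on_poses, 10)
    try:
        rclpy.spin(node)
    except KeyboardInterrupt:
        pass
    finally:
        node.destroy_node()
        if rclpy.ok():
            rclpy.shutdown()
```

Calling main() from a Python session inside the Docker container, while the launch file and the rosbag are running, should log one line per detected pose. If nothing arrives, the QoS settings are the first assumption to revisit.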

Note

We are echoing

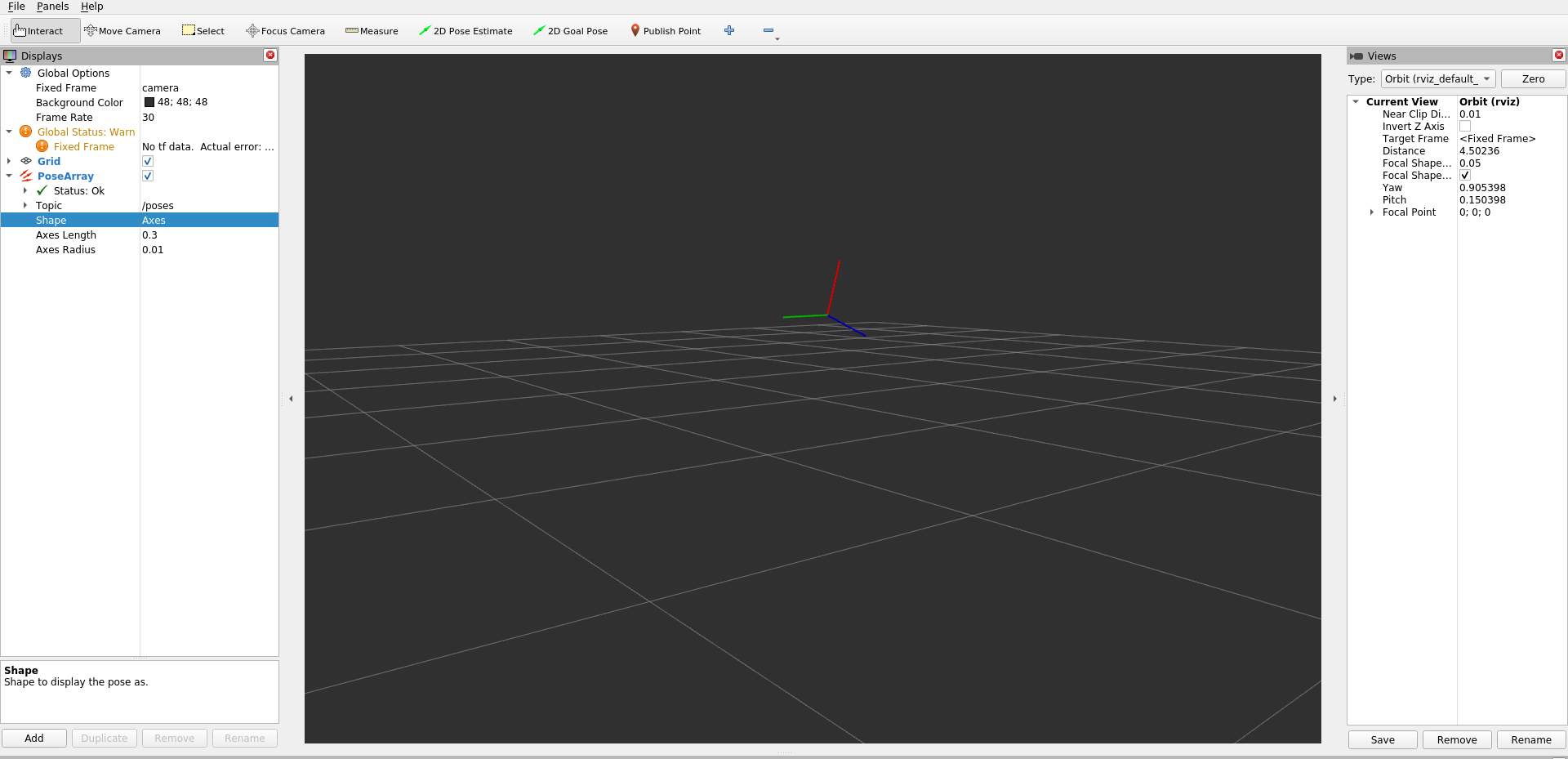

/posesbecause we remapped the original topic/dope/pose_arraytoposesin the launch file.Now visualize the pose array in RViz2:

rviz2

Then click on the Add button, select By topic and choose PoseArray under /poses. Finally, change the display to show axes by updating Shape to be Axes, as shown in the screenshot above. Make sure to update the Fixed Frame to tf_camera.

Note

For best results, crop/resize input images to the same dimensions your DNN model is expecting.
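To make the crop/resize advice concrete, the arithmetic for one common strategy (scale the image until it covers the target size, then center-crop) can be sketched as below. This is only an illustration of the numbers involved; the function name resize_and_center_crop is hypothetical, the 640 x 480 default is the fixed DOPE input size stated earlier, and the actual resizing would be done by image-processing nodes rather than this code.

```python
from typing import Tuple


def resize_and_center_crop(src_w: int, src_h: int,
                           dst_w: int = 640,
                           dst_h: int = 480) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Return ((resized_w, resized_h), (crop_x, crop_y)).

    The image is scaled uniformly until it covers dst_w x dst_h, then a
    dst_w x dst_h window is cut from the center at offset (crop_x, crop_y).
    """
    # Use the larger of the two ratios so both target dimensions are covered.
    scale = max(dst_w / src_w, dst_h / src_h)
    # Guard against rounding below the target size.
    resized_w = max(dst_w, round(src_w * scale))
    resized_h = max(dst_h, round(src_h * scale))
    crop_x = (resized_w - dst_w) // 2
    crop_y = (resized_h - dst_h) // 2
    return (resized_w, resized_h), (crop_x, crop_y)
```

For a 1920 x 1080 source this gives a resize to 853 x 480 followed by a crop starting 106 pixels from the left edge; a 1280 x 960 source only needs a resize to 640 x 480 with no crop.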