Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1 continues to be available on the release-2.1 branches of the Isaac ROS GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

isaac_ros_unet

Source code on GitHub.

Quickstart

Set up your development environment by following the instructions here.

Clone isaac_ros_common and this repository under ${ISAAC_ROS_WS}/src:

cd ${ISAAC_ROS_WS}/src

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_image_segmentation.git

Pull down a ROS Bag of sample data:

cd ${ISAAC_ROS_WS}/src/isaac_ros_image_segmentation && \
  git lfs pull -X "" -I "resources/rosbags/"

Launch the Docker container using the run_dev.sh script:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Install this package’s dependencies.

sudo apt-get install -y ros-humble-isaac-ros-unet ros-humble-isaac-ros-triton ros-humble-isaac-ros-dnn-image-encoder

Download the PeopleSemSegNet ShuffleSeg ETLT file and the int8 inference mode cache file:

mkdir -p /tmp/models/peoplesemsegnet_shuffleseg/1 && \
  cd /tmp/models/peoplesemsegnet_shuffleseg && \
  wget https://api.ngc.nvidia.com/v2/models/nvidia/tao/peoplesemsegnet/versions/deployable_shuffleseg_unet_v1.0/files/peoplesemsegnet_shuffleseg_etlt.etlt && \
  wget https://api.ngc.nvidia.com/v2/models/nvidia/tao/peoplesemsegnet/versions/deployable_shuffleseg_unet_v1.0/files/peoplesemsegnet_shuffleseg_cache.txt

Convert the ETLT file to a TensorRT plan file:

/opt/nvidia/tao/tao-converter -k tlt_encode -d 3,544,960 -p input_2:0,1x3x544x960,1x3x544x960,1x3x544x960 -t int8 -c peoplesemsegnet_shuffleseg_cache.txt -e /tmp/models/peoplesemsegnet_shuffleseg/1/model.plan -o argmax_1 peoplesemsegnet_shuffleseg_etlt.etlt
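
The input shape appears twice in the command above: once as C,H,W after -d and three times as NxCxHxW after -p. The following Python sketch rebuilds the same argument list from a single shape so the two stay consistent if the input size changes. The function name tao_converter_args is hypothetical, and the flag roles noted in the comments are an interpretation of the values in the command, not a statement of the tool's full interface.

```python
from typing import List


def tao_converter_args(channels: int, height: int, width: int, batch: int = 1) -> List[str]:
    """Build the Quickstart tao-converter arguments for one input shape."""
    dims = f"{channels},{height},{width}"            # value passed to -d (C,H,W)
    shape = f"{batch}x{channels}x{height}x{width}"   # value reused in -p (NxCxHxW)
    return [
        "/opt/nvidia/tao/tao-converter",
        "-k", "tlt_encode",
        "-d", dims,
        # Assumed reading: binding name followed by three identical shapes.
        "-p", f"input_2:0,{shape},{shape},{shape}",
        "-t", "int8",
        "-c", "peoplesemsegnet_shuffleseg_cache.txt",
        "-e", "/tmp/models/peoplesemsegnet_shuffleseg/1/model.plan",
        "-o", "argmax_1",
        "peoplesemsegnet_shuffleseg_etlt.etlt",
    ]


# Prints the same command line as the Quickstart step for a 3x544x960 input.
print(" ".join(tao_converter_args(3, 544, 960)))
```

The list form can be passed directly to subprocess.run if the conversion is scripted.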

Create a file called /tmp/models/peoplesemsegnet_shuffleseg/config.pbtxt by copying the sample Triton config file:

cp /workspaces/isaac_ros-dev/src/isaac_ros_image_segmentation/resources/peoplesemsegnet_shuffleseg_config.pbtxt /tmp/models/peoplesemsegnet_shuffleseg/config.pbtxt
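
After the conversion and copy steps, the model directory under /tmp/models should hold config.pbtxt and 1/model.plan. The following Python sketch checks for both files before launching. The helper name missing_model_files is hypothetical, and the version directory 1 is simply taken from the paths used in the commands above.

```python
from pathlib import Path
from typing import List


def missing_model_files(repository: str, model_name: str, version: str = "1") -> List[str]:
    """Return the expected model files that are absent from the repository."""
    model_dir = Path(repository) / model_name
    expected = [
        model_dir / "config.pbtxt",          # copied from the sample Triton config
        model_dir / version / "model.plan",  # written by the tao-converter step
    ]
    return [str(path) for path in expected if not path.is_file()]


if __name__ == "__main__":
    missing = missing_model_files("/tmp/models", "peoplesemsegnet_shuffleseg")
    if missing:
        print("Missing files:", *missing, sep="\n  ")
    else:
        print("Model repository layout looks complete.")
```

An empty result means both files from the previous steps are in place.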

Run the following launch file to spin up a demo of this package:

ros2 launch isaac_ros_unet isaac_ros_unet_triton.launch.py model_name:=peoplesemsegnet_shuffleseg model_repository_paths:=['/tmp/models'] input_binding_names:=['input_2:0'] output_binding_names:=['argmax_1'] network_output_type:='argmax' input_image_width:=1200 input_image_height:=632

Then open another terminal, and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Then, play the ROS bag:

ros2 bag play -l src/isaac_ros_image_segmentation/resources/rosbags/unet_sample_data/

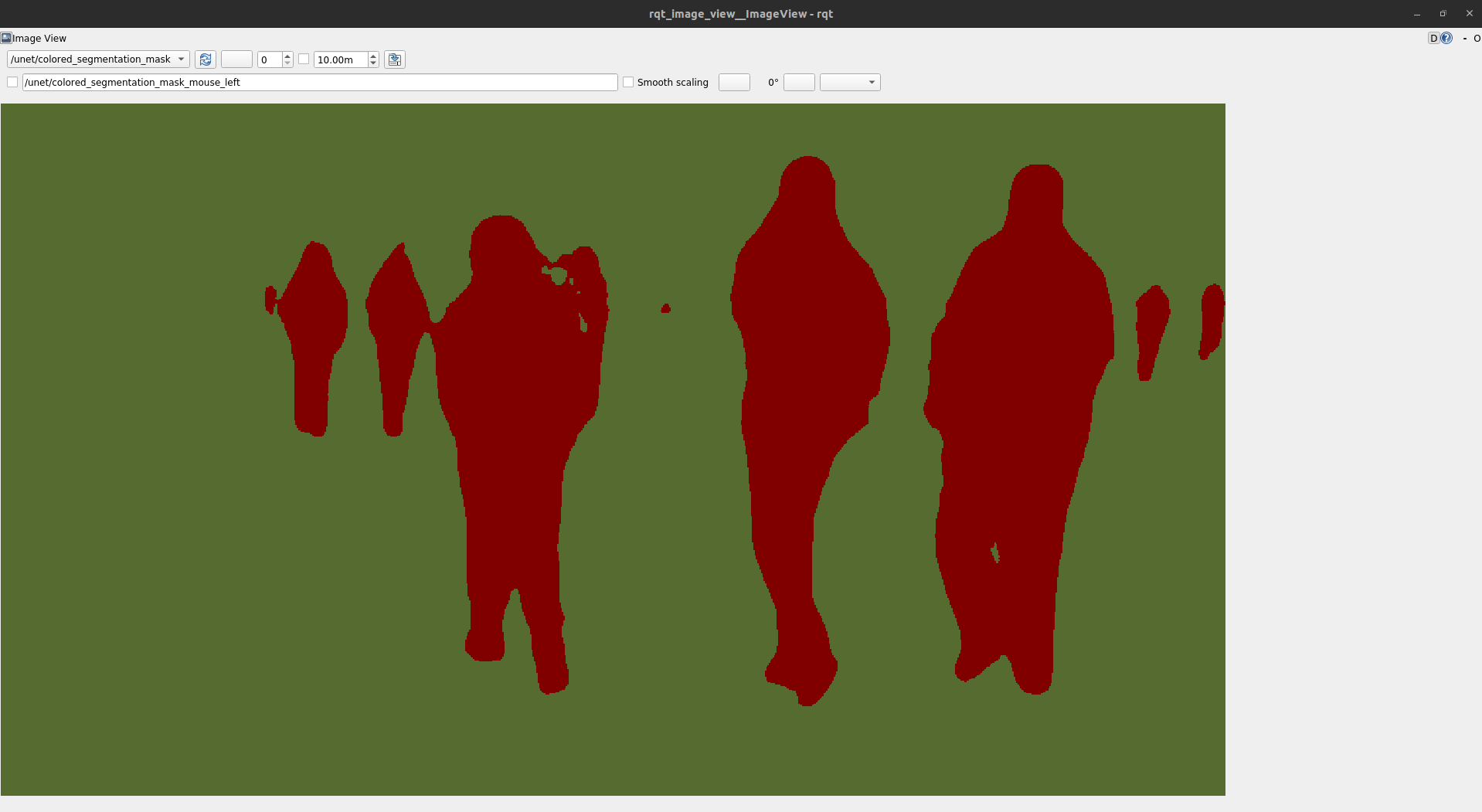

Visualize and validate the output of the package with rqt_image_view. In a third terminal, enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh

Then launch rqt_image_view:

ros2 run rqt_image_view rqt_image_view

Then, inside the rqt_image_view GUI, change the topic to /unet/colored_segmentation_mask to view a colorized segmentation mask.

Try More Examples

To continue your exploration, check out the following suggested examples:

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, please check here.

API

Usage

Three launch files are provided to use this package. The first launches isaac_ros_tensor_rt and the second launches isaac_ros_triton, each along with the necessary components to perform encoding on images and decoding of U-Net’s output. The third launches an Argus-compatible camera with a rectification node, along with the components found in isaac_ros_unet_triton.launch.py.

A more comprehensive tutorial featuring the third launch file can be found here.

Warning

For your specific application, these launch files may need to be modified. Please consult the available components to see the configurable parameters.

| Launch File | Components Used |
|---|---|
| | |
| | |
| | ArgusMonoNode, RectifyNode, DnnImageEncoderNode, TritonNode, UNetDecoderNode |

UNetDecoderNode

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
| | | | The image encoding of the colored segmentation mask. Supported values: |
| | | | Vector of integers where each element represents the RGB color hex code for the corresponding class label. The number of elements should equal the number of classes. E.g. |
| | | | The type of output that the network provides. Supported values: |
| | | | The width of the segmentation mask. |
| | | | The height of the segmentation mask. |
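
The color palette parameter is described above as a vector of integers, one RGB hex code per class label. The following Python sketch shows how such a value could be split into color channels and applied to a small mask. It assumes the 0xRRGGBB byte order; the function names and the two-color palette are hypothetical and not taken from the package.

```python
from typing import List, Tuple

Rgb = Tuple[int, int, int]


def hex_to_rgb(color: int) -> Rgb:
    """Split a 0xRRGGBB integer into its red, green and blue bytes."""
    return (color >> 16) & 0xFF, (color >> 8) & 0xFF, color & 0xFF


def colorize(mask: List[List[int]], palette: List[int]) -> List[List[Rgb]]:
    """Map each class label in a 2D mask to the color at that palette index."""
    return [[hex_to_rgb(palette[label]) for label in row] for row in mask]


# Hypothetical two-class palette: class 0 is black, class 1 is orange.
palette = [0x000000, 0xFF8000]
mask = [[0, 1], [1, 0]]
print(colorize(mask, palette))
```

Because the palette is indexed by class label, it needs one entry per class, which matches the requirement that the number of elements equal the number of classes.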

Warning

Note: The model output should be NCHW or NHWC. In this context, the C refers to the class.

Note: For the network_output_type parameter, the softmax and sigmoid options expect a single 32-bit floating point tensor. For the argmax option, a single signed 32-bit integer tensor is expected.

Note: Models with more than 255 classes are currently not supported. If a class label greater than 255 is detected, it will be downcast to 255 in the raw segmentation mask.
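
The notes above can be illustrated with a small Python sketch of how per-pixel class scores relate to the labels stored in a mono8 mask. It is not the node's implementation. It assumes that a floating point output is reduced to the highest-scoring class index and that "downcast to 255" means clamping; the sigmoid case is not covered. Both function names are hypothetical.

```python
from typing import List, Sequence

MAX_LABEL = 255  # largest value a mono8 pixel can hold


def label_from_scores(scores: Sequence[float]) -> int:
    """Return the index of the highest score among one pixel's C class scores."""
    return max(range(len(scores)), key=lambda c: scores[c])


def to_mono8(labels: List[int]) -> List[int]:
    """Clamp integer class labels so that anything above 255 becomes 255."""
    return [min(label, MAX_LABEL) for label in labels]


# One pixel of a float tensor with three classes: class 1 scores highest.
print(label_from_scores([0.1, 0.7, 0.2]))
# Integer labels as an argmax-style output would carry them.
print(to_mono8([0, 3, 300]))
```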

| ROS Topic | Interface | Description |
|---|---|---|
| | | The tensor that contains raw probabilities for every class in each pixel. |

Warning

All input images are required to have height and width that are both an even number of pixels.
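
A quick way to check this requirement before launching is sketched below in Python. The function name has_even_dimensions is hypothetical; the first pair of values is the input_image_width and input_image_height used in the Quickstart launch command.

```python
def has_even_dimensions(width: int, height: int) -> bool:
    """Return True when both image dimensions are an even number of pixels."""
    return width % 2 == 0 and height % 2 == 0


print(has_even_dimensions(1200, 632))  # Quickstart values: both even
print(has_even_dimensions(1201, 632))  # odd width: does not meet the requirement
```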

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
| | | The raw segmentation mask, encoded in mono8. Each pixel represents a class label. |
| | | The colored segmentation mask. The color palette is user specified by the |