Attention

As of June 30, 2025, the Isaac ROS Buildfarm for Isaac ROS 2.1 on Ubuntu 20.04 Focal is no longer supported.

Due to an isolated infrastructure event, all ROS 2 Humble Debian packages that were previously built for Ubuntu 20.04 are no longer available in the Isaac Apt Repository. All artifacts for Isaac ROS 3.0 and later are built and maintained with a more robust pipeline.

Users are encouraged to migrate to the latest version of Isaac ROS. The source code for Isaac ROS 2.1

continues to be available on the release-2.1 branches of the Isaac ROS

GitHub repositories.

The original documentation for Isaac ROS 2.1 is preserved below.

isaac_ros_detectnet

Source code on GitHub.

Quickstart

Set up your development environment by following the instructions here.

Clone

isaac_ros_commonand this repository under${ISAAC_ROS_WS}/src.cd ${ISAAC_ROS_WS}/src

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git

git clone https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_object_detection.git

Pull down a ROS Bag of sample data:

cd ${ISAAC_ROS_WS}/src/isaac_ros_object_detection/isaac_ros_detectnet && \ git lfs pull -X "" -I "resources/rosbags"

Launch the Docker container using the

run_dev.shscript:cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Install this package’s dependencies.

sudo apt-get install -y ros-humble-isaac-ros-detectnet ros-humble-isaac-ros-triton ros-humble-isaac-ros-dnn-image-encoder

Run the quickstart setup script which will download the PeopleNet Model from NVIDIA GPU Cloud(NGC)

cd /workspaces/isaac_ros-dev/src/isaac_ros_object_detection/isaac_ros_detectnet && \ ./scripts/setup_model.sh --height 632 --width 1200 --config-file resources/quickstart_config.pbtxt

Run the following launch file to spin up a demo of this package:

cd /workspaces/isaac_ros-dev && \ ros2 launch isaac_ros_detectnet isaac_ros_detectnet_quickstart.launch.py

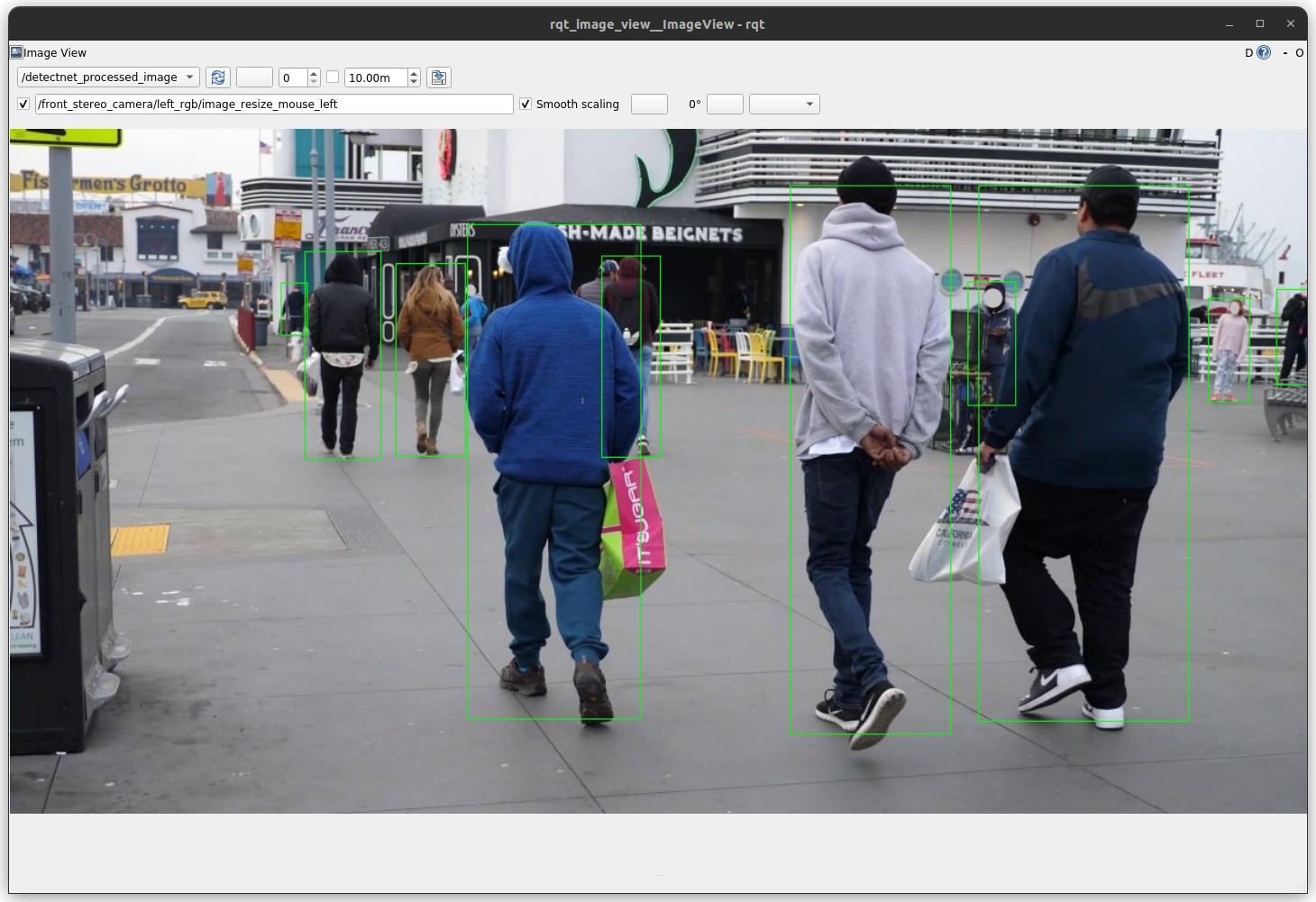

Visualize and validate the output of the package in the

rqt_image_viewwindow. After about a minute, your output should look like this:

Try More Examples

To continue your exploration, check out the following suggested examples:

This package only supports models based on the Detectnet_v2 architecture. Some of the supported DetectNet models from NGC:

Model Name |

Use Case |

|---|---|

People counting with a mobile robot |

|

People counting, heatmap generation, social distancing |

|

Detect and track cars |

|

Identify objects from a moving object |

|

Detect faces in a dark environment with IR camera |

To learn how to use this models, click here.

ROS 2 Graph Configuration

To run the DetectNet object detection inference, the following ROS 2 nodes must be set up and running:

Isaac ROS DNN Image encoder: This takes an image message and converts it to a tensor (

TensorListisaac_ros_tensor_list_interfaces/TensorList) that can be processed by the network.Isaac ROS DNN Inference - Triton: This executes the DetectNet network and takes, as input, the tensor from the DNN Image Encoder.

Note

The Isaac ROS TensorRT package is not able to perform inference with DetectNet models at this time.

The output is a TensorList message containing the encoded detections. Use the parameters

model_nameandmodel_repository_pathsto point to the model folder and set the model name. The.planfile should be located at$model_repository_path/$model_name/1/model.planIsaac ROS Detectnet Decoder: This node takes the TensorList with encoded detections as input, and outputs

Detection2DArraymessages for each frame. See the following section for the parameters.

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, see troubleshooting.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, see troubleshooting deeplearning.

API

Usage

ros2 launch isaac_ros_detectnet isaac_ros_detectnet.launch.py label_list:=<list of labels> enable_confidence_threshold:=<enable confidence thresholding> enable_bbox_area_threshold:=<enable bbox size thresholding> enable_dbscan_clustering:=<enable dbscan clustering> confidence_threshold:=<minimum confidence value> min_bbox_area:=<minimum bbox area value> dbscan_confidence_threshold:=<minimum confidence for dbscan algorithm> dbscan_eps:=<epsilon distance> dbscan_min_boxes:=<minimum returned boxes> dbscan_enable_athr_filter:=<area-to-hit-ratio filter> dbscan_threshold_athr:=<area-to-hit ratio threshold> dbscan_clustering_algorithm:=<choice of clustering algorithm> bounding_box_scale:=<bounding box normalization value> bounding_box_offset:=<XY offset for bounding box>

ROS Parameters

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

|

|

|

The list of labels. These are loaded from |

|

|

|

The min value of confidence used to threshold detections before clustering |

|

|

|

The min value of bounding box area used to threshold detections before clustering |

|

|

|

Holds the epsilon to control merging of overlapping boxes. Refer to OpenCV |

|

|

|

Holds the epsilon to control merging of overlapping boxes. Refer to OpenCV |

|

|

|

The minimum number of boxes to return. |

|

|

|

Enables the area-to-hit ratio (ATHR) filter. The ATHR is calculated as: ATHR = sqrt(clusterArea) / nObjectsInCluster. |

|

|

|

The |

|

|

|

The clustering algorithm selection. ( |

|

|

|

The scale parameter, which should match the training configuration. |

|

|

|

Bounding box offset for both X and Y dimensions. |

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

The tensor that represents the inferred aligned bounding boxes. |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

Aligned image bounding boxes with detection class. |