Bi3D

The Bi3D DNN model performs stereo-depth estimation using binary classification for depth segmentation. Depth segmentation can be used to determine whether an obstacle is within a proximity field and to avoid collisions with obstacles during navigation.

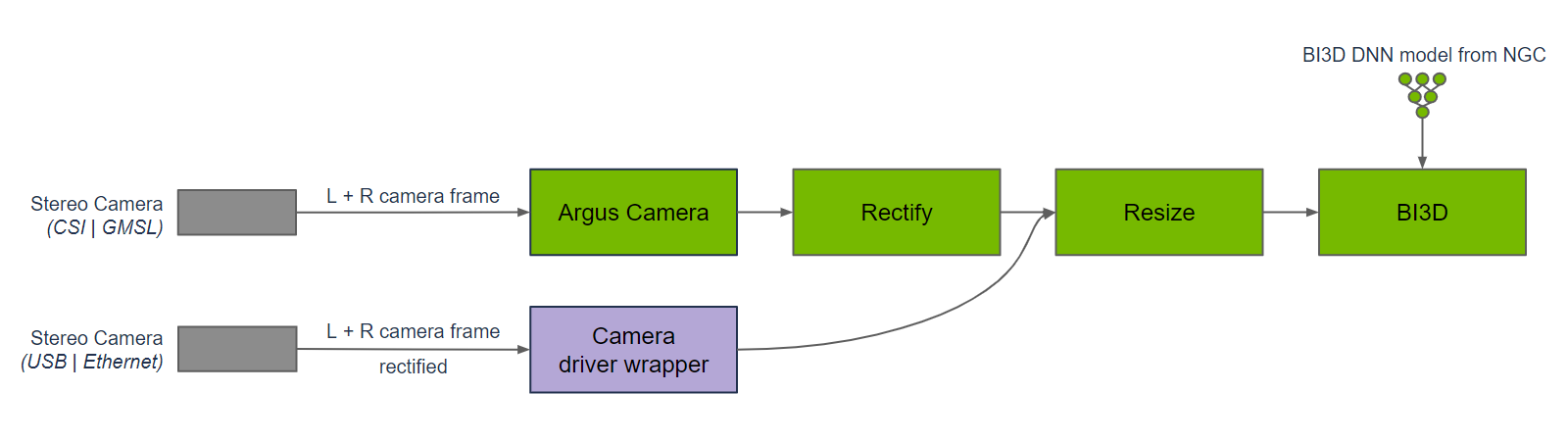

Bi3D runs in a graph of nodes and produces depth segmentation from a time-synchronized pair of left and right stereo images. Input images must be rectified and resized to the model's input resolution while preserving their aspect ratio, which may require a crop before the resize. The DNN encode, DNN inference, and DNN decode stages are all part of the Bi3D node. Inference is performed with TensorRT, because the Bi3D DNN model is designed to use optimizations that TensorRT supports.
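The crop step can be sketched as follows. This is a minimal illustration, not the Isaac ROS implementation: the function name `center_crop_box` is hypothetical, the crop is assumed to be centered, and the 960x576 target used in the example is an assumed input resolution chosen for illustration. The function returns the largest centered region of the source image whose aspect ratio matches the target, so that a later resize does not distort the image.

```python
def center_crop_box(src_w, src_h, dst_w, dst_h):
    """Return (x0, y0, crop_w, crop_h): the largest centered crop of a
    src_w x src_h image that has the aspect ratio dst_w : dst_h.

    Hypothetical helper for illustration; a centered crop is an assumption.
    """
    if min(src_w, src_h, dst_w, dst_h) <= 0:
        raise ValueError("all dimensions must be positive")

    # Compare aspect ratios by cross-multiplication to stay in integers.
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: keep full height, trim columns.
        crop_w = (src_h * dst_w) // dst_h
        crop_h = src_h
    else:
        # Source is taller than (or equal to) the target: trim rows.
        crop_w = src_w
        crop_h = (src_w * dst_h) // dst_w

    x0 = (src_w - crop_w) // 2
    y0 = (src_h - crop_h) // 2
    return x0, y0, crop_w, crop_h


# Example: a 1920x1200 camera image and an assumed 960x576 model input.
print(center_crop_box(1920, 1200, 960, 576))
```

The same crop box and resize should be applied to both the left and right images so that the pair stays rectified. Cropping shifts the principal point and resizing scales the focal length, so any camera intrinsics used downstream need the same adjustment.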

Compared to other stereo disparity functions, depth segmentation predicts whether an obstacle is within a proximity field and, at the same time, predicts freespace from the ground plane. Unlike the other stereo disparity functions in Isaac ROS, depth segmentation also runs on the NVIDIA DLA (deep learning accelerator), which is separate from and independent of the GPU. For more information on disparity, refer to Binocular disparity.
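To relate a proximity field to disparity, the standard rectified-stereo relation disparity = focal length × baseline / depth can be used. The sketch below assumes a rectified pinhole stereo pair; the function names and the camera values in the example are hypothetical and are not taken from the Bi3D package. It converts a proximity distance into a disparity threshold and treats a pixel as inside the proximity field when its disparity is at or above that threshold, since closer objects have larger disparity.

```python
def disparity_for_distance(focal_length_px, baseline_m, distance_m):
    """Disparity (in pixels) of a point at distance_m for a rectified
    stereo pair, using disparity = focal_length * baseline / depth."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * baseline_m / distance_m


def within_proximity(pixel_disparity, threshold_disparity):
    """Binary decision: True when the pixel is at or closer than the
    distance that corresponds to threshold_disparity."""
    return pixel_disparity >= threshold_disparity


# Hypothetical camera: 500 px focal length, 0.25 m baseline.
# Proximity field boundary at 2.0 m.
threshold = disparity_for_distance(500.0, 0.25, 2.0)
print(threshold)
print(within_proximity(80.0, threshold))  # larger disparity, so closer
print(within_proximity(30.0, threshold))  # smaller disparity, so farther
```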

Repositories and Packages

The Isaac ROS implementations of this technology are available here: