isaac_ros_bi3d

Source code on GitHub.

Quickstart

Set Up Development Environment

Set up your development environment by following the instructions in getting started.

Clone isaac_ros_common under ${ISAAC_ROS_WS}/src:

cd ${ISAAC_ROS_WS}/src && \
   git clone -b release-3.2 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git isaac_ros_common

(Optional) Install dependencies for any sensors you want to use by following the sensor-specific guides.

Note

We strongly recommend installing all sensor dependencies before starting any quickstarts. Some sensor dependencies require restarting the Isaac ROS Dev container during installation, which will interrupt the quickstart process.

Download Quickstart Assets

Download quickstart data from NGC:

Make sure required libraries are installed.

sudo apt-get install -y curl jq tar

Then, run these commands to download the asset from NGC:

NGC_ORG="nvidia"
NGC_TEAM="isaac"
PACKAGE_NAME="isaac_ros_bi3d"
NGC_RESOURCE="isaac_ros_bi3d_assets"
NGC_FILENAME="quickstart.tar.gz"
MAJOR_VERSION=3
MINOR_VERSION=2
VERSION_REQ_URL="https://catalog.ngc.nvidia.com/api/resources/versions?orgName=$NGC_ORG&teamName=$NGC_TEAM&name=$NGC_RESOURCE&isPublic=true&pageNumber=0&pageSize=100&sortOrder=CREATED_DATE_DESC"
AVAILABLE_VERSIONS=$(curl -s \
    -H "Accept: application/json" "$VERSION_REQ_URL")
LATEST_VERSION_ID=$(echo $AVAILABLE_VERSIONS | jq -r "
    .recipeVersions[]
    | .versionId as \$v
    | \$v | select(test(\"^\\\\d+\\\\.\\\\d+\\\\.\\\\d+$\"))
    | split(\".\") | {major: .[0]|tonumber, minor: .[1]|tonumber, patch: .[2]|tonumber}
    | select(.major == $MAJOR_VERSION and .minor <= $MINOR_VERSION)
    | \$v
    " | sort -V | tail -n 1
)
if [ -z "$LATEST_VERSION_ID" ]; then
    echo "No corresponding version found for Isaac ROS $MAJOR_VERSION.$MINOR_VERSION"
    echo "Found versions:"
    echo $AVAILABLE_VERSIONS | jq -r '.recipeVersions[].versionId'
else
    mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets && \
    FILE_REQ_URL="https://api.ngc.nvidia.com/v2/resources/$NGC_ORG/$NGC_TEAM/$NGC_RESOURCE/versions/$LATEST_VERSION_ID/files/$NGC_FILENAME" && \
    curl -LO --request GET "${FILE_REQ_URL}" && \
    tar -xf ${NGC_FILENAME} -C ${ISAAC_ROS_WS}/isaac_ros_assets && \
    rm ${NGC_FILENAME}
fi
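The version filter in the download script can be hard to read through the shell escaping. The following is a minimal Python sketch of the same selection rule: keep only plain x.y.z version IDs, require the major version to match, allow any minor version up to the requested one, and pick the highest remaining version. The function name and the sample version list are hypothetical and are not taken from NGC.

```python
import re

# Matches plain "x.y.z" version IDs, mirroring the jq test() filter.
SEMVER = re.compile(r"^\d+\.\d+\.\d+$")


def select_latest_version(version_ids, major, minor):
    """Return the highest x.y.z ID with a matching major and minor <= limit, or None."""
    candidates = []
    for version_id in version_ids:
        if not SEMVER.match(version_id):
            continue  # skip IDs that are not plain x.y.z
        parts = tuple(int(p) for p in version_id.split("."))
        if parts[0] == major and parts[1] <= minor:
            candidates.append((parts, version_id))
    if not candidates:
        return None
    # Comparing integer tuples orders versions the way `sort -V` does for x.y.z IDs.
    return max(candidates)[1]


# Hypothetical version list, for illustration only.
versions = ["3.0.0", "3.2.1", "3.10.0", "2.1.0", "3.1.4", "latest"]
print(select_latest_version(versions, major=3, minor=2))  # prints 3.2.1
```

A return value of None corresponds to the script's "No corresponding version found" branch.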

Download a pre-trained Bi3D model:

mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation && \
   cd ${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation && \
   wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/bi3d_proximity_segmentation/versions/2.0.0/files/featnet.onnx' && \
   wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/bi3d_proximity_segmentation/versions/2.0.0/files/segnet.onnx'

Build isaac_ros_bi3d

Launch the Docker container using the run_dev.sh script:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
   ./scripts/run_dev.sh

Install the prebuilt Debian package:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-bi3d

Alternatively, to build the package from source instead of installing the Debian package, clone this repository under ${ISAAC_ROS_WS}/src:

cd ${ISAAC_ROS_WS}/src && \
   git clone -b release-3.2 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_depth_segmentation.git isaac_ros_depth_segmentation

Launch the Docker container using the run_dev.sh script:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
   ./scripts/run_dev.sh

Use rosdep to install the package’s dependencies:

sudo apt-get update

rosdep update && rosdep install --from-paths ${ISAAC_ROS_WS}/src/isaac_ros_depth_segmentation/isaac_ros_bi3d --ignore-src -y

Build the package from source:

cd ${ISAAC_ROS_WS}/ && \
   colcon build --symlink-install --packages-up-to isaac_ros_bi3d --base-paths ${ISAAC_ROS_WS}/src/isaac_ros_depth_segmentation/isaac_ros_bi3d

Source the ROS workspace:

Note

Make sure to repeat this step in every terminal created inside the Docker container.

Since this package was built from source, the enclosing workspace must be sourced for ROS to be able to find the package’s contents.

source install/setup.bash

Run Launch File

Convert the .onnx model files to TensorRT engine plan files:

/usr/src/tensorrt/bin/trtexec --saveEngine=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.plan \
   --onnx=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.onnx --int8 &&
/usr/src/tensorrt/bin/trtexec --saveEngine=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.plan \
   --onnx=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.onnx --int8

Note

The engine plans generated using the x86_64 commands will also work on Jetson, but performance will be reduced.

On Jetson platforms, generate engine plans with DLA support enabled:

/usr/src/tensorrt/bin/trtexec \
   --saveEngine=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.plan \
   --onnx=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.onnx \
   --int8 --useDLACore=0 --allowGPUFallback &&
/usr/src/tensorrt/bin/trtexec \
   --saveEngine=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.plan \
   --onnx=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.onnx \
   --int8 --useDLACore=0 --allowGPUFallback

Continuing inside the container, install the following dependencies:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-examples

Run the following launch file to spin up a demo of this package:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
   launch_fragments:=bi3d \
   interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_bi3d/rosbag_quickstart_interface_specs.json \
   featnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.plan \
   segnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.plan \
   max_disparity_values:=10

Open a second terminal inside the Docker container:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
   ./scripts/run_dev.sh

Play the rosbag file to simulate image streams from the cameras:

ros2 bag play --loop ${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_bi3d/bi3dnode_rosbag

Ensure that you have already set up your RealSense camera using the RealSense setup tutorial. If you have not, please set up the sensor and then restart this quickstart from the beginning.

Continuing inside the Docker container, install dependencies:

sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-realsense

Continuing inside the Docker container, run the following launch file to spin up a demo of this package using a RealSense camera:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
   launch_fragments:=realsense_stereo_rect,bi3d \
   interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_bi3d/realsense_quickstart_interface_specs.json \
   featnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.plan \
   segnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.plan \
   max_disparity_values:=10

Ensure that you have already set up your Hawk camera using the Hawk setup tutorial. If you have not, please set up the sensor and then restart this quickstart from the beginning.

Continuing inside the Docker container, install dependencies:

sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-argus-camera ros-humble-isaac-ros-image-proc

Run the following launch file to spin up a demo of this package using a Hawk camera:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
   launch_fragments:=argus_stereo,resize_rectify_stereo,bi3d \
   interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_bi3d/hawk_quickstart_interface_specs.json \
   featnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.plan \
   segnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.plan \
   max_disparity_values:=10

Ensure that you have already set up your ZED camera using the ZED setup tutorial. If you have not, set up the sensor and then restart this quickstart from the beginning.

Continuing inside the Docker container, install dependencies:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-stereo-image-proc ros-humble-isaac-ros-zed

Run the following launch file to spin up a demo of this package using a ZED Camera:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
   launch_fragments:=zed_stereo_rect,bi3d \
   featnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/featnet.plan \
   segnet_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/bi3d_proximity_segmentation/segnet.plan \
   max_disparity_values:=10 \
   interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_bi3d/zed2_quickstart_interface_specs.json

Note

If you are using the ZED X series, replace zed2_quickstart_interface_specs.json with zedx_quickstart_interface_specs.json in the above command.
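The rosbag, RealSense, Hawk, and ZED launch commands in this section differ only in their launch_fragments value and interface specs file. As a rough sketch, the Python helper below assembles the argument list for each case so the differences are visible in one place. The dictionary keys (rosbag, realsense, hawk, zed2, zedx), the function name, and the example assets path are hypothetical labels chosen for illustration. The fragment names, file names, and launch arguments are the ones used in the commands above.

```python
import shlex

# sensor label (hypothetical) -> (launch_fragments, interface specs file name)
SENSOR_CONFIGS = {
    "rosbag": ("bi3d", "rosbag_quickstart_interface_specs.json"),
    "realsense": ("realsense_stereo_rect,bi3d", "realsense_quickstart_interface_specs.json"),
    "hawk": ("argus_stereo,resize_rectify_stereo,bi3d", "hawk_quickstart_interface_specs.json"),
    "zed2": ("zed_stereo_rect,bi3d", "zed2_quickstart_interface_specs.json"),
    "zedx": ("zed_stereo_rect,bi3d", "zedx_quickstart_interface_specs.json"),
}


def launch_args(sensor, assets_dir, max_disparity_values=10):
    """Build the ros2 launch argument list for one of the quickstart variants."""
    fragments, specs_file = SENSOR_CONFIGS[sensor]
    models_dir = f"{assets_dir}/models/bi3d_proximity_segmentation"
    return [
        "ros2", "launch", "isaac_ros_examples", "isaac_ros_examples.launch.py",
        f"launch_fragments:={fragments}",
        f"interface_specs_file:={assets_dir}/isaac_ros_bi3d/{specs_file}",
        f"featnet_engine_file_path:={models_dir}/featnet.plan",
        f"segnet_engine_file_path:={models_dir}/segnet.plan",
        f"max_disparity_values:={max_disparity_values}",
    ]


# Example assets path is an assumption; substitute ${ISAAC_ROS_WS}/isaac_ros_assets.
print(shlex.join(launch_args("zedx", "/tmp/isaac_ros_assets")))
```

The returned list can be printed for copy and paste, as here, or passed to subprocess.run inside the container.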

Visualize Results

Open two new terminals inside the Docker container for visualization:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
   ./scripts/run_dev.sh

Visualize the output.

Start disparity visualizer:

ros2 run isaac_ros_bi3d isaac_ros_bi3d_visualizer.py --max_disparity_value 30

Start image visualizer:

ros2 run image_view image_view --ros-args -r image:=right/image_rect

Try More Examples

To continue your exploration, check out the following suggested examples:

Model Preparation

Download Pre-trained Models (.onnx) from NGC

The following steps show how to download pre-trained Bi3D DNN inference models.

The following model files must be downloaded to perform Bi3D inference. From File Browser on the Bi3D page, select the featnet.onnx and segnet.onnx model files in the FILE list and copy the wget command for each by clicking … in the ACTIONS column.

Run each of the copied commands in a terminal to download the ONNX model file, as shown in the example below:

wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/bi3d_proximity_segmentation/versions/2.0.0/files/featnet.onnx' && \
   wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/bi3d_proximity_segmentation/versions/2.0.0/files/segnet.onnx'

Bi3D Featnet is a network that extracts features from stereo images.

Bi3D Segnet is an encoder-decoder segmentation network that generates a binary segmentation confidence map.

Convert the Pre-trained Models (.onnx) to TensorRT Engine Plans

trtexec converts pre-trained models (.onnx) to TensorRT engine plans. It is included in the Isaac ROS Docker container at /usr/src/tensorrt/bin/trtexec.

Tip: Use /usr/src/tensorrt/bin/trtexec -h for more information on using the tool.

Generating Engine Plans for Jetson

/usr/src/tensorrt/bin/trtexec --onnx=<PATH_TO_ONNX_MODEL_FILE> --saveEngine=<PATH_TO_WHERE_TO_SAVE_ENGINE_PLAN> --useDLACore=<SET_CORE_TO_ENABLE_DLA> --int8 --allowGPUFallback

Generating Engine Plans for x86_64

/usr/src/tensorrt/bin/trtexec --onnx=<PATH_TO_ONNX_MODEL_FILE> --saveEngine=<PATH_TO_WHERE_TO_SAVE_ENGINE_PLAN> --int8
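The two command templates above differ only in the DLA flags. As an illustrative sketch, the Python helper below builds the trtexec argument list for either platform, using only the flags shown in this section. The function name, its parameters, and the example model directory are hypothetical. The sketch only prints the commands and does not run trtexec.

```python
import shlex

TRTEXEC = "/usr/src/tensorrt/bin/trtexec"


def trtexec_args(onnx_path, engine_path, dla_core=None):
    """Return the trtexec arguments for an INT8 engine plan.

    Pass dla_core (for example 0) on Jetson to add the DLA flags;
    leave it as None for the x86_64 form of the command.
    """
    args = [TRTEXEC, f"--onnx={onnx_path}", f"--saveEngine={engine_path}", "--int8"]
    if dla_core is not None:
        args += [f"--useDLACore={dla_core}", "--allowGPUFallback"]
    return args


# Hypothetical model directory, for illustration only.
model_dir = "/tmp/models/bi3d_proximity_segmentation"
for name in ("featnet", "segnet"):
    command = trtexec_args(f"{model_dir}/{name}.onnx", f"{model_dir}/{name}.plan", dla_core=0)
    print(shlex.join(command))
```

Each returned list can also be passed to subprocess.run(command, check=True) inside the container.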

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, please check here.

API

Usage

ros2 launch isaac_ros_bi3d isaac_ros_bi3d.launch.py featnet_engine_file_path:=<PATH_TO_FEATNET_ENGINE> \
   segnet_engine_file_path:=<PATH_TO_SEGNET_ENGINE> \
   max_disparity_values:=<MAX_NUMBER_OF_DISPARITY_VALUES_USED> \
   image_height:=<INPUT_IMAGE_HEIGHT> \
   image_width:=<INPUT_IMAGE_WIDTH>

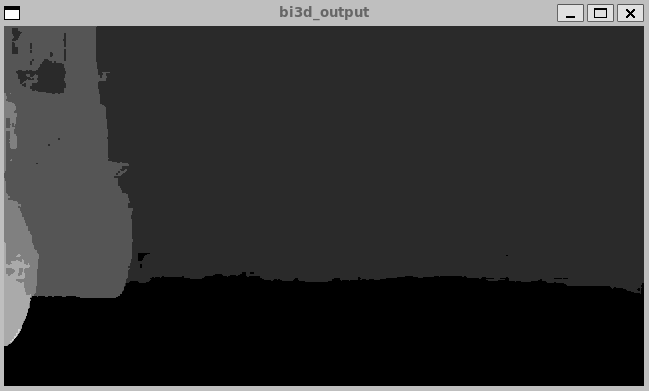

Interpreting the Output

The isaac_ros_bi3d package outputs a disparity image given a list of disparity values (planes). Each pixel of the output image that is not freespace is set to the value of the closest disparity plane (largest disparity value) that the pixel is deemed to be in front of. Each pixel that is predicted to be freespace is set to 0 (the furthest disparity/smallest disparity value). Freespace is defined as the region from the bottom of the image up to the first pixel that is not the ground plane. To find the boundary between freespace and not-freespace, start from the bottom of the image and, per column, find the first pixel that is not the ground plane. In the example image, freespace is shown in black.

The prediction of freespace eliminates the need for ground plane removal in the output image as a post-processing step, which is often applied to the output of other stereo disparity functions. The output of isaac_ros_bi3d can be used to check whether any pixels within the image breach a given proximity field by checking the values of all pixels. If a pixel value (disparity value) is larger than the disparity plane defining the proximity field, then it has breached that field. If a pixel does not breach any of the provided disparity planes, it is assigned a value of 0.
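The two checks described in this section can be sketched in plain Python on a small nested list standing in for the output disparity image. This is an illustration of the rules as stated above, not the package's implementation. The function names and the sample image are hypothetical. The boundary scan treats a value of 0 as freespace, which is an approximation: pixels that breach no disparity plane are also 0 in the output.

```python
def breaching_pixels(disparity, plane):
    """Return (row, col) for pixels whose value is larger than the given plane."""
    return [
        (r, c)
        for r, row in enumerate(disparity)
        for c, value in enumerate(row)
        if value > plane  # strictly larger, following the rule stated in the text
    ]


def freespace_boundary(disparity):
    """Per column, scan upward from the bottom row and return the row index of
    the first non-zero pixel, or None if the whole column is zero."""
    height = len(disparity)
    width = len(disparity[0]) if height else 0
    boundary = []
    for col in range(width):
        hit = None
        for row in range(height - 1, -1, -1):
            if disparity[row][col] != 0:
                hit = row
                break
        boundary.append(hit)
    return boundary


# Hypothetical 4x4 output image; row 0 is the top of the image.
image = [
    [0, 18, 0, 0],
    [0, 18, 12, 0],
    [0, 0, 12, 0],
    [0, 0, 0, 0],
]
print(breaching_pixels(image, 12))  # prints [(0, 1), (1, 1)]
print(freespace_boundary(image))    # prints [None, 1, 2, None]
```

For real images, the same comparisons are usually written as array operations, for example with NumPy, rather than Python loops.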

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
| | | | The height of the input image |
| | | | The width of the input image |
| | | | The path to the Bi3D Featnet engine plan |
| | | | The path to the Bi3D Segnet engine plan |
| | | | The maximum number of disparity values used for Bi3D inference. Isaac ROS Depth Segmentation supports up to a theoretical maximum of 64 disparity values during inference. However, the maximum length of disparities that a user may run in practice is dependent on the user’s hardware and availability of memory. |
| | | | The specific threshold disparity values used for Bi3D inference. The number of disparity values must not exceed the value set in the |
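The two constraints described above (at most 64 disparity values in theory, and no more threshold values than the configured maximum) can be checked before launching the node. The Python sketch below is one way to do that. The function and argument names are illustrative and are not the node's API. max_disparity_values mirrors the launch argument of the same name in the Usage section.

```python
# Theoretical maximum stated in the parameter description above.
MAX_SUPPORTED_DISPARITY_VALUES = 64


def validate_disparity_values(disparity_values, max_disparity_values):
    """Return the values as a list if they satisfy both limits, else raise ValueError."""
    if not 0 < max_disparity_values <= MAX_SUPPORTED_DISPARITY_VALUES:
        raise ValueError(
            f"max_disparity_values must be between 1 and "
            f"{MAX_SUPPORTED_DISPARITY_VALUES}, got {max_disparity_values}"
        )
    values = list(disparity_values)
    if len(values) > max_disparity_values:
        raise ValueError(
            f"{len(values)} disparity values given, "
            f"but max_disparity_values is {max_disparity_values}"
        )
    return values


# Hypothetical threshold planes, for illustration only.
print(validate_disparity_values([10, 18, 26], max_disparity_values=10))  # prints [10, 18, 26]
```

Passing this check does not guarantee that inference fits in memory, since the practical limit depends on the hardware.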

ROS Topics Subscribed

| ROS Topic | Interface | Description |
|---|---|---|
| | | |
| | | |
| | | Focal length populated in the Bi3D output disparity is extracted from this topic. |
| | | Baseline populated in the Bi3D output disparity is extracted from this topic. |

Note

The images on the input topics (left_image_bi3d and right_image_bi3d) should be color images in rgb8 format.

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
| | | The depth segmentation of Bi3D given as a disparity image. For pixels not deemed freespace, their value is set to the closest (largest) disparity plane that is breached. A pixel value is set to 0 if it does not breach any disparity plane or if it is freespace. |
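Because the output carries the focal length and baseline taken from the camera info topics, a disparity plane can be related to a metric distance with the standard rectified-stereo relation depth = focal_length * baseline / disparity. The Python sketch below applies that relation. The function name and the numeric values are hypothetical and do not describe any specific camera.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Convert a disparity in pixels to a depth in meters.

    Returns None for a disparity of 0, which in the Bi3D output marks
    freespace or a pixel that breaches no plane rather than a distance.
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera values, for illustration only.
focal_length_px = 480.0
baseline_m = 0.125
for plane in (10, 20, 30):
    print(plane, disparity_to_depth(plane, focal_length_px, baseline_m))
# prints:
# 10 6.0
# 20 3.0
# 30 2.0
```

Larger disparity planes map to shorter distances, which is why the closest plane is the one with the largest disparity value.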