Isaac Manipulator Reference Architecture

What is NVIDIA Isaac Manipulator?

NVIDIA Isaac Manipulator is a collection of GPU-accelerated libraries and Isaac ROS packages for perception-driven manipulation using robotic arms. The targeted use cases range from automated inspection to pick-and-place for logistics and manufacturing. Isaac Manipulator provides capabilities for object detection, object pose estimation, and time-optimal, collision-free motion generation using cuMotion. cuMotion is a GPU-accelerated library developed by NVIDIA for computing time-optimal, minimal-jerk trajectories for serial robot arms. These capabilities are modular and extensible, so developers can integrate any combination of packages into their existing pipelines.

What is this Reference Architecture?

This reference architecture explains the components that make up Isaac Manipulator. It has been tested and validated on NVIDIA Jetson Orin platforms and on NVIDIA GPUs of the Ampere architecture or newer.

Who is this document for?

This document is intended for engineers in the robotic platform ecosystem, including those at Original Equipment Manufacturers (OEMs), Systems Integrators (SIs), and Independent Software Vendors (ISVs). It provides guidance on using the reference architecture as a starting point for developing robust and performant manipulation solutions for robotic arms.

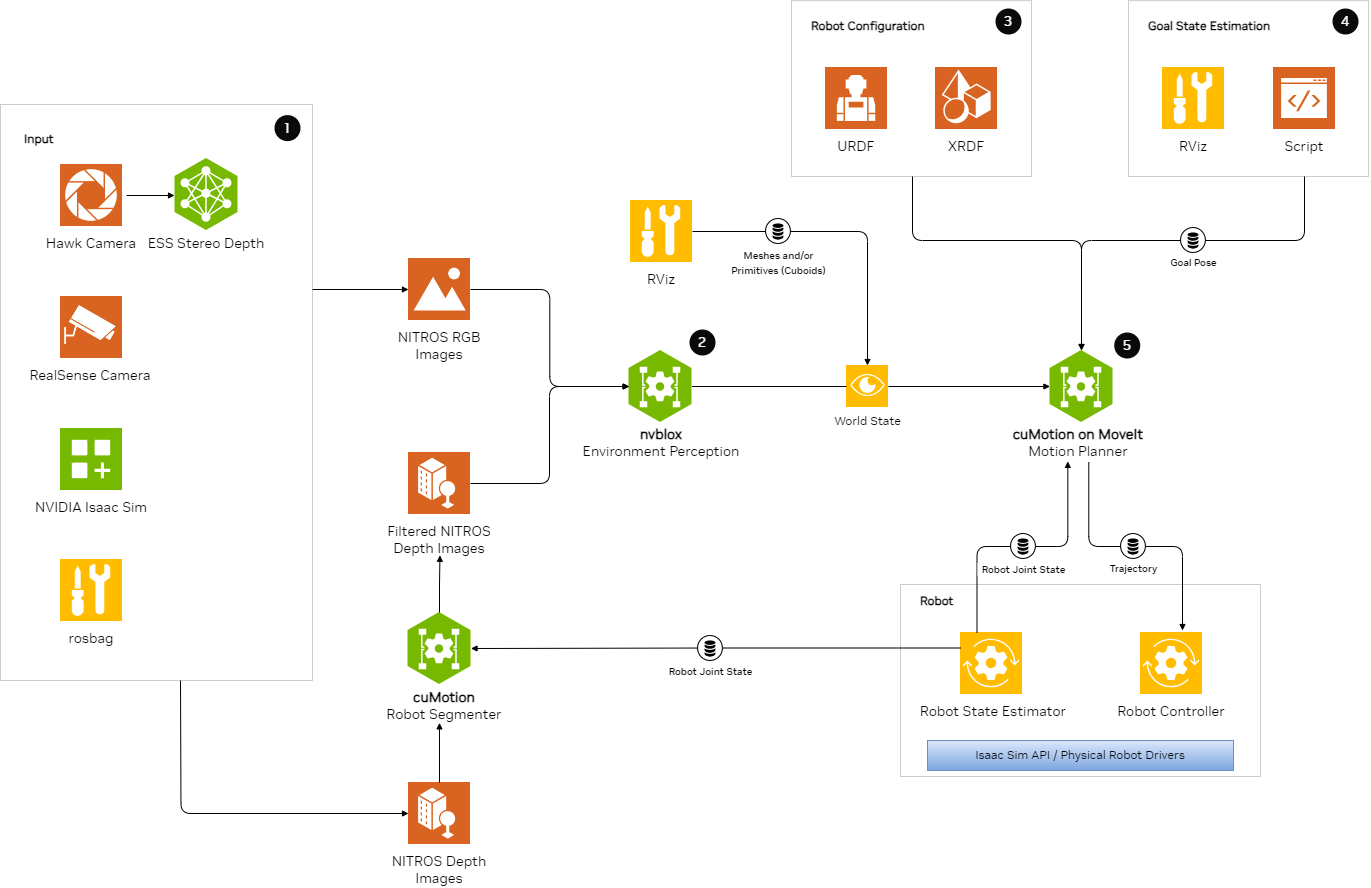

Reference Architecture

The Reference Architecture for Isaac Manipulator includes the following components.

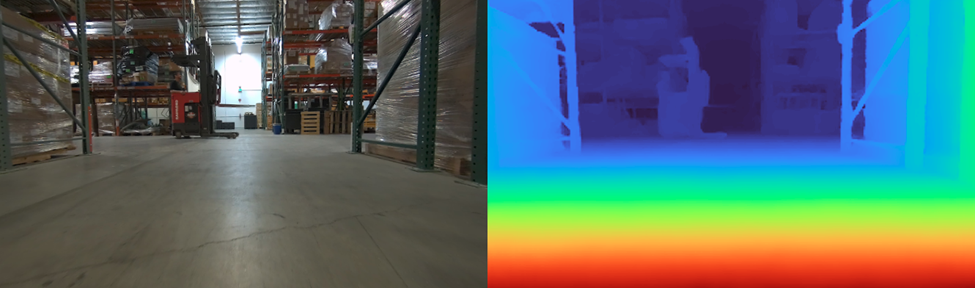

Visual Input: Generates RGB and Depth images using live or simulated stereo cameras. This component uses the ESS depth estimation model to generate depth images when using RGB cameras.

Environment and Obstacle Perception: Integrates RGB images, depth images, camera poses, and segmentation masks to create a representation of the environment. This component uses Nvblox for 3D scene reconstruction.

Robot Configuration: Provides robot description.

Goal State Estimation: Provides desired goal pose to achieve.

Motion Planner: Generates trajectory for collision-free motion to goal. This component uses the cuMotion library for generating time-optimal trajectories.

Hardware Platform: Includes list of supported compute platforms and robot arm manipulators.

This architecture is shown in the diagram above.

The components in the reference architecture represent functional blocks that can have multiple implementations. The primary implementation for each component has undergone testing by NVIDIA.
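The idea of functional blocks with interchangeable implementations can be sketched in plain Python. This is only an illustration of the data flow between components 1 to 5: every name below (`PlanRequest`, `run_pipeline`, the stub functions) is hypothetical and is not part of any Isaac ROS API.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Every name here is hypothetical; none of this is an Isaac ROS API.
Trajectory = List[Sequence[float]]  # list of joint-position waypoints


@dataclass
class PlanRequest:
    obstacles: List[str]        # stand-in for the environment representation
    robot_description: str      # stand-in for the robot configuration files
    goal_pose: Sequence[float]  # x, y, z, qx, qy, qz, qw


def run_pipeline(
    visual_input: Callable[[], dict],
    perceive: Callable[[dict], List[str]],
    robot_description: str,
    goal_pose: Sequence[float],
    plan: Callable[[PlanRequest], Trajectory],
) -> Trajectory:
    """Wire the functional blocks together in the order the text lists them."""
    frame = visual_input()                                          # component 1
    obstacles = perceive(frame)                                     # component 2
    request = PlanRequest(obstacles, robot_description, goal_pose)  # components 3, 4
    return plan(request)                                            # component 5


# Stub implementations; any block can be swapped without touching the others.
def live_camera() -> dict:
    return {"rgb": "rgb-image", "depth": "depth-image"}


def box_detector(frame: dict) -> List[str]:
    return ["table"] if frame["depth"] else []


def straight_line_planner(request: PlanRequest) -> Trajectory:
    # Two-waypoint placeholder; a real planner would avoid request.obstacles.
    return [[0.0, 0.0], [0.5, 1.0]]


trajectory = run_pipeline(
    live_camera, box_detector, "robot.urdf",
    [0.4, 0.0, 0.3, 0.0, 0.0, 0.0, 1.0], straight_line_planner,
)
print(len(trajectory))
```

Because each block is passed in as a callable, replacing the live camera with a recorded or simulated source only changes the first argument.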

Components

This section details the components used in Isaac Manipulator and provides links to tested examples you can run out of the box.

Component 1 - Visual Input and depth estimation

This component supplies the RGB and depth images required by Environment and Obstacle Perception (component 2).

In the reference architecture, this component is implemented using:

RGB camera: HAWK stereo cameras for capturing RGB images. The RGB images provided by these cameras are processed by the ESS Depth Estimation model, which generates the depth images required for environment perception.

RGBD camera: The Intel RealSense camera provides RGBD images, which can be used directly by the environment perception component.
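For the stereo path, depth is typically recovered from disparity with the pinhole stereo relation depth = focal length × baseline / disparity. The sketch below assumes the stereo model yields a per-pixel disparity in pixels; the focal length and baseline values are made-up illustration numbers, not the specifications of any particular camera.

```python
from typing import Optional


def disparity_to_depth(
    disparity_px: float, focal_length_px: float, baseline_m: float
) -> Optional[float]:
    """Convert one disparity value (pixels) to metric depth (meters).

    Returns None for non-positive disparity, which carries no depth information.
    """
    if disparity_px <= 0.0:
        return None
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only to make the arithmetic easy.
FOCAL_LENGTH_PX = 480.0
BASELINE_M = 0.15

near = disparity_to_depth(72.0, FOCAL_LENGTH_PX, BASELINE_M)
far = disparity_to_depth(36.0, FOCAL_LENGTH_PX, BASELINE_M)
print(near, far)
```

Halving the disparity doubles the depth, which is why depth resolution degrades for distant surfaces.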

There are other ways visual input can be provided to the workflow. These include the following input sources:

Rosbag: Running with pre-recorded data from a rosbag.

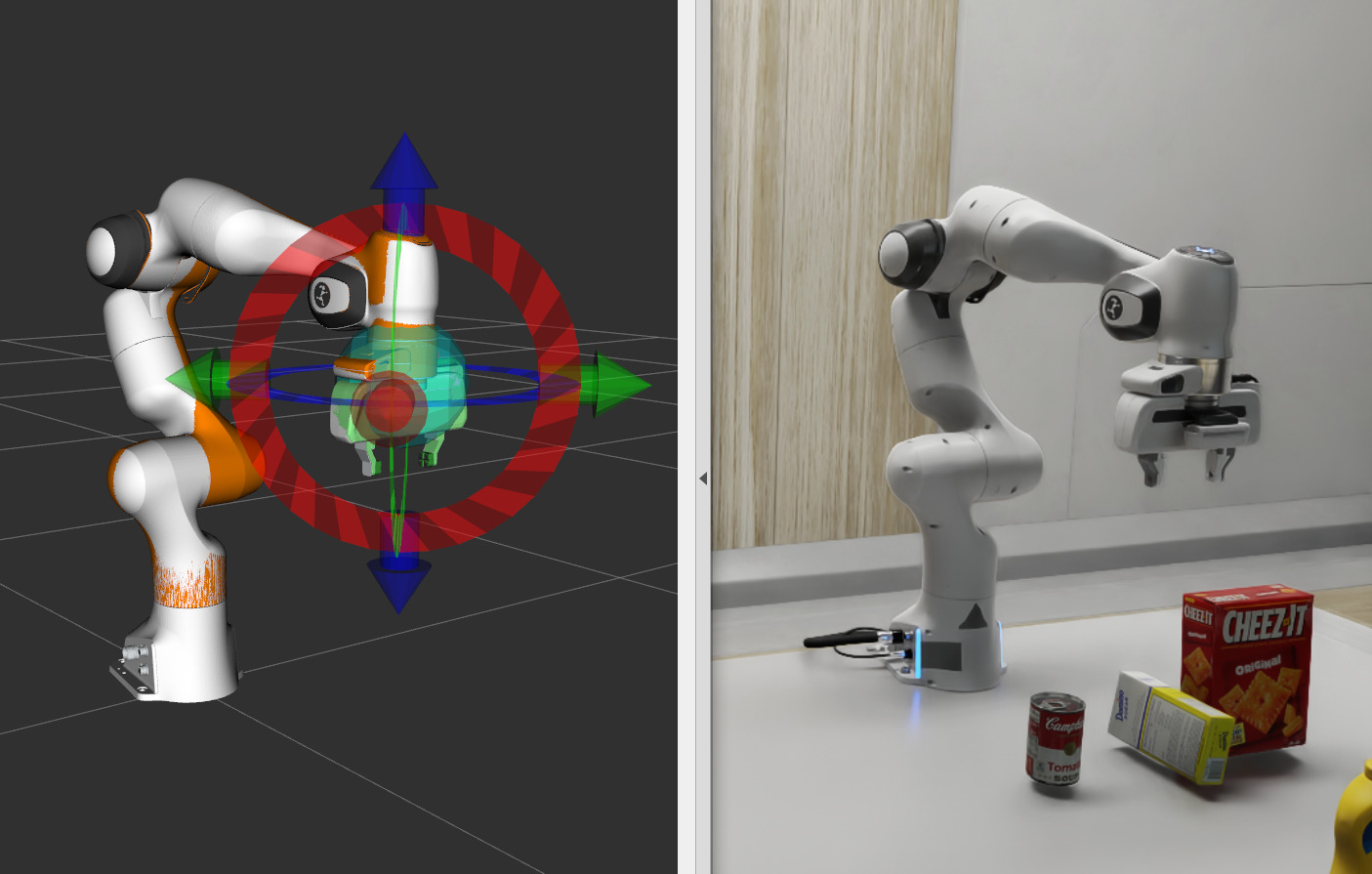

NVIDIA Isaac Sim: The tutorial below shows how to use Isaac Manipulator with Isaac Sim. It demonstrates trajectory planning for a Franka robot simulated in Isaac Sim.

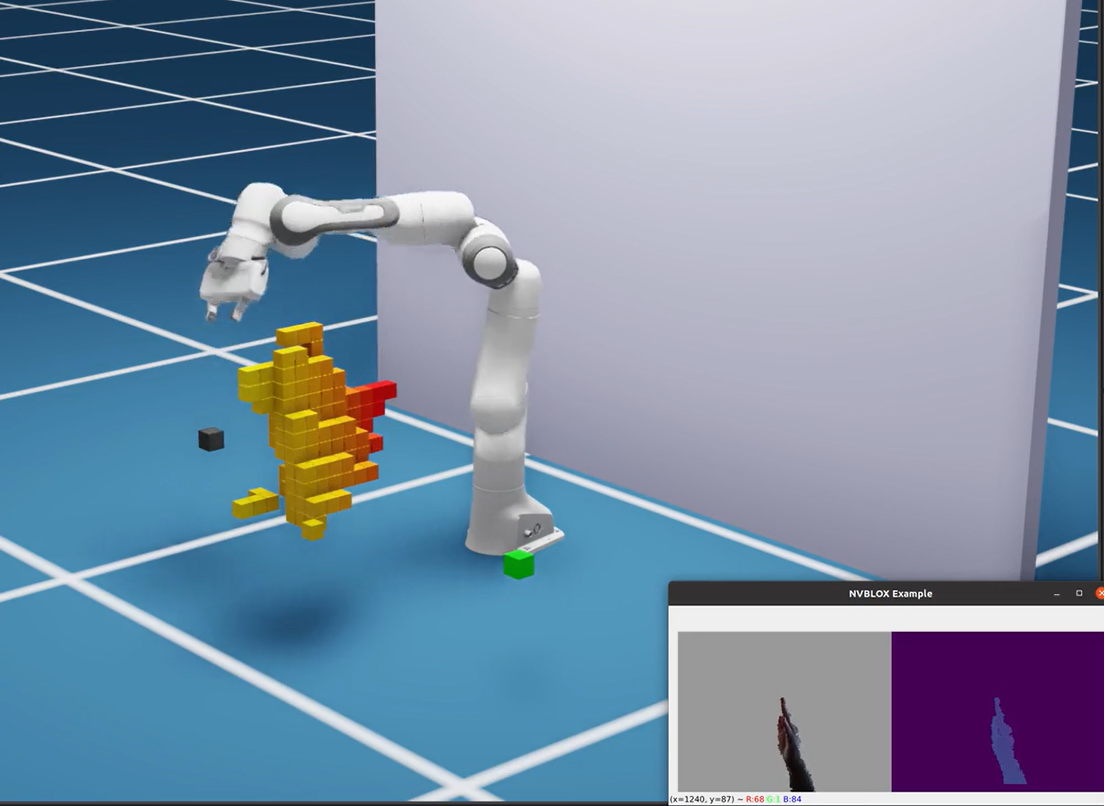

Component 2 - Nvblox for Environment and Obstacle Perception

This component provides a representation of the environment and obstacles in it for avoiding collisions during robot arm motion.

In the reference architecture, this obstacle representation is created by Nvblox, a library for 3D scene reconstruction. Nvblox processes RGB images and depth images from Visual Input (component 1) to produce cuboids or meshes representing obstacles. The Motion Planner (component 5) uses this representation to plan collision-free motion.
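To give a feel for how a depth image becomes a 3D obstacle representation, here is a deliberately simplified sketch: each valid depth pixel is back-projected through a pinhole camera model and binned into a voxel. This is not Nvblox's algorithm or API (Nvblox builds signed distance fields on the GPU and also fuses camera poses); the function names and intrinsics are assumptions, and points stay in the camera frame.

```python
import math
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]


def backproject(
    u: int, v: int, depth_m: float, fx: float, fy: float, cx: float, cy: float
) -> Tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) at the given depth."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def occupied_voxels(
    depth_image: List[List[float]],
    fx: float, fy: float, cx: float, cy: float,
    voxel_size_m: float,
) -> Set[Voxel]:
    """Return the set of voxel indices hit by at least one valid depth pixel."""
    voxels: Set[Voxel] = set()
    for v, row in enumerate(depth_image):
        for u, depth_m in enumerate(row):
            if depth_m <= 0.0:  # zero or negative depth marks an invalid pixel
                continue
            point = backproject(u, v, depth_m, fx, fy, cx, cy)
            voxels.add(
                (
                    math.floor(point[0] / voxel_size_m),
                    math.floor(point[1] / voxel_size_m),
                    math.floor(point[2] / voxel_size_m),
                )
            )
    return voxels


# Tiny 2x2 depth image with one invalid pixel; intrinsics are illustrative.
depth = [[1.0, 0.0], [1.0, 1.0]]
grid = occupied_voxels(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5, voxel_size_m=0.5)
print(sorted(grid))
```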

Nvblox requires a calibrated camera. Calibration is performed using the MoveIt Calibration package. For information on how to use it, see the link below for setting up a UR5e robot.

Useful Links

Component 3 - Robot Configuration

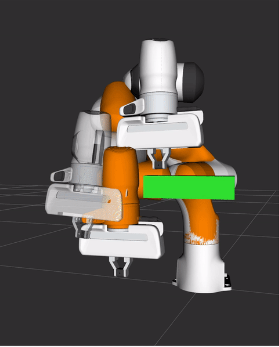

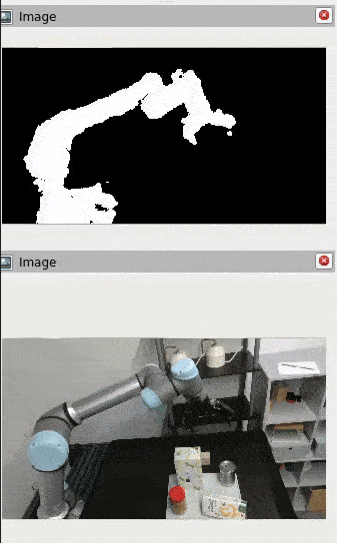

This component provides the robot configuration to cuMotion, the Motion Planner (component 5). The configuration is used both to generate motion and to segment the robot arm so that it can be filtered out of the perceived scene.

Two files are required:

1. URDF (Unified Robot Description Format) file - for basic kinematics.

2. XRDF (Extended Robot Description Format) file - for collision geometry, definition of the configuration space, and other data. Please see Robot Configuration below.
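As an illustration of the kinematic information a URDF carries, the following sketch parses a minimal URDF with Python's standard XML parser and lists the movable joints with their position limits. The two-joint robot is invented for this example; real robot descriptions are considerably longer, and the XRDF side (collision geometry, configuration space) is not shown here.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# A made-up two-joint arm, only large enough to show the URDF structure.
URDF_TEXT = """
<robot name="two_link_arm">
  <link name="base_link"/>
  <link name="upper_arm"/>
  <link name="forearm"/>
  <link name="tool"/>
  <joint name="shoulder" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <axis xyz="0 0 1"/>
    <limit lower="-3.14" upper="3.14" velocity="2.0" effort="50"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper_arm"/>
    <child link="forearm"/>
    <axis xyz="0 1 0"/>
    <limit lower="-2.0" upper="2.0" velocity="2.5" effort="30"/>
  </joint>
  <joint name="tool_mount" type="fixed">
    <parent link="forearm"/>
    <child link="tool"/>
  </joint>
</robot>
"""


def movable_joints(urdf_text: str) -> List[Tuple[str, float, float]]:
    """Return (name, lower, upper) for every joint that has a <limit> element."""
    root = ET.fromstring(urdf_text.strip())
    joints = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        if joint.get("type") == "fixed" or limit is None:
            continue  # fixed joints do not add a degree of freedom
        joints.append(
            (joint.get("name"), float(limit.get("lower")), float(limit.get("upper")))
        )
    return joints


print(movable_joints(URDF_TEXT))
```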

Useful Links

Component 4 - Goal State Estimation

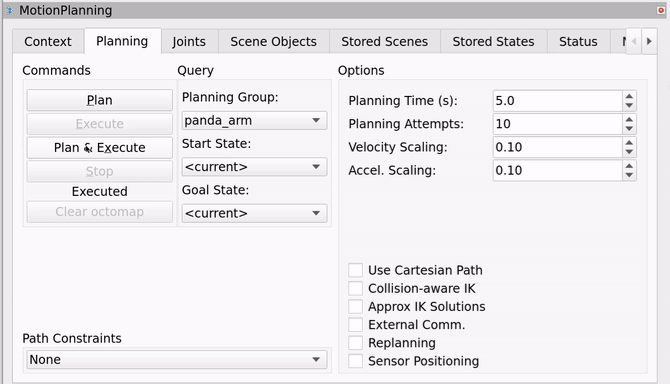

This component provides an interface for users to give a goal pose to the robot arm. In the reference architecture, this is done using RViz or via Python scripts.

Below is the MotionPlanning panel in RViz, where you can enable cuMotion and select a target goal pose for the robot.

Refer to the Isaac ROS MoveIt goal setter for how to provide the goal state using scripts.
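A goal pose is a position plus an orientation, usually expressed as a unit quaternion. The sketch below builds a plain dictionary laid out like the fields of a ROS `geometry_msgs/PoseStamped` message. It is a stand-in for illustration only: the helper name `make_goal_pose`, the frame name, and the restriction to a yaw-only rotation are assumptions, and this is not the interface of the Isaac ROS MoveIt goal setter.

```python
import math
from typing import Dict


def make_goal_pose(
    x: float, y: float, z: float, yaw_rad: float, frame_id: str = "base_link"
) -> Dict:
    """Build a PoseStamped-shaped dict for a pose rotated by yaw about the z axis."""
    half = yaw_rad / 2.0
    return {
        "header": {"frame_id": frame_id},
        "pose": {
            "position": {"x": x, "y": y, "z": z},
            # Quaternion for a pure rotation about z: (0, 0, sin(yaw/2), cos(yaw/2)).
            "orientation": {
                "x": 0.0,
                "y": 0.0,
                "z": math.sin(half),
                "w": math.cos(half),
            },
        },
    }


goal = make_goal_pose(0.4, 0.0, 0.3, yaw_rad=math.pi / 2.0)
q = goal["pose"]["orientation"]
norm = math.sqrt(q["x"] ** 2 + q["y"] ** 2 + q["z"] ** 2 + q["w"] ** 2)
print(goal["header"]["frame_id"], round(norm, 6))
```

In a ROS 2 node, the same values would be copied into a real message object before being sent to the planner.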

Useful Links

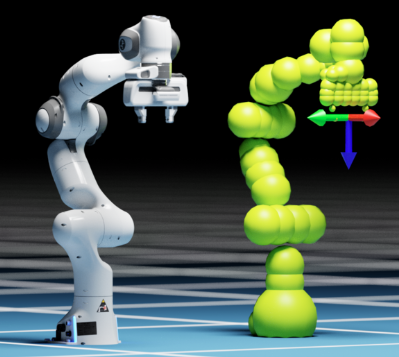

Component 5 - Motion Planner

cuMotion is the key component in the Isaac Manipulator pipeline.

The environment state (from component 2), robot configuration (from component 3) and goal pose (from component 4) are passed as input to cuMotion. The cuMotion Planner’s motion generation functions are exposed via a plugin for MoveIt 2.

cuMotion includes functions to accurately segment and filter out parts of the robot. This eliminates misleading contributions from the robot itself while reconstructing obstacles in the environment.
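The effect of robot filtering can be illustrated with a small geometric sketch. It assumes the robot's current shape is approximated by a set of spheres, a common choice for collision geometry, and drops every 3D point that falls inside one of them. The function name, sphere values, and padding parameter are hypothetical; this is not cuMotion's implementation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Sphere = Tuple[Point, float]  # (center, radius in meters)


def filter_robot_points(
    points: Sequence[Point], robot_spheres: Sequence[Sphere], padding_m: float = 0.0
) -> List[Point]:
    """Keep only points that lie outside every (padded) robot sphere."""
    kept = []
    for point in points:
        inside_robot = any(
            math.dist(point, center) <= radius + padding_m
            for center, radius in robot_spheres
        )
        if not inside_robot:
            kept.append(point)
    return kept


# Two spheres standing in for arm links at the current joint configuration.
spheres: List[Sphere] = [((0.0, 0.0, 0.5), 0.1), ((0.0, 0.0, 0.7), 0.1)]
cloud: List[Point] = [
    (0.0, 0.0, 0.55),  # on the arm: removed
    (0.0, 0.05, 0.7),  # on the arm: removed
    (0.5, 0.2, 0.1),   # on a real obstacle: kept
]
print(filter_robot_points(cloud, spheres))
```

Without this step, the arm's own surface would be reconstructed as an obstacle and the planner would see the robot as permanently in collision.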

cuMotion generates a time-optimal trajectory to the goal state, taking obstacles in the environment into account. This joint-space trajectory is passed to the robot controller for execution. The robot controller is exposed via drivers on a physical robot or via the Isaac Sim API in a simulated setup.
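For intuition about what "time-optimal" means, consider a single joint moving from rest to rest under a velocity limit and an acceleration limit. The fastest motion accelerates at the limit, cruises at maximum velocity if the distance is long enough, then decelerates at the limit. The sketch below computes that minimum duration. It is a textbook simplification, not cuMotion's method: it ignores jerk limits, obstacles, and coupling between joints, and all function names are illustrative.

```python
import math
from typing import Sequence


def min_time_rest_to_rest(distance: float, v_max: float, a_max: float) -> float:
    """Minimum duration of a rest-to-rest move under velocity and acceleration limits."""
    d = abs(distance)
    if d == 0.0:
        return 0.0
    if d >= v_max ** 2 / a_max:
        # Trapezoidal profile: the joint reaches v_max and cruises.
        return d / v_max + v_max / a_max
    # Triangular profile: the joint must brake before reaching v_max.
    return 2.0 * math.sqrt(d / a_max)


def synchronized_duration(
    distances: Sequence[float], v_max: Sequence[float], a_max: Sequence[float]
) -> float:
    """All joints finish together, so the slowest joint sets the duration."""
    return max(
        min_time_rest_to_rest(d, v, a) for d, v, a in zip(distances, v_max, a_max)
    )


long_move = min_time_rest_to_rest(1.0, v_max=1.0, a_max=2.0)
short_move = min_time_rest_to_rest(0.18, v_max=1.0, a_max=2.0)
both = synchronized_duration([1.0, 0.18], [1.0, 1.0], [2.0, 2.0])
print(long_move, round(short_move, 6), both)
```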

Component 6 - Hardware Platform

This component consists of hardware platforms and robot arm manipulators on which Isaac Manipulator has been tested:

Universal Robots UR5e manipulator

Universal Robots UR10e manipulator

Franka

Isaac Manipulator has been tested on the Jetson Orin platforms running JetPack 6 and on x86_64 systems with NVIDIA GPUs. See more in Supported Platforms below.

Integration with additional Isaac packages

Some use cases benefit from the following additional Isaac packages. These can be integrated into existing pipelines, whether or not those pipelines already use Isaac Manipulator components.

Isaac ROS Pose Estimation - estimating the 6 DoF pose of objects using FoundationPose. FoundationPose is a pre-trained model that works on different, novel objects without retraining. The output includes object pose and tracking information, which can be used by manipulator grippers to calculate grasps for objects.

Isaac ROS Object Detection - detecting objects using SyntheticaDETR trained models. SyntheticaDETR is trained exclusively on synthetically generated data using NVIDIA Omniverse. This package can be used individually for object detection and the output bounding boxes can also be used as initial estimates for FoundationPose when used together.

Useful Links

Summary

This reference architecture for Isaac Manipulator describes components and examples that have undergone testing and that provide a robust foundation for developing end-use applications. Individual components of Isaac Manipulator can be combined and integrated to meet diverse application requirements.

Additional Resources

Check out our resources on using Isaac Manipulator with your robots.

Learn more about featured NVIDIA solutions:

Review Documentation & Samples:

Build your business case:

See how Intrinsic.ai uses Isaac Manipulator to generate grasp poses and robot motions, with motion plans produced within 30 milliseconds.

See how to design, create, and deploy robotics applications using NVIDIA Isaac.