isaac_ros_foundationpose

Source code on GitHub.

Quickstart

Set Up Development Environment

Set up your development environment by following the instructions in getting started.

Clone

isaac_ros_commonunder${ISAAC_ROS_WS}/src.cd ${ISAAC_ROS_WS}/src && \ git clone -b release-3.2 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git isaac_ros_common

(Optional) Install dependencies for any sensors you want to use by following the sensor-specific guides.

Note

We strongly recommend installing all sensor dependencies before starting any quickstarts. Some sensor dependencies require restarting the Isaac ROS Dev container during installation, which will interrupt the quickstart process.

Download Quickstart Assets

Download quickstart data from NGC:

Make sure required libraries are installed.

sudo apt-get install -y curl jq tar

Then, run these commands to download the asset from NGC:

NGC_ORG="nvidia" NGC_TEAM="isaac" PACKAGE_NAME="isaac_ros_foundationpose" NGC_RESOURCE="isaac_ros_foundationpose_assets" NGC_FILENAME="quickstart.tar.gz" MAJOR_VERSION=3 MINOR_VERSION=2 VERSION_REQ_URL="https://catalog.ngc.nvidia.com/api/resources/versions?orgName=$NGC_ORG&teamName=$NGC_TEAM&name=$NGC_RESOURCE&isPublic=true&pageNumber=0&pageSize=100&sortOrder=CREATED_DATE_DESC" AVAILABLE_VERSIONS=$(curl -s \ -H "Accept: application/json" "$VERSION_REQ_URL") LATEST_VERSION_ID=$(echo $AVAILABLE_VERSIONS | jq -r " .recipeVersions[] | .versionId as \$v | \$v | select(test(\"^\\\\d+\\\\.\\\\d+\\\\.\\\\d+$\")) | split(\".\") | {major: .[0]|tonumber, minor: .[1]|tonumber, patch: .[2]|tonumber} | select(.major == $MAJOR_VERSION and .minor <= $MINOR_VERSION) | \$v " | sort -V | tail -n 1 ) if [ -z "$LATEST_VERSION_ID" ]; then echo "No corresponding version found for Isaac ROS $MAJOR_VERSION.$MINOR_VERSION" echo "Found versions:" echo $AVAILABLE_VERSIONS | jq -r '.recipeVersions[].versionId' else mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets && \ FILE_REQ_URL="https://api.ngc.nvidia.com/v2/resources/$NGC_ORG/$NGC_TEAM/$NGC_RESOURCE/\ versions/$LATEST_VERSION_ID/files/$NGC_FILENAME" && \ curl -LO --request GET "${FILE_REQ_URL}" && \ tar -xf ${NGC_FILENAME} -C ${ISAAC_ROS_WS}/isaac_ros_assets && \ rm ${NGC_FILENAME} fi

Download the pre-trained FoundationPose models from NGC to the required directory:

mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose && \ cd ${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose && \ wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/foundationpose/versions/1.0.0_onnx/files/refine_model.onnx' -O refine_model.onnx && \ wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/foundationpose/versions/1.0.0_onnx/files/score_model.onnx' -O score_model.onnx

Build isaac_ros_foundationpose

Launch the Docker container using the

run_dev.shscript:cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Install the prebuilt Debian package:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-foundationpose

Clone this repository under

${ISAAC_ROS_WS}/src:cd ${ISAAC_ROS_WS}/src && \ git clone -b release-3.2 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_pose_estimation.git isaac_ros_pose_estimation

Launch the Docker container using the

run_dev.shscript:cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Use

rosdepto install the package’s dependencies:sudo apt-get update

rosdep update && rosdep install --from-paths ${ISAAC_ROS_WS}/src/isaac_ros_pose_estimation/isaac_ros_foundationpose --ignore-src -y

Build the package from source:

cd ${ISAAC_ROS_WS}/ && \ colcon build --symlink-install --packages-up-to isaac_ros_foundationpose --base-paths ${ISAAC_ROS_WS}/src/isaac_ros_pose_estimation/isaac_ros_foundationpose

Source the ROS workspace:

Note

Make sure to repeat this step in every terminal created inside the Docker container.

Because this package was built from source, the enclosing workspace must be sourced for ROS to be able to find the package’s contents.

source install/setup.bash

Run Launch File

Inside the container, convert models from

.onnxto TensorRT engine plans:Convert the refine model:

/usr/src/tensorrt/bin/trtexec --onnx=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/refine_model.onnx --saveEngine=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/refine_trt_engine.plan --minShapes=input1:1x160x160x6,input2:1x160x160x6 --optShapes=input1:1x160x160x6,input2:1x160x160x6 --maxShapes=input1:42x160x160x6,input2:42x160x160x6

Convert the score model:

/usr/src/tensorrt/bin/trtexec --onnx=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/score_model.onnx --saveEngine=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/score_trt_engine.plan --minShapes=input1:1x160x160x6,input2:1x160x160x6 --optShapes=input1:1x160x160x6,input2:1x160x160x6 --maxShapes=input1:252x160x160x6,input2:252x160x160x6

Note

FoundationPose TensorRT engines are running with FP32 precision in TensorRT 10.3+ versions due to FP16 precision loss.

Note

At least 7.5 GB of free GPU memory space is required for model conversion.

The model conversion time varies across different platforms. On Jetson AGX Orin, the engine conversion process takes ~10-15 minutes to complete.

Complete the Isaac ROS RT-DETR tutorial.

Continuing inside the container, install the following dependencies:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-examples

Run the following launch files to spin up a demo of this package:

Launch

isaac_ros_foundationpose:ros2 launch isaac_ros_examples isaac_ros_examples.launch.py launch_fragments:=foundationpose interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/quickstart_interface_specs.json mesh_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/Mustard/textured_simple.obj texture_path:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/Mustard/texture_map.png score_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/score_trt_engine.plan refine_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/refine_trt_engine.plan rt_detr_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan

Then open another terminal, and enter the Docker container again:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Then, play the rosbag:

ros2 bag play -l ${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/quickstart.bag/

Ensure that you have already set up your RealSense camera using the RealSense setup tutorial. If you have not, please set up the sensor and then restart this quickstart from the beginning.

Complete the Isaac ROS RT-DETR tutorial.

Open a new terminal and launch the Docker container using the

run_dev.shscript:cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Install the following dependencies:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-realsense

Place the object in front of the camera and run the launch file:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py launch_fragments:=realsense_mono_rect_depth,foundationpose mesh_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/Mac_and_cheese_0_1/Mac_and_cheese_0_1.obj texture_path:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/Mac_and_cheese_0_1/materials/textures/baked_mesh_tex0.png score_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/score_trt_engine.plan refine_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/refine_trt_engine.plan rt_detr_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan

Ensure that you have already set up your Hawk camera using the Hawk setup tutorial. If you have not, please set up the sensor and then restart this quickstart from the beginning.

Complete the Isaac ROS RT-DETR tutorial.

Open a new terminal and launch the Docker container using the

run_dev.shscript:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

Install the following dependencies:

sudo apt-get update

sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-argus-camera

Place the object in front of the hawk camera and run the launch file:

ros2 launch isaac_ros_examples isaac_ros_examples.launch.py launch_fragments:=argus_depth,foundationpose mesh_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/Mac_and_cheese_0_1/Mac_and_cheese_0_1.obj texture_path:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_foundationpose/Mac_and_cheese_0_1/materials/textures/baked_mesh_tex0.png score_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/score_trt_engine.plan refine_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/foundationpose/refine_trt_engine.plan rt_detr_engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan

Note

The Hawk pipeline may take more than 10s for pose estimation on some complicated objects.

Visualize Results

Open a new terminal inside the Docker container:

cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \ ./scripts/run_dev.sh

launch

RViz2to visualize the outputrviz2 -d $(ros2 pkg prefix isaac_ros_foundationpose --share)/rviz/foundationpose.rviz

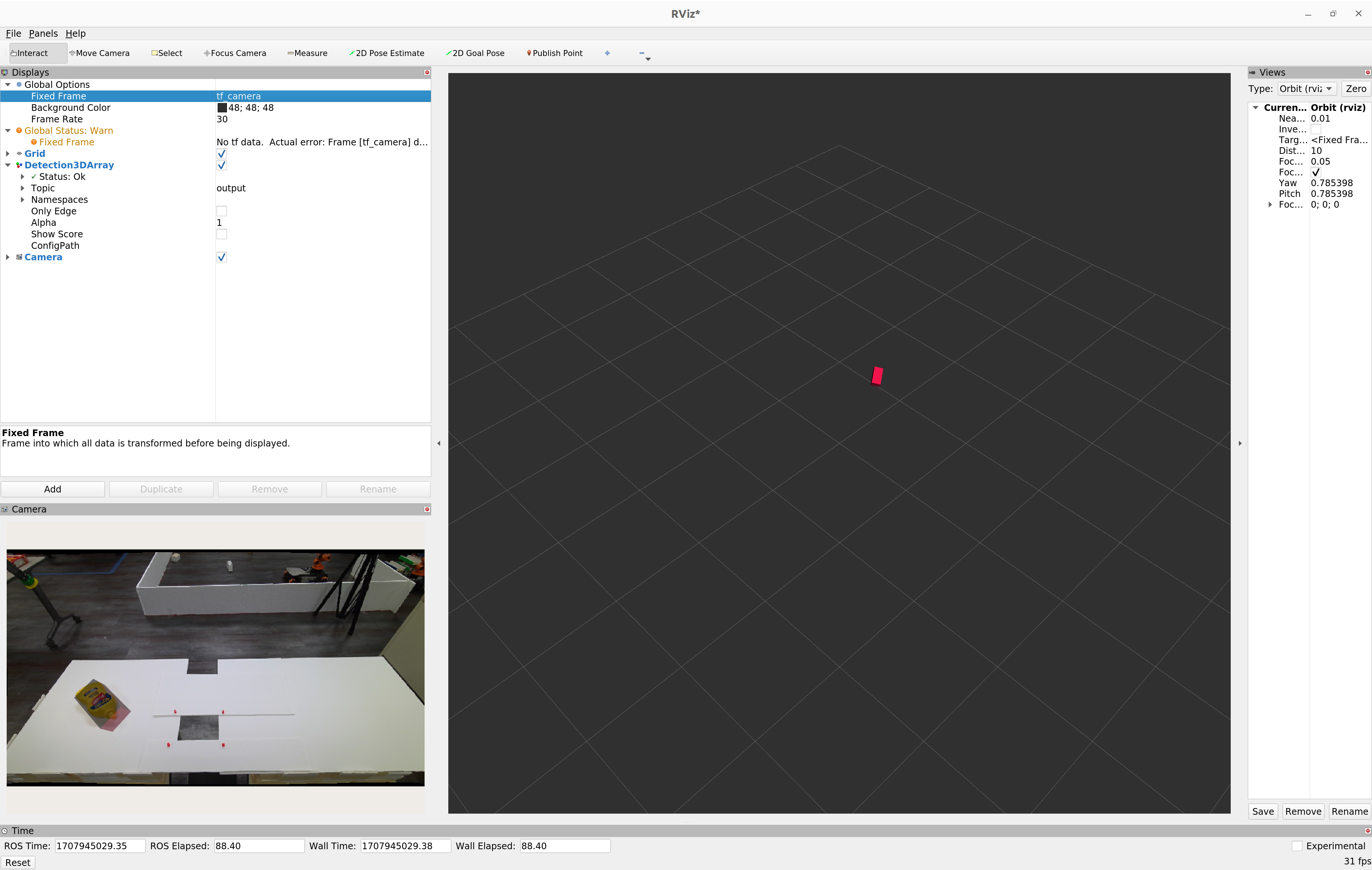

You should see a

RViz2window open as shown below showing the 3D bounding box overlaid over the input image

launch

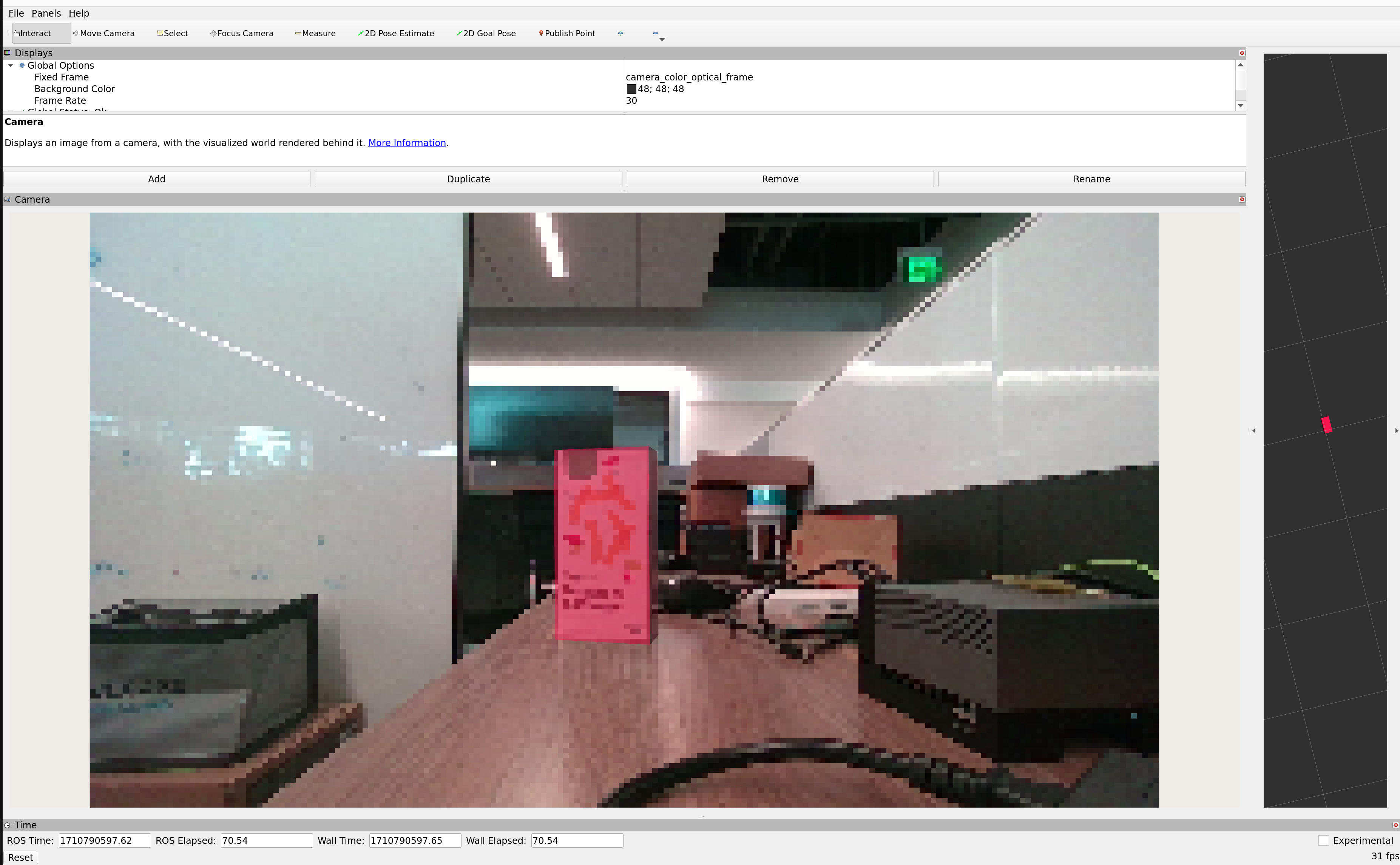

RViz2to visualize the outputrviz2 -d $(ros2 pkg prefix isaac_ros_foundationpose --share)/rviz/foundationpose_realsense.rviz

You should see a visualization of the 3D pose estimate as shown below:

launch

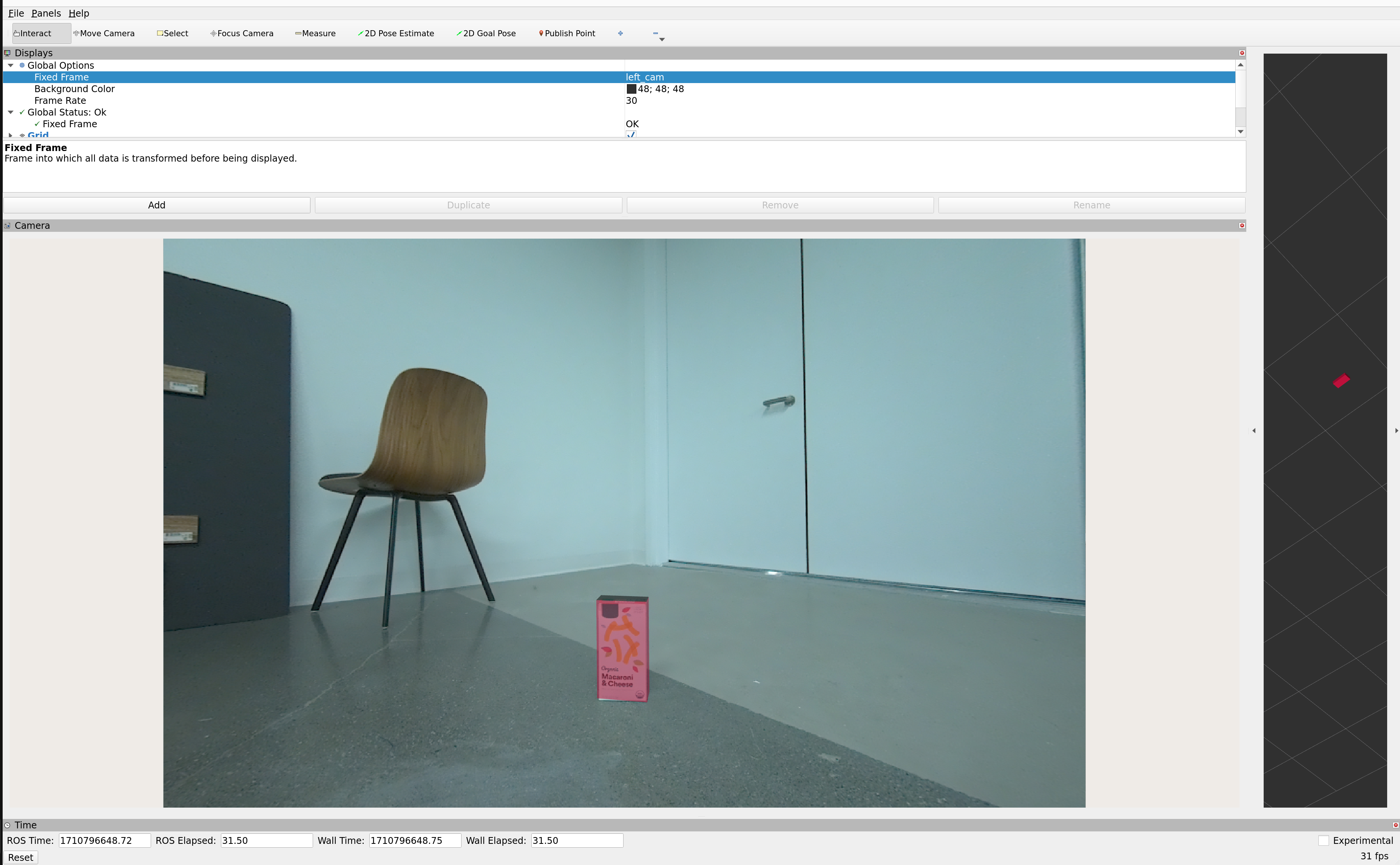

RViz2to visualize the outputrviz2 -d $(ros2 pkg prefix isaac_ros_foundationpose --share)/rviz/foundationpose_hawk.rviz

You should see a visualization of the 3D pose estimate as shown below:

Note

FoundationPose is designed to perform pose estimation on previously unseen objects without model retraining. As a result, inference requires significant GPU compute for first detection. Tracking once an initial pose estimation is determined can be significantly faster, however. Refer to performance of the model for your target platform to determine which model to use.

More powerful discrete GPUs can outperform all other platforms for this task and should be preferred if higher performance is required. Interleaving pose estimation with other tasks rather than running continuously can be a more effective solution as well. Finally, if runtime performance is critical and offline training resources are available, developers can train CenterPose for their own target objects using synthetic data generation and/or real-world data for faster pose estimation.

Try More Examples

To continue your exploration, check out the following suggested examples:

Note

FoundationPose expects the origin frame to be at the center of the mesh.

Troubleshooting

Object Detections are Offset from the Actual Object

Symptom

The object detections are offset from the actual object.

Solution

This may be because the vertices/faces in the .obj file is not at the center of the object. Rather, it could be at the corner/edge of the object. To fix this, follow this tutorial.

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, please check here.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, please check here.

API

Usage

ros2 launch isaac_ros_foundationpose isaac_ros_foundationpose.launch.py refine_engine_file_path:=<path to refine model .plan> score_engine_file_path:=<path to score model .plan> mesh_file_path:=<path to object mesh file> texture_path:=<path to texture map> launch_rviz:=<enable rviz> launch_bbox_to_mask:=<enable bbox to mask converter> mask_height:=<converted mask height> mask_width:=<converted mask width>

FoundationPose Node

ROS Parameters

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

|

|

|

The absolute path to the target object mesh file. |

|

|

|

The absolute Path to the target object texture file. |

|

|

|

Minimum allowed Z-axis value of pointcloud. |

|

|

|

Minimum allowed X,Y,Z-axis value of pointcloud to threshold. |

|

|

|

The number of iterations applied to the refinement model can enhance accuracy. However, more iterations will take longer time for processing. |

|

|

|

Rotational symmetries around axes with angles in format ‘axis_angle’ (e.g., ‘x_30’ for 30 degrees around x-axis). Valid axes are [x, y, z]. Angles must be explicitly specified (e.g., ‘x_180’) or use ‘full’ for full rotation (evaluated at 30° increments). |

|

|

|

Deprecated: Use symmetry_axes instead. Adds 180-degree rotational symmetry around specified axes. Format: ‘axis’ (e.g., ‘x’ for 180-degree rotation around x-axis). Valid axes are [x, y, z]. |

|

|

|

Fixed poses constrain the orientation angles during pose estimation by specifying axis-angle pairs (e.g., x_30, y_45) that force rotations around specific axes to remain at the given values in degrees. |

|

|

|

Fixed translation components in format ‘axis_value’ (e.g., ‘x_0.1’, ‘y_0.2’, ‘z_0.3’). Valid axes are [x, y, z] for translation in x, y, z directions respectively. If provided, these will override the initial estimated translations. |

|

|

|

The absolute path to the refinement model file in the local file system. |

|

|

|

The absolute path to either where you want your refinement TensorRT engine plan to be generated or where your pre-generated engine plan file is located. |

|

|

|

The absolute path to your score model file in the local file system. |

|

|

|

The absolute path to either where you want your score TensorRT engine plan to be generated or where your pre-generated engine plan file is located. |

|

|

|

A list of tensor names of refinement model to be bound to specified input bindings names. Bindings occur in sequential order. |

|

|

|

A list of input tensor binding names specified by the refinement model. |

|

|

|

A list of tensor names of score model to be bound to specified input bindings names. Bindings occur in sequential order. |

|

|

|

A list of input tensor binding names specified by the score model. |

|

|

|

A list of tensor names to be bound to specified output binding names for the refine model. |

|

|

|

A list of output tensor binding names specified by the refine model. |

|

|

|

A list of tensor names to be bound to specified output binding names for the score model. |

|

|

|

A list of output tensor binding names specified by the score model. |

|

|

|

Name of the frame that is used when publishing the pose to the TF tree. |

|

|

|

Synchronization threshold in nanoseconds. |

|

|

|

When enabled, saves intermediate results to disk for debugging. |

|

|

|

Directory to save intermediate results for debugging. |

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

The input depth image. |

|

|

The input segmentation mask. |

|

|

The input color image (rectified). |

|

|

The input image camera_info. |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

The output pose estimate. |

|

|

The output pose matrix, used as the input for next frame tracking. |

FoundationPose Tracking Node

ROS Parameters

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

|

|

|

The absolute path to the target object mesh file. |

|

|

|

The absolute Path to the target object texture file. |

|

|

|

Minimum allowed Z-axis value of pointcloud. |

|

|

|

Minimum allowed X,Y,Z-axis value of pointcloud to threshold. |

|

|

|

The absolute path to the refinement model file in the local file system. |

|

|

|

The absolute path to either where you want your refinement TensorRT engine plan to be generated or where your pre-generated engine plan file is located. |

|

|

|

A list of tensor names of refinement model to be bound to specified input bindings names. Bindings occur in sequential order. |

|

|

|

A list of input tensor binding names specified by the refinement model. |

|

|

|

A list of tensor names to be bound to specified output binding names for the refine model. |

|

|

|

A list of output tensor binding names specified by the refine model. |

|

|

|

Name of the frame that is used when publishing the pose to the TF tree. |

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

The input depth image. |

|

|

The input pose estimation matrix from last frame. |

|

|

The input color image (rectified). |

|

|

The input image camera_info. |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

The output pose estimate. |

|

|

The output pose matrix, used as the input for next frame tracking. |

FoundationPose Selector Node

ROS Parameters

ROS Parameter |

Type |

Default |

|---|---|---|

|

|

|

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

The input depth image. |

|

|

The input segmentation mask. |

|

|

The input color image (rectified). |

|

|

The input image camera_info. |

|

|

The input pose matrix comes from last frame tracking. |

|

|

The input pose matrix comes from pose estimation. |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

The output depth image for pose estimation. |

|

|

The output segmentation mask for pose estimation. |

|

|

The output color image (rectified) for pose estimation. |

|

|

The output image camera_info for pose estimation. |

|

|

The output segmentation mask for tracking. |

|

|

The output color image (rectified) for tracking. |

|

|

The output image camera_info for tracking. |

|

|

The output pose matrix, used as the input for next frame tracking. |

FoundationPose Detection2DToMask Node

ROS Parameters

ROS Parameter |

Type |

Default |

Description |

|---|---|---|---|

|

|

|

The output mask width. FoundationPose expects the mask to have the same dimensions as the RGB image. |

|

|

|

The output mask height. FoundationPose expects the mask to have the same dimensions as the RGB image. |

ROS Topics Subscribed

ROS Topic |

Interface |

Description |

|---|---|---|

|

The input Detection2D message. |

|

|

The input Detection2D Array message. The mask is created using the highest-scoring detection from the input Detection2D Array message. |

ROS Topics Published

ROS Topic |

Interface |

Description |

|---|---|---|

|

The output binary segmentation mask. |