isaac_ros_rtdetr

Source code on GitHub.

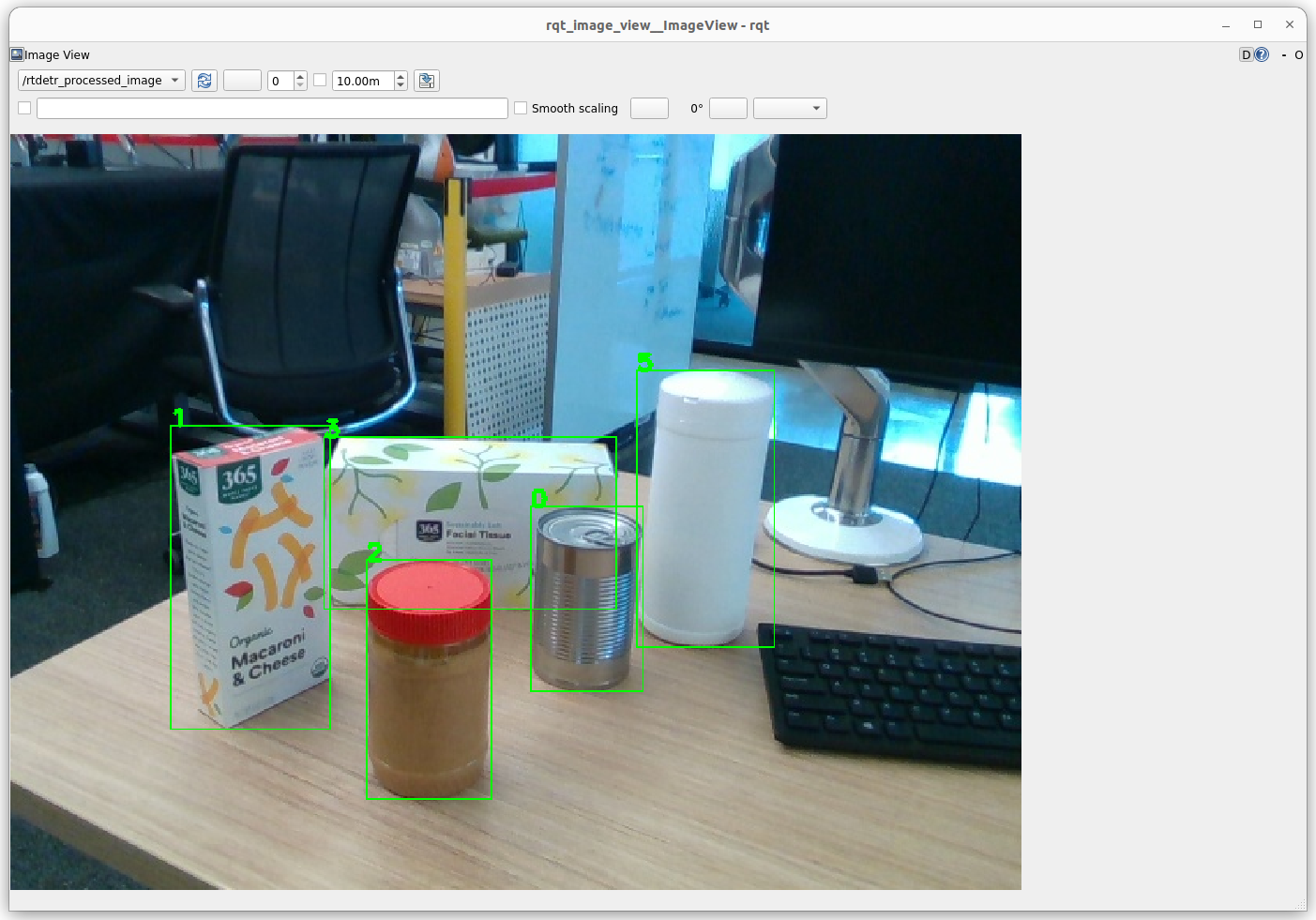

Stable object detections using SyntheticaDETR in a difficult scene with camera motion blur, round objects with few features, reflective object material, and light reflections

Quickstart

Set Up Development Environment

Set up your development environment by following the instructions in getting started.

Clone isaac_ros_common under ${ISAAC_ROS_WS}/src:

```shell
cd ${ISAAC_ROS_WS}/src && \
  git clone -b release-3.1 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_common.git isaac_ros_common
```

(Optional) Install dependencies for any sensors you want to use by following the sensor-specific guides.

Warning

We strongly recommend installing all sensor dependencies before starting any quickstarts. Some sensor dependencies require restarting the Isaac ROS Dev container during installation, which will interrupt the quickstart process.

Download Quickstart Assets

Download quickstart data from NGC:

Make sure the required tools are installed:

```shell
sudo apt-get install -y curl jq tar
```
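If you script these steps, it can help to stop early when one of these tools is missing rather than failing halfway through the download. A minimal sketch, assuming bash; `require_tools` is a hypothetical helper, not part of Isaac ROS:

```shell
# Hypothetical helper: report every missing command and return non-zero
# if at least one of the named tools is not on PATH.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "Missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Warn (without aborting the shell) if anything the download script needs is absent.
require_tools curl jq tar || echo "Install the missing tools with apt-get first." >&2
```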

Then, run these commands to download the asset from NGC:

```shell
NGC_ORG="nvidia"
NGC_TEAM="isaac"
PACKAGE_NAME="isaac_ros_rtdetr"
NGC_RESOURCE="isaac_ros_rtdetr_assets"
NGC_FILENAME="quickstart.tar.gz"
MAJOR_VERSION=3
MINOR_VERSION=1
VERSION_REQ_URL="https://catalog.ngc.nvidia.com/api/resources/versions?orgName=$NGC_ORG&teamName=$NGC_TEAM&name=$NGC_RESOURCE&isPublic=true&pageNumber=0&pageSize=100&sortOrder=CREATED_DATE_DESC"
AVAILABLE_VERSIONS=$(curl -s \
    -H "Accept: application/json" "$VERSION_REQ_URL")
LATEST_VERSION_ID=$(echo "$AVAILABLE_VERSIONS" | jq -r "
    .recipeVersions[]
    | .versionId as \$v
    | \$v | select(test(\"^\\\\d+\\\\.\\\\d+\\\\.\\\\d+$\"))
    | split(\".\") | {major: .[0]|tonumber, minor: .[1]|tonumber, patch: .[2]|tonumber}
    | select(.major == $MAJOR_VERSION and .minor <= $MINOR_VERSION)
    | \$v
    " | sort -V | tail -n 1
)
if [ -z "$LATEST_VERSION_ID" ]; then
    echo "No corresponding version found for Isaac ROS $MAJOR_VERSION.$MINOR_VERSION"
    echo "Found versions:"
    echo "$AVAILABLE_VERSIONS" | jq -r '.recipeVersions[].versionId'
else
    mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets && \
    FILE_REQ_URL="https://api.ngc.nvidia.com/v2/resources/$NGC_ORG/$NGC_TEAM/$NGC_RESOURCE/\
versions/$LATEST_VERSION_ID/files/$NGC_FILENAME" && \
    curl -LO --request GET "${FILE_REQ_URL}" && \
    tar -xf ${NGC_FILENAME} -C ${ISAAC_ROS_WS}/isaac_ros_assets && \
    rm ${NGC_FILENAME}
fi
```
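The jq filter in the download script keeps only version IDs of the form X.Y.Z, drops those whose major version differs from MAJOR_VERSION or whose minor version exceeds MINOR_VERSION, and picks the highest remaining one. The same selection rule can be sketched with grep, awk, and sort so it can be checked offline. `pick_latest_version` is a hypothetical name, and this is an illustration of the rule, not a replacement for the script:

```shell
# Hypothetical helper mirroring the jq filter: read one version ID per line
# on stdin and print the newest X.Y.Z whose major equals $1 and whose minor
# is at most $2. Prints nothing if no version qualifies.
pick_latest_version() {
  local major="$1" max_minor="$2"
  grep -E '^[0-9]+\.[0-9]+\.[0-9]+$' \
    | awk -F. -v maj="$major" -v mmin="$max_minor" '$1 == maj && $2 <= mmin' \
    | sort -V \
    | tail -n 1
}

# Pre-release tags and other major/minor versions are ignored.
printf '%s\n' 3.0.0 3.1.0 3.1.2 3.2.0 2.9.9 3.1.0-rc1 | pick_latest_version 3 1
# prints: 3.1.2
```

To try it against the live catalog, feed it the version IDs extracted with the same jq expression the script uses in its error branch: `echo "$AVAILABLE_VERSIONS" | jq -r '.recipeVersions[].versionId' | pick_latest_version 3 1`.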

Download a pre-trained SyntheticaDETR model to use in the quickstart:

```shell
mkdir -p ${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr && \
  cd ${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr && \
  wget 'https://api.ngc.nvidia.com/v2/models/nvidia/isaac/synthetica_detr/versions/1.0.0/files/sdetr_grasp.etlt'
```
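Before moving on, you can confirm that the downloads landed where the later steps expect them. A minimal sketch; `check_assets` is a hypothetical helper, and the paths are the ones referenced by the launch, rosbag, and conversion commands in this quickstart:

```shell
# Hypothetical helper: each argument must be a non-empty regular file or a
# directory with at least one entry. Returns non-zero if any check fails.
check_assets() {
  local status=0 path
  for path in "$@"; do
    if [ -d "$path" ] && [ -n "$(ls -A "$path")" ]; then
      echo "ok: $path"
    elif [ -f "$path" ] && [ -s "$path" ]; then
      echo "ok: $path"
    else
      echo "missing or empty: $path" >&2
      status=1
    fi
  done
  return "$status"
}

check_assets \
  "${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_rtdetr/quickstart.bag" \
  "${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_rtdetr/quickstart_interface_specs.json" \
  "${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.etlt" \
  || echo "Re-run the download steps above." >&2
```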

Note

This quickstart uses the NVIDIA-produced sdetr_grasp SyntheticaDETR model, but Isaac ROS RT-DETR is compatible with any model based on the RT-DETR architecture. For more about the differences between SyntheticaDETR and RT-DETR, see here.

Build isaac_ros_rtdetr

To install the prebuilt Debian package, launch the Docker container using the run_dev.sh script:

```shell
cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh
```

Install the prebuilt Debian package:

```shell
sudo apt-get install -y ros-humble-isaac-ros-rtdetr
```

Alternatively, to build from source, clone this repository under ${ISAAC_ROS_WS}/src:

```shell
cd ${ISAAC_ROS_WS}/src && \
  git clone -b release-3.1 https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_object_detection.git isaac_ros_object_detection
```

Launch the Docker container using the run_dev.sh script:

```shell
cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh
```

Use rosdep to install the package’s dependencies:

```shell
rosdep install --from-paths ${ISAAC_ROS_WS}/src/isaac_ros_object_detection --ignore-src -y
```

Build the package from source:

```shell
cd ${ISAAC_ROS_WS} && \
  colcon build --symlink-install --packages-up-to isaac_ros_rtdetr
```

Source the ROS workspace:

Note

Make sure to repeat this step in every terminal created inside the Docker container.

Since this package was built from source, the enclosing workspace must be sourced for ROS to be able to find the package’s contents.

```shell
source install/setup.bash
```
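Because this step has to be repeated in every new terminal, one option is to have your shell's rc file source the workspace automatically. A minimal sketch; `add_source_line` is a hypothetical helper, and changes to `~/.bashrc` inside the Isaac ROS Dev container may not persist if the container is recreated:

```shell
# Hypothetical helper: append a guarded "source" line to an rc file, but only
# once, so repeated runs do not duplicate it. The guard skips sourcing when
# the workspace has not been built yet.
add_source_line() {
  local rc_file="$1" setup_file="$2"
  local line="[ -f '$setup_file' ] && source '$setup_file'"
  touch "$rc_file"
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet
  if ! grep -qxF -- "$line" "$rc_file"; then
    printf '%s\n' "$line" >> "$rc_file"
  fi
}
```

For example, `add_source_line ~/.bashrc "${ISAAC_ROS_WS}/install/setup.bash"` makes new interactive shells in the same container source the workspace.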

Run Launch File

Continuing inside the Docker container, convert the encrypted model (.etlt) to a TensorRT engine plan:

```shell
/opt/nvidia/tao/tao-converter -k sdetr -t fp16 \
  -e ${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan \
  -p images,1x3x640x640,2x3x640x640,4x3x640x640 \
  -p orig_target_sizes,1x2,2x2,4x2 \
  ${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.etlt
```

Note

Model conversion time varies across platforms. On Jetson AGX Orin, for example, engine conversion can take 10 to 15 minutes.
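Each `-p` flag passed to tao-converter has the form `<input_name>,<min_shape>,<opt_shape>,<max_shape>`, so the conversion command builds an engine whose leading (batch) dimension can range from 1 to 4, with TensorRT tuning for a batch of 2. The hypothetical helper below only splits such a value into labeled parts, which may help when adapting the command to another RT-DETR model:

```shell
# Hypothetical helper: split one tao-converter "-p" value of the form
# <input_name>,<min_shape>,<opt_shape>,<max_shape> into labeled parts.
describe_profile() {
  local name min opt max extra
  IFS=, read -r name min opt max extra <<< "$1"
  if [ -z "$max" ] || [ -n "$extra" ]; then
    echo "expected <input_name>,<min>,<opt>,<max>, got: $1" >&2
    return 1
  fi
  echo "$name: min=$min opt=$opt max=$max"
}

describe_profile images,1x3x640x640,2x3x640x640,4x3x640x640
# prints: images: min=1x3x640x640 opt=2x3x640x640 max=4x3x640x640
describe_profile orig_target_sizes,1x2,2x2,4x2
# prints: orig_target_sizes: min=1x2 opt=2x2 max=4x2
```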

To run the demo with the quickstart rosbag, continue inside the Docker container and install the following dependencies:

```shell
sudo apt-get install -y ros-humble-isaac-ros-examples
```

Run the following launch file to spin up a demo of this package using the quickstart rosbag:

```shell
ros2 launch isaac_ros_examples isaac_ros_examples.launch.py launch_fragments:=rtdetr \
  interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_rtdetr/quickstart_interface_specs.json \
  engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan
```

Open a second terminal inside the Docker container:

```shell
cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh
```

Run the rosbag file to simulate an image stream:

```shell
ros2 bag play -l ${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_rtdetr/quickstart.bag
```

To run the demo with a RealSense camera instead, first make sure you have set up your RealSense camera using the RealSense setup tutorial. If you have not, set up the sensor and then restart this quickstart from the beginning.

Continuing inside the Docker container, install the following dependencies:

```shell
sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-realsense
```

Run the following launch file to spin up a demo of this package using a RealSense camera:

```shell
ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
  launch_fragments:=realsense_mono_rect,rtdetr \
  engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan
```

Note

The sdetr_grasp model used in this quickstart is trained only on a specific subset of objects. To run the quickstart with a live camera stream, you will need to procure and present one of the objects listed in the appendix of the SyntheticaDETR model card.

To run the demo with a Hawk camera instead, first make sure you have set up your Hawk camera using the Hawk setup tutorial. If you have not, set up the sensor and then restart this quickstart from the beginning.

Continuing inside the Docker container, install the following dependencies:

```shell
sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-argus-camera
```

Run the following launch file to spin up a demo of this package using a Hawk camera:

```shell
ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
  launch_fragments:=argus_mono,rectify_mono,rtdetr \
  engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan
```

Note

The sdetr_grasp model used in this quickstart is trained only on a specific subset of objects. To run the quickstart with a live camera stream, you will need to procure and present one of the objects listed in the appendix of the SyntheticaDETR model card.

To run the demo with a ZED camera instead, first make sure you have set up your ZED camera using the ZED setup tutorial.

Continuing inside the Docker container, install the following dependencies:

```shell
sudo apt-get install -y ros-humble-isaac-ros-examples ros-humble-isaac-ros-image-proc ros-humble-isaac-ros-zed
```

Run the following launch file to spin up a demo of this package using a ZED camera:

```shell
ros2 launch isaac_ros_examples isaac_ros_examples.launch.py \
  launch_fragments:=zed_mono_rect,rtdetr \
  engine_file_path:=${ISAAC_ROS_WS}/isaac_ros_assets/models/synthetica_detr/sdetr_grasp.plan \
  interface_specs_file:=${ISAAC_ROS_WS}/isaac_ros_assets/isaac_ros_rtdetr/zed2_quickstart_interface_specs.json
```

Note

If you are using a ZED X series camera, replace zed2_quickstart_interface_specs.json with zedx_quickstart_interface_specs.json in the command above.

Note

The sdetr_grasp model used in this quickstart is trained only on a specific subset of objects. To run the quickstart with a live camera stream, you will need to procure and present one of the objects listed in the appendix of the SyntheticaDETR model card.
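The launch commands for the different input sources differ only in their launch fragments and interface specs file. As a convenience, a hypothetical helper can print the matching command for each source. The fragment names and paths are copied from the commands in this section; `quickstart_launch_cmd` itself is not part of Isaac ROS:

```shell
# Hypothetical helper: print the quickstart launch command for one input
# source (rosbag, realsense, hawk, zed2, or zedx).
quickstart_launch_cmd() {
  local assets="${ISAAC_ROS_WS}/isaac_ros_assets"
  local engine="$assets/models/synthetica_detr/sdetr_grasp.plan"
  local fragments="" specs=""
  case "$1" in
    rosbag)
      fragments="rtdetr"
      specs="$assets/isaac_ros_rtdetr/quickstart_interface_specs.json" ;;
    realsense)
      fragments="realsense_mono_rect,rtdetr" ;;
    hawk)
      fragments="argus_mono,rectify_mono,rtdetr" ;;
    zed2 | zedx)
      fragments="zed_mono_rect,rtdetr"
      # zed2 -> zed2_quickstart_..., zedx -> zedx_quickstart_...
      specs="$assets/isaac_ros_rtdetr/${1}_quickstart_interface_specs.json" ;;
    *)
      echo "unknown source '$1' (expected rosbag, realsense, hawk, zed2, or zedx)" >&2
      return 1 ;;
  esac
  local cmd="ros2 launch isaac_ros_examples isaac_ros_examples.launch.py"
  cmd+=" launch_fragments:=$fragments engine_file_path:=$engine"
  if [ -n "$specs" ]; then
    cmd+=" interface_specs_file:=$specs"
  fi
  echo "$cmd"
}

quickstart_launch_cmd hawk
```

Printing the command and reviewing it before running it (for example, with `eval "$(quickstart_launch_cmd rosbag)"`) keeps the helper transparent about what it launches.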

Visualize Results

Open a new terminal inside the Docker container:

```shell
cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh
```

Run the RT-DETR visualization script:

```shell
ros2 run isaac_ros_rtdetr isaac_ros_rtdetr_visualizer.py
```

Open another terminal inside the Docker container:

```shell
cd ${ISAAC_ROS_WS}/src/isaac_ros_common && \
  ./scripts/run_dev.sh
```

Visualize and validate the output of the package with rqt_image_view:

```shell
ros2 run rqt_image_view rqt_image_view /rtdetr_processed_image
```
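If rqt_image_view shows nothing, a quick first check is whether `/rtdetr_processed_image` is being published at all. A minimal sketch; `topic_present` is a hypothetical helper that reads a topic list on stdin and matches the topic name exactly:

```shell
# Hypothetical helper: succeed if the exact topic name in $1 appears as a
# line on stdin (for example, the output of `ros2 topic list`).
topic_present() {
  if grep -qxF -- "$1"; then
    echo "found: $1"
  else
    echo "not found: $1 (is the visualizer running?)" >&2
    return 1
  fi
}
```

Usage: `ros2 topic list | topic_present /rtdetr_processed_image`. Once the topic appears, `ros2 topic hz /rtdetr_processed_image` reports how often it is published.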

After about 1 minute, your output should look like this:

Try More Examples

To continue your exploration, check out the following suggested examples:

This package only supports models based on the RT-DETR architecture. Some of the supported RT-DETR models from NGC:

| Model Name | Use Case |
|---|---|
| | Model trained on 100% synthetic data for object classes that can be grasped by a standard robot arm |
| | Model trained on 100% synthetic data for object classes that are relevant to the operation of an Autonomous Mobile Robot |

To learn how to use these models, click here.

Troubleshooting

Isaac ROS Troubleshooting

For solutions to problems with Isaac ROS, see troubleshooting.

Deep Learning Troubleshooting

For solutions to problems with using DNN models, see troubleshooting deeplearning.

API

Usage

```shell
ros2 launch isaac_ros_rtdetr isaac_ros_rtdetr.launch.py \
  model_file_path:=<path to .onnx> engine_file_path:=<path to .plan> \
  input_tensor_names:=<input tensor names> input_binding_names:=<input binding names> \
  output_tensor_names:=<output tensor names> output_binding_names:=<output binding names> \
  verbose:=<TensorRT verbosity> force_engine_update:=<force TensorRT update>
```
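When you adapt this launch file to your own ONNX model, one convention is to keep the TensorRT plan next to the model with a `.plan` extension. The hypothetical helper below fills in the launch arguments that way. The tensor and binding names depend on your model and are passed through verbatim, in whatever format the launch file expects; `verbose` and `force_engine_update` are left at their defaults:

```shell
# Hypothetical helper: print an isaac_ros_rtdetr launch command for an ONNX
# model, deriving the engine path by swapping ".onnx" for ".plan".
rtdetr_launch_cmd() {
  if [ "$#" -ne 5 ]; then
    echo "usage: rtdetr_launch_cmd <model.onnx> <in_tensors> <in_bindings> <out_tensors> <out_bindings>" >&2
    return 1
  fi
  local model="$1" in_tensors="$2" in_bindings="$3" out_tensors="$4" out_bindings="$5"
  case "$model" in
    *.onnx) ;;
    *) echo "expected a .onnx file, got: $model" >&2; return 1 ;;
  esac
  local engine="${model%.onnx}.plan"
  echo "ros2 launch isaac_ros_rtdetr isaac_ros_rtdetr.launch.py" \
    "model_file_path:=$model engine_file_path:=$engine" \
    "input_tensor_names:=$in_tensors input_binding_names:=$in_bindings" \
    "output_tensor_names:=$out_tensors output_binding_names:=$out_bindings"
}
```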

RtDetrPreprocessorNode

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
| | | | The name of the encoded image tensor binding in the input tensor list. |
| | | | The name of the encoded image tensor binding in the output tensor list. |
| | | | The name of the target image size tensor binding in the output tensor list. |
| | | | The height of the original image, for resizing the final bounding box to match the original dimensions. |
| | | | The width of the original image, for resizing the final bounding box to match the original dimensions. |

ROS Topics Subscribed

| ROS Topic | Interface | Description |
|---|---|---|
| | | The tensor that contains the encoded image data. |

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
| | | Tensor list containing encoded image data and image size tensors. |

RtDetrDecoderNode

ROS Parameters

| ROS Parameter | Type | Default | Description |
|---|---|---|---|
| | | | The name of the labels tensor binding in the input tensor list. |
| | | | The name of the boxes tensor binding in the input tensor list. |
| | | | The name of the scores tensor binding in the input tensor list. |
| | | | The minimum score required for a particular bounding box to be published. |
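The score threshold works as a simple filter: detections scoring below it are dropped before publishing. A sketch of that idea on made-up `label score` lines, not the node's actual implementation; whether the node keeps scores exactly equal to the threshold (`>=` versus `>`) is an assumption here, so check the decoder source if that edge case matters:

```shell
# Hypothetical sketch of confidence thresholding: read "label score" pairs on
# stdin and keep those whose score is at least the threshold in $1.
# The inclusive comparison (>=) is an assumption, not confirmed behavior.
filter_by_confidence() {
  awk -v t="$1" '$2 + 0 >= t + 0'
}

printf '%s\n' "obj_a 0.95" "obj_b 0.40" "obj_c 0.90" | filter_by_confidence 0.9
# prints:
# obj_a 0.95
# obj_c 0.90
```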

ROS Topics Subscribed

| ROS Topic | Interface | Description |
|---|---|---|
| | | The tensor that represents the inferred aligned bounding boxes, labels, and scores. |

ROS Topics Published

| ROS Topic | Interface | Description |
|---|---|---|
| | | Aligned image bounding boxes with detection class. |