Isaac ROS DNN Stereo Depth

Overview

Depth perception from vision is useful across many fields of robotics: estimating the pose of a robotic arm in an object manipulation task, estimating the distance to static or moving targets in autonomous robot navigation, tracking targets in delivery robots, and so on. Isaac ROS DNN Stereo Depth targets two Isaac applications, Isaac Manipulator and Isaac Perceptor. In Isaac Manipulator, ESS is deployed in the Isaac ROS cuMotion package as a plug-in node that provides depth maps for robot arm motion planning and control. In this scenario, multi-camera stereo streams of industrial robot arms performing a tabletop task are passed to ESS to obtain corresponding depth streams. The depth streams are used to segment the relative distance of the robot arms from the objects on the table, providing signals for collision avoidance and fine-grained control. Similarly, the Isaac Perceptor application uses several Isaac ROS packages: Isaac ROS Nova, Isaac ROS Visual SLAM, Isaac ROS Stereo Depth (ESS), Isaac ROS Nvblox, and Isaac ROS Image Pipeline.

ESS is deployed in Isaac Perceptor to enable Nvblox to create 3D voxelized images of the robot's surroundings. Specifically, the Nova developer suite provides three stereo camera streams to Isaac Perceptor, one each from the front, left, and right cameras. In both Isaac Manipulator and Isaac Perceptor, a camera-specific image processing pipeline of GPU-accelerated operations rectifies and undistorts the input stereo images. All stereo image pairs are time-synchronized before being passed to ESS. The ESS node outputs a depth map for each of the three preprocessed image streams, and these depth images are combined with motion signals provided by the cuVSLAM module. The combined depth and motion signals are fed to the Nvblox module to produce a dense 3D volumetric reconstruction of the surrounding scene.
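The time synchronization of stereo pairs described above can be illustrated with a small sketch. This is not the pipeline's actual implementation; the function name `pair_by_timestamp`, the tolerance value, and the timestamps are all hypothetical. It pairs left and right frames whose capture times differ by no more than a tolerance and drops frames that have no partner.

```python
def pair_by_timestamp(left_stamps, right_stamps, tolerance_s):
    """Greedily pair sorted left/right timestamps that differ by <= tolerance_s.

    Returns a list of (left_index, right_index) tuples.
    """
    pairs = []
    i = j = 0
    while i < len(left_stamps) and j < len(right_stamps):
        delta = left_stamps[i] - right_stamps[j]
        if abs(delta) <= tolerance_s:
            pairs.append((i, j))
            i += 1
            j += 1
        elif delta < 0:
            i += 1  # left frame is older than any remaining right frame: drop it
        else:
            j += 1  # right frame is older than any remaining left frame: drop it
    return pairs


# Hypothetical capture times in seconds; the second left frame has no partner.
left = [0.000, 0.033, 0.066, 0.100]
right = [0.001, 0.050, 0.067, 0.099]
matched = pair_by_timestamp(left, right, tolerance_s=0.005)
print(matched)
```

Only matched pairs would be forwarded for disparity estimation. A tighter tolerance reduces the apparent motion between the two images of a pair, at the cost of dropping more frames.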

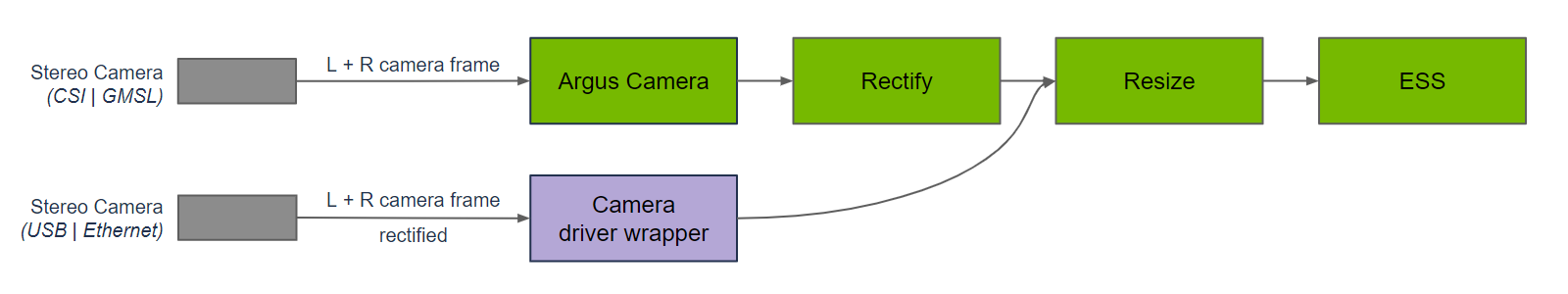

Above, the ESS node is used in a graph of nodes to provide a disparity prediction from an input left and right stereo image pair. The rectify and resize nodes pre-process the left and right frames to the appropriate resolution. Maintain the aspect ratio of the image when resizing to avoid degrading the depth output quality. The graph for DNN encode, DNN inference, and DNN decode is included in the ESS node. Inference is performed using TensorRT, as the ESS DNN model is designed with optimizations supported by TensorRT.
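One way to respect the aspect-ratio recommendation is to scale the image uniformly until it fits the target resolution and pad the remainder. The sketch below only computes the sizes involved. The function name and the 960x576 target are assumptions chosen for illustration, not values taken from this package.

```python
def fit_preserving_aspect(src_w, src_h, dst_w, dst_h):
    """Scale (src_w, src_h) uniformly so it fits inside (dst_w, dst_h).

    Returns (new_w, new_h, pad_w, pad_h), where the pad values are the
    pixels left over on each axis after the uniform resize.
    """
    # Integer cross-multiplication decides which axis limits the scale.
    if dst_w * src_h <= dst_h * src_w:
        new_w = dst_w
        new_h = round(src_h * dst_w / src_w)
    else:
        new_h = dst_h
        new_w = round(src_w * dst_h / src_h)
    return new_w, new_h, dst_w - new_w, dst_h - new_h


# Hypothetical 1920x1200 camera resized toward an assumed 960x576 input.
print(fit_preserving_aspect(1920, 1200, 960, 576))
# A source that already has the target aspect ratio needs no padding.
print(fit_preserving_aspect(1920, 1152, 960, 576))
```

When the padding is nonzero, the source and target aspect ratios differ, and stretching the image to fill the target would distort it.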

Quickstarts

Isaac ROS NITROS Acceleration

This package is powered by NVIDIA Isaac Transport for ROS (NITROS), which leverages type adaptation and negotiation to optimize message formats and dramatically accelerate communication between participating nodes.

ESS DNN

ESS stands for Efficient Semi-Supervised stereo disparity, developed by NVIDIA. The ESS DNN is used to predict the disparity for each pixel from stereo camera image pairs. This network improves on classic CV approaches that use epipolar geometry to compute disparity, as the DNN can learn to predict disparity in cases where epipolar geometry feature matching fails. The semi-supervised learning and stereo disparity matching make the ESS DNN robust in environments unseen in the training datasets and with occluded objects. This DNN is optimized for and evaluated with color (RGB) global shutter stereo camera images, and accuracy may vary with monochrome stereo images used in analytic computer vision approaches to stereo disparity.

The predicted disparity values represent the distance a point moves from one image to the other in a stereo image pair (a.k.a. the binocular image pair). The disparity is inversely proportional to the depth (i.e. disparity = focalLength × baseline / depth). Given the focal length and baseline of the camera that generates a stereo image pair, the predicted disparity map from the isaac_ros_ess package can be used to compute depth and generate a point cloud.
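The relation above can be inverted to recover depth, depth = focalLength × baseline / disparity, and each pixel can then be back-projected into 3D. The following is a minimal sketch using plain Python lists in place of image arrays. The function names, the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), and the camera values are assumptions for illustration and are not part of the isaac_ros_ess API.

```python
import math


def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Return depth in meters, or NaN when the disparity is not positive."""
    if disparity_px <= 0.0:
        return math.nan
    return focal_length_px * baseline_m / disparity_px


def back_project(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth_m to (x, y, z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def disparity_map_to_points(disparity, fx, fy, cx, cy, baseline_m):
    """Convert a row-major disparity map into a list of 3D points."""
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            z = disparity_to_depth(d, fx, baseline_m)
            if not math.isnan(z):
                points.append(back_project(u, v, z, fx, fy, cx, cy))
    return points


# Hypothetical camera: 480 px focal length, 0.15 m baseline,
# principal point at pixel (1, 1). Non-positive disparities are skipped.
disparity = [
    [36.0, 18.0, -1.0],
    [72.0, 9.0, 0.0],
]
points = disparity_map_to_points(disparity, 480.0, 480.0, 1.0, 1.0, 0.15)
for p in points:
    print(p)
```

Because depth is inversely proportional to disparity, halving the disparity doubles the depth, so a fixed disparity error costs more depth accuracy for distant points than for near ones.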

Note

Compare the requirements of your use case against the package input limitations.

DNN Models

An ESS model is required to run isaac_ros_ess. NGC provides pre-trained ESS models for robust depth estimation in your robotics application.

Click here for more information on how to use NGC models.

Confidence and Density

ESS DNN provides two outputs: disparity estimation and confidence estimation. The disparity output can be filtered by thresholding the confidence output, which trades off confidence against density: a higher threshold keeps fewer pixels, but the ones it keeps are more reliable.

isaac_ros_ess filters out pixels with low confidence by setting:

disparity[confidence < threshold] = -1 # -1 means invalid

The choice of threshold value is dependent on use case.
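To make the trade-off concrete, the sketch below applies the same rule to a tiny hand-made example and reports the fraction of pixels that survive at several thresholds. The function names, the sample values, and the assumption that confidence lies in [0, 1] are illustrative only.

```python
INVALID = -1.0  # filtered pixels are marked with -1, as in the rule above


def filter_by_confidence(disparity, confidence, threshold):
    """Return a copy of disparity with low-confidence pixels set to INVALID."""
    return [
        [d if c >= threshold else INVALID for d, c in zip(d_row, c_row)]
        for d_row, c_row in zip(disparity, confidence)
    ]


def density(disparity):
    """Fraction of pixels that still hold a valid disparity."""
    total = sum(len(row) for row in disparity)
    valid = sum(1 for row in disparity for d in row if d != INVALID)
    return valid / total if total else 0.0


# Hypothetical 2x2 disparity map and matching confidence map.
disparity = [[10.0, 12.0], [8.0, 30.0]]
confidence = [[0.9, 0.2], [0.6, 0.4]]

for threshold in (0.0, 0.5, 0.8):
    filtered = filter_by_confidence(disparity, confidence, threshold)
    print(f"threshold={threshold:.1f} density={density(filtered):.2f}")
```

Sweeping the threshold like this on recorded data from the target environment is one way to choose a value that leaves enough valid pixels for the downstream consumer.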

Resolution and Performance

NGC provides ESS and Light ESS models to trade off between resolution and performance. A detailed comparison of the two models can be found here.

| Model Name | Disparity Resolution |
|---|---|
| ESS | Estimate disparity at 1/4 HD resolution |
| Light ESS | Estimate disparity at 1/16 HD resolution |

ESS DNN Plugins

ESS TensorRT custom plugins are used to optimize the ESS DNN for inference on NVIDIA GPUs. The plugins implement custom layers added to the ESS model that are not natively supported by TensorRT. Prebuilt ESS plugins are available for Jetson Orin and x86_64 platforms. The plugins are used during the conversion of the ESS model to a TensorRT engine plan, as well as during TensorRT inference. ESS TensorRT custom plugins are available for download from NGC together with the ESS models.

Packages

Supported Platforms

This package is designed and tested to be compatible with ROS 2 Humble running on Jetson or an x86_64 system with an NVIDIA GPU.

Note

Versions of ROS 2 other than Humble are not supported. This package depends on specific ROS 2 implementation features that were introduced beginning with the Humble release. ROS 2 versions after Humble have not yet been tested.

| Platform | Hardware | Software | Notes |
|---|---|---|---|
| Jetson | | | For best performance, ensure that power settings are configured appropriately. Jetson Orin Nano 4GB may not have enough memory to run many of the Isaac ROS packages and is not recommended. |
| x86_64 | | | |

Docker

To simplify development, we strongly recommend leveraging the Isaac ROS Dev Docker images by following these steps. This will streamline your development environment setup with the correct versions of dependencies on both Jetson and x86_64 platforms.

Note

All Isaac ROS Quickstarts, tutorials, and examples have been designed with the Isaac ROS Docker images as a prerequisite.

Customize your Dev Environment

To customize your development environment, refer to this guide.

Updates

| Date | Changes |
|---|---|
| 2024-09-26 | Update for ZED compatibility |
| 2024-05-30 | Updated for ESS 4.0 with fused kernel plugins |
| 2023-10-18 | Updated for ESS 3.0 with confidence thresholding in multiple resolutions |
| 2023-05-25 | Upgraded model (1.1.0) |
| 2023-04-05 | Source available GXF extensions |
| 2022-10-19 | Updated OSS licensing |
| 2022-08-31 | Update to be compatible with JetPack 5.0.2 |
| 2022-06-30 | Initial release |